The recent OpenAI drama has made something very clear: Microsoft is in control.

At the world's most promising AI startup, staff know that they have a job and resources at Microsoft if they ever want it. Even if they stay, they'll be working on Microsoft cloud hardware, developing products for Microsoft's cloud software, that contribute to Microsoft's bottom line, all for a company part-owned by Microsoft.

The coup and revolt at OpenAI showed that the vast majority of the company's staff prefer this arrangement to one of non-profit independence, marking yet another victory for the Redmond-based backer.

But OpenAI is just a small part of the story of how Microsoft has reinvented itself as the most exciting company in the cloud and data center space.

Just last week, the company revealed that it is operating the third most powerful supercomputer in the world (bar some secret Chinese systems). The Eagle system burst onto the Top500 ranking as the fastest supercomputer ever operated by a private corporation.

The 561 petaflops system, designed for training large language models for generative AI, is built from publicly available ND H100 v5 virtual machines on a single 400G NDR InfiniBand fat tree fabric.

"Eagle is likely the single largest installation of both H100 and NDR InfiniBand on the planet," Microsoft supercomputing researcher and former NERSC employee Glenn K. Lockwood wrote in a personal blog.

"Not only does this signal that it's financially viable to stand up a leadership supercomputer for profit-generating R&D, but industry is now willing to take on the high risk of deploying systems using untested technology if it can give them a first-mover advantage."

Microsoft has made a number of high-profile high-performance computing hires in recent years - alongside Lockwood, it has brought on Cray's CTO Steve Scott, and the head of Cray's exascale efforts, Dr. Dan Ernst, among others. Of the top 50 supercomputers, six are operated by Microsoft.

"Supercomputing is the next wave of hyperscale, in some regard, and you have to completely rethink your processes, whether it's how you procure capacity, how you are going to validate it, how you scale it, and how you are going to repair it," Nidhi Chappell, Microsoft GM for Azure AI, told DCD earlier this year.

The company made this bet before the current generative AI wave, giving it a key advantage. “You don't build ChatGPT's infrastructure from scratch,” Chappell continued.

“We have a history of building supercomputers that allowed us to build the next generation. And there were so many learnings on the infrastructure that we used for ChatGPT, on how you go from a hyperscaler to a supercomputing hyperscaler.”

Microsoft will continue to build out huge Nvidia GPU-based supercomputers, and next year plans to deploy AMD's MI300X GPU at scale.

“Even just looking at the roadmap that I am working on right now, it's amazing, the scale is unprecedented,” Chappell said. “And it's completely required.”

Just a day after the company debuted Eagle, it also unveiled the fruits of another long-gestating project: Azure Maia 100. The first in a new family of in-house AI chips, the accelerator boasts impressive benchmarks and may help provide at least some competition to Nvidia.

"The FLOPS of this chip outright crush Google’s TPUv5 (Viperfish) as well as Amazon’s Trainium/Inferentia2 chips. Surprisingly it’s not even that far off from Nvidia’s H100 and AMD’s MI300X in that department," SemiAnalysis' Dylan Patel and Myron Xie wrote. However, "the more relevant spec here is the memory bandwidth at 1.6TB/s. This still crushes Trainium/Inferentia2, but it is less memory bandwidth than even the TPUv5, let alone the H100 and MI300X."

Given it is a first-generation product, it performs much better than many were expecting.

But the chip is just part of the package. In its announcement, Microsoft was keen to highlight that its true advantage lies in the breadth of its operations.

“Microsoft is building the infrastructure to support AI innovation, and we are reimagining every aspect of our data centers to meet the needs of our customers,” said Scott Guthrie, EVP of Microsoft’s Cloud and AI Group.

“At the scale we operate, it’s important for us to optimize and integrate every layer of the infrastructure stack to maximize performance, diversify our supply chain, and give customers infrastructure choice.”

The chip will only be available in a custom liquid-cooled rack, with Microsoft showing a willingness to buck convention and develop unusually wide racks.

It is doing this all at production scale.

Just in the last four weeks, Microsoft has acquired 580 acres outside Columbus, Ohio, along with an additional 1,030 acres at its Mount Pleasant campus in Wisconsin. It has also filed for a new data center campus in Des Moines, Iowa, and said it will spend $500 million expanding in eastern Canada, $1bn in Georgia, and AU$5bn in Australia.

It also quietly launched its Israeli cloud region; the company now has more than 60 such regions around the world.

Microsoft is reportedly planning to spend some $50 billion a year on data centers (including the hardware within them), an unprecedented buildout that will have a far-reaching impact on the sector.

In the shorter term, it has shown itself not to be precious about relying on other providers as its buildout (and Nvidia's supply chains) catch up - signing deals with CoreWeave and Oracle to use their compute.

At the same time, Microsoft is looking further afield, bolstering its data center research team aggressively. DCD was first to report on its efforts to build data center robots and its plans to develop a global small modular reactor and microreactor strategy to power data centers amid the grid crunch.

When it comes to long-term storage, Microsoft also appears to be in the lead - its Project Silica effort can store 7TB on fused silica glass that lasts 10,000 years. If it can be commercialized, it would dramatically reduce the energy usage of cold storage, and reduce the reliance on rare earth metals.

"Hard drives are languishing, we've had so little capacity increases in the last five years; tape is suffering," Silica lead Dr. Ant Rowstron told DCD in 2022. "There is more and more data being produced, and trying to store that sustainably is a challenge for humanity."

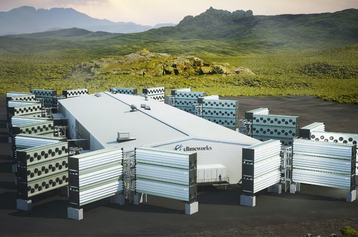

In another long-term move, the company has also vaulted to the front of the carbon capture market. The move is controversial - carbon capture is not a replacement for reducing emissions, is hard to track, and can use renewable energy that would otherwise displace fossil fuels - but it has been wholeheartedly embraced by Microsoft.

To name but a few: Microsoft has paid Climeworks to remove 11,400 metric tons of carbon, Running Tide to remove 12,000 tons, Carbon Streaming to remove 10,000 tons annually, and Heirloom to remove some 300,000 tons. It has also patented the idea of running carbon capture at data center sites.

It is worth reiterating that this is a drop in the ocean compared to the company's emissions, or the amount needed to get the planet back on track. Other Microsoft efforts, such as buying forests, have also backfired after they burned down during climate change-exacerbated wildfires.

But the company is still far ahead of its competitors in funding and experimenting with capture efforts, and is hoping to improve carbon accounting standards through its work with Carbon Call and its investment in FlexiDAO (alongside Google).

Microsoft will have to prove that its latest AI expansion won't disrupt its sustainable energy plans, and it's not clear if there will be enough 24x7 PPAs to match that $50bn a year expansion (as well as the rest of the industry, and other sectors). The company's data center water usage surged 34 percent amid the AI boom.

However, what is clear is that Microsoft will approach the problem with resources and a speed that are rare for a company of its scale. Gone is the Windows-reliant giant happy to rest on its laurels.

"Despite the potential of the past few days to distract us, both Microsoft and OpenAI scientists and engineers have been working with undiminished urgency," Microsoft CTO and EVP of AI Kevin Scott said in an internal memo published by The Verge.

"Since Friday, Azure has deployed new AI compute, our newly formed MSR AI Frontiers organization published their new cutting-edge research Orca 2, and OpenAI continued to ship product like the new voice features in ChatGPT that rolled out yesterday. Any of these things alone would have been the accomplishment of a quarter for normal teams.

"Three such achievements in a week, with a major US holiday and with a huge amount of noise surrounding us, speaks volumes to the commitment, focus, and sense of urgency that everyone has."

While the other hyperscalers are also spending prodigiously and have teams of brilliant researchers, it is hard to see that same level of company-wide urgency.

As the industry rides the generative AI wave, Microsoft has pushed out in front - and shows no sign of slowing down.