Every few years, digital infrastructure undergoes a sudden, unanticipated transformation.

In the early '90s, we saw the growth of the World Wide Web and the client-server model of distributed computing, which eventually resulted in what we know today as the cloud. Within five years, the market flipped. Then came Linux: cloud vendors grew their offerings to support additional services and created frameworks that could abstract the underlying hardware, which in turn sped up innovation. Cloud, initially thought too far-fetched a concept, was quickly adopted by smart movers and has become a massive force for disruption.

Cloud providers have been able to harvest telemetry data for insights into their most popular workloads, which in turn lead to chip optimizations for particular workloads or KPIs. AWS has perhaps gone the furthest with Nitro, a homegrown networking/storage/security subsystem; Inferentia, its machine learning processor; and Graviton2, its general-purpose application processor, which powers instances adopted by Twitter, Snap and Coinbase, among others. Google has developed TPUs as accelerators for neural network machine learning, and other leading cloud providers are developing ASICs for functions like AI and security.

The drive towards silicon verticalization in infrastructure

But why would cloud providers want to undertake the onerous, time-consuming and expensive task of building and deploying custom semiconductors? It boils down to two strong motives: a desire for autonomy, and competitive pressure on every component of performance delivered per dollar of total cost of ownership (TCO). TCO is a complex calculation that drives decision makers in hyperscale data centers; here we’ll focus on one component of it, energy.

When it comes to autonomy, companies want better control over their roadmaps, costs, security, and supply chains. It’s as simple as that. In difficult times, more autonomy can help a company withstand shocks like Covid-19, while in good times it forms the foundation for differentiation. Companies are rarely islands (full vertical integration has proved too costly and complicated in everything from cannons to CAT scanners), but they continually probe their buy/build portfolio to find the optimal mix.

A “custom, but not completely” approach to silicon lets designers eliminate features with potential performance trade-offs (like multithreading) while keeping a foot in the larger ecosystem. Four factors have combined to make silicon diversity a force once again: the size of hyperscale operations, the economic impact that small improvements make at scale, the cloud-native software movement that can support diverse hardware, and the greater availability of tools and technology for in-house chip design. In addition, the rise of third-party silicon manufacturing and silicon IP providers helps dramatically reduce the transaction costs of bringing new designs to market.

For smaller companies, the good news is that the customization genie is out of the bottle. Independent chip designers are spinning up variants of cloud-based processors with different layouts, core count, cache size, speed, memory bandwidth, I/O and other factors to serve the wider market beyond those that can do it themselves. Service providers to today’s largest hyperscalers will likewise carve out niches with performance-optimized, cost-optimized and/or location-optimized instances. Customization at a core level of technology will be for everyone.

All roads lead back to energy

Customization will also become one of the primary tools for addressing the other key factor, the energy component of TCO, as will chip-level integration of diverse silicon components. Energy can come to 40 percent or more of the operating cost of a data center, and power and heat can also increase capital, real estate and maintenance costs. In turn, those higher costs put pressure on profits and customer satisfaction, and innovation slows. Hyperscale data centers are also facing pressure to better control their water and power consumption. Cities such as Amsterdam and Beijing have placed strict limits on data center size and power consumption. If autonomy is about what you want to do, the energy equation is about what you need to do.

In the 2010s, energy efficiency and performance per watt were unsung heroes of the cloud revolution. Data center workloads and Internet traffic grew by 8x and 12x respectively during the decade, but data center power consumption stayed roughly flat.
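To put those figures in perspective, here is a minimal back-of-the-envelope sketch of what they imply for energy per unit of work. It assumes power consumption was exactly flat (the figures above say only "roughly flat") and that the 8x workload growth is measured in comparable units; the function name is hypothetical and used only for illustration.

```python
def energy_per_unit_ratio(workload_growth: float, power_growth: float = 1.0) -> float:
    """Energy per unit of work at the end of a period, relative to the start.

    workload_growth: factor by which workloads grew (8.0 for the 2010s figure).
    power_growth: factor by which total power grew (1.0 = flat, an assumption).
    """
    if workload_growth <= 0:
        raise ValueError("workload_growth must be positive")
    return power_growth / workload_growth


ratio = energy_per_unit_ratio(workload_growth=8.0)
print(f"Energy per unit of workload: {ratio:.3f}x the starting level")
print(f"Implied efficiency gain: {1 / ratio:.1f}x")
```

Under these assumptions, each unit of workload at the end of the decade needed about one-eighth of the energy it needed at the start.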

The 2020s, however, look more challenging. Moore’s Law is delivering diminishing returns, and many of the gains that can be achieved from consolidation, virtualization and cooling have already been harvested. Meanwhile, data center workloads and Internet traffic are growing even faster with videoconferencing, streaming media and AI. Applied Materials predicts that, without significant innovations, data center power consumption could grow from two percent of world electricity consumption to 15 percent by 2025. Higher power consumption could raise operating costs, capital requirements and ultimately the cost of cloud services, which in turn could dampen adoption.

The energy equation is even more challenging for developers of 5G and Edge technology, who will have to contend with even more restrictive performance, power and price parameters.

The good news is that silicon diversity is stepping up to this challenge. Combining CPUs with NPUs can slash the power consumption of inference calculations, paving the way for running more AI operations on individual devices rather than in the cloud. AWS, for its part, says the Graviton2 processor delivers over 3x better performance per watt than traditional processors.
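As a rough illustration of why performance per watt matters for TCO, the sketch below combines the two figures quoted in this article: energy at 40 percent of operating cost, and a 3x performance-per-watt gain. It is a simplification under loud assumptions: all energy use scales with processor efficiency (in practice cooling, storage and networking do not), non-energy costs stay unchanged, and "over 3x" is treated as exactly 3. The function name is hypothetical.

```python
def opex_after_efficiency_gain(energy_share: float, perf_per_watt_gain: float) -> float:
    """Operating cost for the same work, relative to 1.0 before the gain.

    energy_share: fraction of operating cost that is energy (0.4 in the example).
    perf_per_watt_gain: efficiency multiplier (3.0 in the example).
    Assumes every watt scales with the gain, which overstates real savings.
    """
    if not 0.0 <= energy_share <= 1.0:
        raise ValueError("energy_share must be between 0 and 1")
    if perf_per_watt_gain <= 0:
        raise ValueError("perf_per_watt_gain must be positive")
    non_energy = 1.0 - energy_share
    return non_energy + energy_share / perf_per_watt_gain


relative_opex = opex_after_efficiency_gain(energy_share=0.4, perf_per_watt_gain=3.0)
print(f"Relative operating cost: {relative_opex:.3f}")
print(f"Illustrative saving: {(1 - relative_opex) * 100:.1f}%")
```

Even as an upper bound, the result shows how a chip-level efficiency gain can translate into a double-digit change in operating cost at hyperscale.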

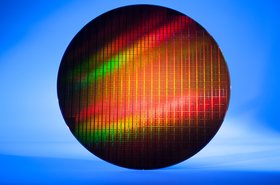

As the decade goes on, diversity will proliferate. Next on the horizon are chiplets that unify hundreds if not thousands of CPUs, GPUs, NPUs and DPUs with terabytes of SRAM and DRAM, along with high-speed interconnects, into a virtual SoC. Chiplets promise to dramatically improve performance by combining optimized silicon into a single device, and to do so at a much lower cost by eliminating many of the yield and design issues that come with producing a monolithic semiconductor. They also provide a more rapid roadmap to adoption by allowing the disparate elements inside a chiplet-based design to advance at their own natural pace. A wafer-scale chiplet-based design might draw a kilowatt of power, but it will be capable of petaflop-level performance and of managing far more tasks than today’s processors.

And of course, there will be unexpected breakthroughs along the way. We can’t predict exactly how the roadmap will unfold, but the next decade will be a fascinating time when it comes to core silicon innovation.