Data center construction is a specialized task, and builders have evolved particular ways to handle it. But what would you do if you suddenly had to build a data center underwater?

It’s a serious question. Tests in the US and China have shown that pressure vessels on the sea bed could deliver data center services - and provide benefits in terms of reliability and efficiency. Commercial roll out has begun, and engineers are working on the practical issues.


Launching Microsoft’s Natick

It all started with Project Natick, a Microsoft project which served some Azure workloads from an eight-foot (2.4m) cylinder, 30ft (9m) underwater off the Pacific coast of the US in 2015. Microsoft followed up in 2018 with 12 racks of servers in a container 12m long, which ran for two years in 117 feet of water in Scotland’s Orkney Islands.

Phase 2, the Scottish experiment, was focused on “researching whether the concept is logistically, environmentally and economically practical,” so it took several steps towards an actual commercial construction model.

Microsoft subcontracted the build process to Naval Group, a 400-year-old French marine engineering company, with experience in naval submarines and civil nuclear power.

The Microsoft team presented Naval Group with general specifications for the underwater data center and let the company take the lead on the design and manufacture of the vessel deployed in Scotland. This included the creation of interfaces to other systems such as its umbilical cable, and the delivery of the actual system.

“At the first look, we thought there is a big gap between data centers and submarines, but in fact they have a lot of synergies,” commented Naval CTO Eric Papin at the time.

Submarines are basically big pressure vessels that have to protect complex systems, maintaining the right electrical and thermal conditions.

Naval Group used a heat-exchange process already in use for cooling submarines. It pipes seawater through radiators on the back of each of the 12 server racks and back out into the ocean.

The size of Natick Phase 2 was chosen to make an easy journey towards commercial deployment. Like many land-based data centers, the Natick vessel was designed to have similar dimensions to shipping containers used in ships, trains, and trucks.

The data center was bolted shut and tested in France, then loaded onto an 18-wheel articulated truck and driven to the Orkney Islands, including multiple ferry crossings. In Scotland, the vessel was fixed to the ballast-filled triangular base which would keep it on the sea bed.

The data center was then loaded onto a gantry barge, which was towed out to sea to the eventual deployment site.

Microsoft said the experiment was a success. In particular, it found that its Natick servers failed at one-eighth the rate of land-based ones, because they were sealed in a nitrogen atmosphere and weren’t disturbed by any engineers.

What happened to Natick Phase 3?

After Microsoft claimed success for Natick Phase 2, observers expected the company to move straight on to a more commercialized deployment. Indeed, it promptly patented the idea for a giant “reef” of containers holding servers, and things looked interesting.

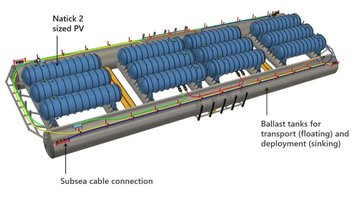

One image of “Azure Natick Gen 3.12” has surfaced, suggesting a step-change in capacity. The design shows a 300m long steel frame, holding 12 data center cylinders similar to the one from Phase 2. A figure of 5MW for the total structure has been suggested - which would work out to roughly 35kW for each of the 144 racks it could hold.

If that’s not ambitious enough, Microsoft has suggested it could group multiple 5MW modules together to create underwater Azure availability zones.

As you’d expect from a project transitioning from test to development, there are new practical aspects to the design. Each frame has two long ballast tanks which can be filled with air, so the unit can be floated into position without support vessels.

Once on site, the tanks can be filled with water to sink it to the sea bed.

Despite these details, Microsoft has said very little about any third generation of Natick, and declined DCD’s invitation to elaborate.

But Systems Engineering students at the University of Maryland were asked to analyze the likely lifecycle of an underwater data center from need, through operations, to retirement.

One resulting essay, published on Medium, said: “A Natick data center deployment cycle will last up to five years, which is the anticipated lifespan of the computers contained within the deep-water servers. After each five-year deployment cycle, the data center would be retrieved, reloaded with new computers, and redeployed. The target lifespan of a Natick data center is at least 20 years. After that, the data center is designed to be retrieved, recycled, and redeployed.”

While Microsoft is keeping tight-lipped about any plans to commercialize its ideas for underwater data centers, it did include some Natick ideas in the Low Carbon Pledge, a promise to give free access to a raft of patents designed to make data centers more sustainable.

Over to China

Meanwhile, the next move in the underwater data center race came from China. A company called Beijing Highlander launched a four-rack test vessel at a port in Guangdong province in early 2021, and ran live China Telecom data on the servers later that year.

That same year, Highlander quickly followed up with a bigger test at the Hainan Free Trade Port, and announced the world’s first commercial underwater data center would be constructed from 100 of its data cabins, networked on the sea bed, connected to land by power-and-data cables, and powered by low-carbon electricity from Hainan’s nuclear power station.

Coastal regions of China are adopting the idea of underwater data centers. The concept is included in the five-year economic plans of several Chinese regional authorities, including the provinces of Hainan and Shandong, as well as the coastal cities of Xiamen and Shenzhen.

In January 2022, contracts were signed to deliver the Hainan data center. Like Microsoft, Highlander engaged a more experienced marine engineering partner to handle the actual construction of the eventual data center.

As its name suggests, Offshore Oil Engineering Co (COOEC) is a marine firm with a history in the oil industry. It announced its partnership with Highlander in a release that makes it clear it sees the data center project as part of a move from the oil industry to a low-carbon strategy.

COOEC says the Hainan data center project will allow it to "expand from traditional offshore oil and gas engineering products to new offshore businesses." Beyond that, it says it is keen to enter green marine engineering industries such as offshore wind power.

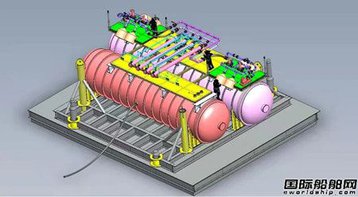

The company says it is working on data cabins at its Tianjin Lingang manufacturing site, to be deployed in 20m deep water off the coast of the Hainan Free Trade Port.

The announcement says the cabins will weigh 1,300 tons (the equivalent of around 1,000 cars), and be connected to land via a “placenta” tank with a diameter of 3.6 meters. COOEC says this will make it the world's largest submarine data cabin - though as far as we know at DCD, with the retirement of Natick Phase 2, it will be more or less the only submarine data cabin besides the smaller Highlander test vessel.

Details are emerging about the efforts COOEC and Highlander are making to deliver an engineering solution for underwater data centers that can be constructed in volume.

COOEC says the cabins will be “innovative marine equipment,” and will have to be constructed to withstand the sea pressure. The system will also have to resist corrosion.

The cabin will hold sophisticated electronics, and will need a large number of openings for pipelines and cables to pass through. All of these will need high-pressure, non-corroding seals.

“From scheme research, engineering design, construction to testing, there are very big technical challenges,” COOEC says.

In one small additional detail, COOEC says the external circulation pipework will be recyclable.

COOEC will handle the design of the vessels, procurement of materials, manufacturing, and testing. It will also manage the land transportation of the modules and ships to take them to their eventual working environment.

Highlander says the system will consume no water, and will use less energy than a land-based facility.

Despite the amount of steel involved in the cabins, the two companies say the underwater facility will have a lower construction cost than a land-based one and (obviously enough) will save on the use of land.

Like Microsoft, Highlander praised the reliability of underwater servers. "It is a new type of marine engineering that effectively saves energy and resources and integrates technology, big data, low carbon, and green, and has far-reaching significance for promoting the green development of the data industry," COOEC and Highlander say in their press release.

Let’s go deeper!

While Highlander is the front-runner in actually deploying underwater data centers, a rival US firm is promising to set a depth record - at least in principle - for a concept announced early in 2022.

Microsoft and Highlander have not spoken of going any deeper than 120m (400ft), but Texas-based Subsea Cloud claims it will go way deeper, to 3,000m (9,850ft).

Subsea says it wants to take its liquid-cooled underwater data center pods (UDCPs) to these depths for security reasons, so they will be safe from interference by divers and submersibles, which cannot reach those depths.

"You can’t [access these pods] with divers,” said CEO Maxie Reynolds. “You’re going to need some very disruptive equipment. You can’t do it with a submarine, they don’t go deep enough. So you’re gonna need a remote operated vehicle (ROV) and those are very trackable. It takes care of a lot of the physical side of security.”

Reynolds has a background in marine engineering and security penetration testing, and claims her engineering partners, connected with offshore technology company Energy Subsea, have pressure-tested the pods to show they can work at 3,000m.

We’ve got a big caveat here: Subsea has not shared very much about its pods or how they were tested. Despite that, it’s a proposal that offers interesting challenges to anyone involved in the field - and suggests that this emerging idea could still change radically.

Instead of heavy-duty pressure vessels, Reynolds says Subsea is working on simple, lightweight pods which will each hold around 800 servers, and have a “far more simple and proven design” than Microsoft or Highlander.

Though the pods are designed to operate at very high pressure, she says they “don’t use pressure vessels (a competitive advantage for us).”

At sea level, every square inch of a surface is subjected to a force of about 14.7 pounds. This is atmospheric pressure. Under the sea, pressure increases rapidly, by roughly one atmosphere every 10m; so at 3,000m, a shell designed to keep that pressure out would have to withstand about 300 atmospheres of excess pressure.
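As a rough check on those figures, here is a minimal sketch of the hydrostatic pressure at depth. It assumes a typical seawater density of about 1,025 kg/m³ and standard gravity, neither of which comes from the companies involved, and the function name is our own.

```python
# Rough hydrostatic pressure estimate. All constants are textbook
# approximations assumed for illustration, not figures from any vendor.
SEAWATER_DENSITY = 1025.0   # kg/m^3, assumed typical value
GRAVITY = 9.80665           # m/s^2, standard gravity
ATMOSPHERE_PA = 101_325.0   # Pa in one standard atmosphere
PSI_PER_ATM = 14.696        # pounds per square inch in one atmosphere


def excess_pressure_atm(depth_m: float) -> float:
    """Pressure above the surface value, in atmospheres, at a given depth."""
    return SEAWATER_DENSITY * GRAVITY * depth_m / ATMOSPHERE_PA


# Depths mentioned in the article: 10m, 120m, and Subsea Cloud's 3,000m.
for depth in (10, 120, 3000):
    atm = excess_pressure_atm(depth)
    print(f"{depth:>5} m: ~{atm:.1f} atm excess (~{atm * PSI_PER_ATM:.0f} psi)")
```

Under these assumptions the excess pressure at 3,000m comes out just under 300 atmospheres, in line with the one-atmosphere-per-10m rule of thumb.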

“Both Highlander and Microsoft have opted to use pressure vessels. This design matters because the deeper data centers go into the oceans, the more pressure they incur,” says Reynolds. “To withstand the pressure they incur, they must make the walls of the data centers thicker. This affects many elements of the logistics on land and the deployment subsea.”

If you don’t attempt to recreate conditions on land, you can allow the pressure inside the vessel to equalize with the water outside, and you no longer need such a physically strong structure.

Subsea has shared a photo of its system - a square yellow container that looks sturdy, but is clearly not a pressure vessel. Visible openings in the box are presumably designed to allow pressure to equalize. The electronics must be able to work at a high pressure, probably immersed in some sort of oil.

“As subsea engineers, we've designed ours to be versatile whilst maintaining its design integrity. The data center pods will work in shallow depths just as they will at deeper depths. The design ensures that at any depth, the pressure inside the housing is equal to the pressure outside – we make no changes to accommodate different depths because we don't have to,” says Reynolds.

To sum up, she says: “We designed the solution based on subsea engineering principles, not on submarine engineering principles (broadly speaking).”

Construction developments

Noting that modular construction is already used in land-based containerized data centers, Reynolds predicts that underwater installations could leapfrog to new levels of prefabrication: “this type of construction isn’t held back by legacy manufacturing issues and the industry, as well as the businesses that it supports need to scale quickly.”

She also points out that modular construction processes are well established offshore: “Historically, modular construction has been the norm subsea. Things must be fabricated in sections and laid in sections.”

Some of the other parts of construction are also easier, because the sea bed does not already have buildings and other infrastructure installed, she tells DCD.

“If you think of the complexities of laying cables to data centers on land, it is resource-intensive and expensive (it could cost millions per mile). It faces many bureaucratic and logistical challenges,” she says. “A cable deployed subsea takes a matter of weeks and actually costs less. Laying a cable subsea can cost as little as $50,000 per mile. It’s counterintuitive.”

If underwater data centers ever reach bulk production, that will cut the costs, she says: “Most everything is cheaper in bulk.”

While Natick had to ship one module from France to Scotland, and then charter a boat to tow it to sea, future deployments would plan for shorter travel, and deploy in larger numbers.

“It will be more efficient to deploy in large numbers simply because connecting to existing infrastructure needs a vessel, which has a day rate,” says Reynolds. “You want to lay as many as you can in one go. A vessel can typically carry 80 units. It is more economical to use it to its full capacity.”
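The economics of that argument can be illustrated with a minimal sketch. The 80-pod capacity comes from the quote above; the day rate and days per trip are placeholder numbers invented purely for illustration, and the function name is hypothetical.

```python
import math

PODS_PER_VESSEL = 80      # capacity quoted by Reynolds
DAY_RATE_USD = 100_000    # hypothetical placeholder, not a real vessel rate
DAYS_PER_TRIP = 5         # hypothetical placeholder


def vessel_cost_per_pod(pods: int) -> float:
    """Vessel hire cost attributed to each pod when deploying `pods` units."""
    trips = math.ceil(pods / PODS_PER_VESSEL)  # a part-full vessel costs the same
    return trips * DAYS_PER_TRIP * DAY_RATE_USD / pods


for pods in (1, 20, 80, 81):
    print(f"{pods:>3} pods: ${vessel_cost_per_pod(pods):,.0f} of vessel time per pod")
```

Whatever the real day rate, the per-pod share falls as the vessel fills up and jumps again as soon as an extra trip is needed, which is the point Reynolds is making.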

While Subsea’s pods are designed for deep-sea deployment, they will operate just as well in shallow water, though they might have to be buried for security, says Reynolds: “The specs of each pod will not change for us, deep or shallow. Installation engineering will change.”

For instance, in deep water, the pods will have to be maneuvered into place using a thruster tool, while that won’t be needed in shallow water.

With large concentrations of underwater pods, it will be possible to locate a maintenance vessel nearby. Small lightweight pods can be pulled to the surface quickly to be worked on.

“If we deploy in a large cluster, let’s say more than 20 pods, there will be a nearby maintenance vessel which will react to large faults or disturbances,” she says. “To disconnect, recover, replace servers, redeploy, will take us around six to eight hours.”

But most underwater data centers will have spare hardware in place, so operations can be rerouted without having to bring the module to the surface: “We have redundancy in place for all pods. I presume any other company in the space will offer similar and they will also see the benefits of different classes of monitoring.”

It’s sometimes asserted that the sea is governed by different rules, generally aimed at controlling fishing and mineral extraction. Data center operators may hope that they can be free of tiresome zoning rules created for land-based buildings, but they may still have planning headaches.

“In the US and Asia, planning permission will not necessarily be easier, unfortunately,” warns Reynolds. “The governing system for subsea permitting is underdeveloped relative to future needs, but it will catch up as the industry shifts.”