Amazon Web Services plans to launch a new chip for training machine learning models.

Trainium was developed in-house, and joins the company's existing Inferentia processor, which focuses on inference workloads.

Each cloud an island

Chip specifics were not revealed, but Amazon claimed Trainium will offer the most teraflops of any machine learning instance in the cloud.

The company says it will offer 30 percent higher throughput and 45 percent lower cost-per-inference than the standard AWS GPU instances. But by the time it is released in the second half of 2021, new GPUs may be available and prices may have changed.

No benchmarks were revealed, so it's not possible to compare the hardware to Google's own in-house TPU chips, soon set to be in their fourth generation.

Amazon also plans to roll out another machine learning processor to its cloud service, the Intel Habana Gaudi.

Intel acquired Habana Labs back in 2019 for some $2bn, and soon replaced its own Nervana line - itself part of a prior acquisition - with Habana's chips.

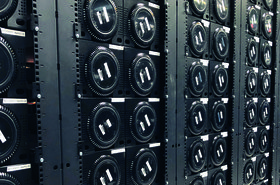

Now, Habana's Gaudi processors are nearly ready for prime time, and are due to become available as EC2 instances in early 2021. An 8-card Gaudi EC2 instance can process about 12,000 images per second when training the ResNet-50 model on TensorFlow, Intel claims. The company also boasts of 40 percent better price-performance than current GPU-based EC2 instances for machine learning workloads.
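
To put those vendor figures in perspective, the arithmetic can be sketched in a few lines of Python. This is a rough, illustrative calculation only: the ImageNet-1k training set size is an assumption brought in here (the article does not say which dataset Intel used), and "price-performance" is assumed to mean work done per dollar. The function names are hypothetical.

```python
# Figures quoted in the article (vendor claims, not independent benchmarks).
INSTANCE_IMAGES_PER_SEC = 12_000  # 8-card Gaudi instance, ResNet-50 on TensorFlow
CARDS_PER_INSTANCE = 8
PRICE_PERF_GAIN = 0.40            # "40 percent better price-performance"

# Assumption, not from the article: size of the ImageNet-1k training set,
# the dataset ResNet-50 is commonly trained on.
IMAGENET_TRAIN_IMAGES = 1_281_167


def per_card_throughput(instance_rate: float, cards: int) -> float:
    """Average images per second handled by each card."""
    return instance_rate / cards


def seconds_per_epoch(dataset_size: int, images_per_sec: float) -> float:
    """Time for one pass over the dataset at a steady throughput."""
    return dataset_size / images_per_sec


def cost_reduction(price_perf_gain: float) -> float:
    """Fractional saving for the same work, assuming price-performance
    means work per dollar (an interpretation, not a vendor definition)."""
    return 1 - 1 / (1 + price_perf_gain)


if __name__ == "__main__":
    print(per_card_throughput(INSTANCE_IMAGES_PER_SEC, CARDS_PER_INSTANCE))
    print(seconds_per_epoch(IMAGENET_TRAIN_IMAGES, INSTANCE_IMAGES_PER_SEC))
    print(cost_reduction(PRICE_PERF_GAIN))
```

Under these assumptions, the claim works out to about 1,500 images per second per card, and a 40 percent price-performance gain translates to a bill roughly 29 percent smaller for the same training job, not 40 percent smaller.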

“Our portfolio reflects the fact that artificial intelligence is not a one-size-fits-all computing challenge,” said Remi El-Ouazzane, chief strategy officer of Intel’s Data Platforms Group.

“Cloud providers today are broadly using the built-in AI performance of our Intel Xeon processors to tackle AI inference workloads. With Habana, we can now also help them reduce the cost of training AI models at scale, providing a compelling, competitive alternative in this high-growth market opportunity.”