The Open19 rack and server design, announced two years ago by LinkedIn, is now being deployed in Microsoft-owned data centers, and the blueprints will be published and shared, the Open19 Summit heard this week.

The Open19 design packs standard servers into bricks within a standard 19-inch rack. Its backers claim it cuts the cost of these systems by more than 50 percent by reducing waste in networking and power distribution. The design is being offered under an open source license. The specification, first announced two years ago at DCD>Webscale, is now ready for production use, project leader Yuval Bachar said at this week's Summit in San Jose.

One brick at a time

"Last summer, LinkedIn helped announce the launch of the Open19 Foundation," said Bachar, LinkedIn's chief data center architect and president of the Open19 Foundation. "In the weeks and months to come, we plan to open source every aspect of the Open19 platform, from the mechanical design to the electrical design, to enable anyone to build and create an innovative and competitive ecosystem."

LinkedIn has shared plenty of open source software, but this is the first time the social networking site has released hardware, Bachar said in a post on the LinkedIn Engineering blog. The idea was announced in 2016, and the Foundation was launched in 2017 to manage the process.

Open19 followed on from the Open Compute Project (OCP), which Facebook created in 2011 to share designs for efficient data center hardware that would save money in large-scale deployments. However, OCP's Open Rack standard relies on non-standard 21-inch server racks. Open19 uses traditional 19-inch racks, and aims to create a design that can be used in small facilities, including "edge" locations, as well as in the large data centers at LinkedIn, where it was first conceived.

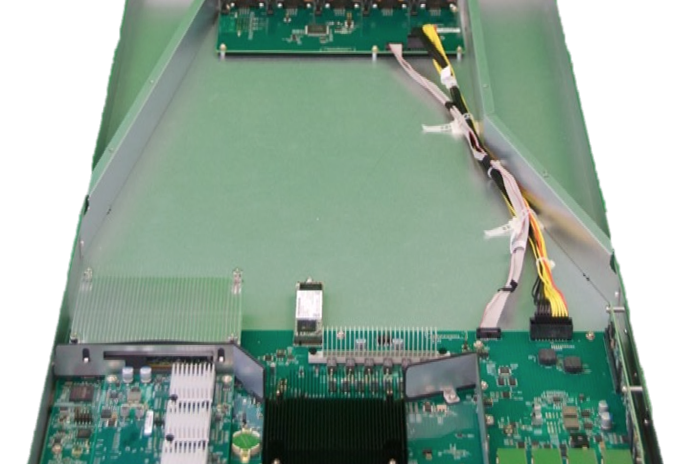

The project proposes "bricks" that hold standard server motherboards and are installed into cages within a normal rack. The bricks can be 1U or 2U high and either full or half-width. Power and network connections are both carried by a simplified system using clip-on connectors. The power supply has been removed from each server to cut costs, and DC power is distributed at 12V from a power shelf. The clip-on power system is claimed to be safer than the busbar used in Open Rack's DC distribution system.

Due diligence

"Over the last two years, we conducted due diligence while overcoming a few challenges that come with developing a new industry standard," Bachar said.

Open19 is not presented as a rival to Open Rack: LinkedIn joined the OCP this year, and in his previous job at Facebook, Bachar worked closely with OCP on its network switch program. However, the timing of this launch and of the Open19 Summit is interesting, coming as it does one week before an OCP Regional Summit in Amsterdam.

The elements

Going through the elements of the Open19 system, Bachar pointed out that the Open19 cages are completely passive and come in two sizes, 12U and 8U, each able to hold either full-width or half-width bricks.

There are four brick formats. All have 50GbE connectivity, which can be extended to 200GbE in the future, and power budgets start at 200W for an unmanaged system or 400W for a managed system.

The power cable is designed to deliver up to 19.2kW to two "leaf zones," he said: "We can take 9.6kW and create two levels of power, one for each managed system of 200W per half-width 1U server. We selected power connectors and pins that will handle 400W (35A@12V) per server, which enables a power-managed system to push each half-width server to 400W. That means 800W to double size and 1,600W for a 2RU system."
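The arithmetic behind these figures can be sketched in a few lines. This is an illustrative model only, not anything published by Open19: it assumes that a brick's budget scales with its footprint measured in half-width 1U units (which reproduces the quoted 400W, 800W and 1,600W steps), and the names `Brick`, `budget_w` and `current_a` are hypothetical. It also checks that 400W at 12V stays within the quoted 35A connector rating.

```python
"""Illustrative Open19 brick power budgets (a sketch, not an official model)."""

from dataclasses import dataclass

BUS_VOLTAGE_V = 12.0          # DC distribution voltage stated in the article
CONNECTOR_RATING_A = 35.0     # per-server connector rating quoted by Bachar
MANAGED_W_PER_UNIT = 400.0    # managed budget for one half-width 1U brick
UNMANAGED_W_PER_UNIT = 200.0  # unmanaged budget for one half-width 1U brick


@dataclass(frozen=True)
class Brick:
    name: str
    width_units: int  # 1 = half-width, 2 = full-width
    height_u: int     # 1U or 2U

    @property
    def units(self) -> int:
        # Assumption: budget scales with footprint in half-width 1U units.
        return self.width_units * self.height_u

    def budget_w(self, managed: bool) -> float:
        per_unit = MANAGED_W_PER_UNIT if managed else UNMANAGED_W_PER_UNIT
        return self.units * per_unit


# The four brick formats: 1U or 2U high, half or full width.
BRICKS = [
    Brick("half-width 1U", 1, 1),
    Brick("full-width 1U", 2, 1),
    Brick("half-width 2U", 1, 2),
    Brick("full-width 2U", 2, 2),
]


def current_a(power_w: float, voltage_v: float = BUS_VOLTAGE_V) -> float:
    """Current drawn at a given DC voltage: I = P / V."""
    return power_w / voltage_v


if __name__ == "__main__":
    for b in BRICKS:
        print(f"{b.name}: {b.budget_w(False):.0f} W unmanaged, "
              f"{b.budget_w(True):.0f} W managed")
    amps = current_a(MANAGED_W_PER_UNIT)
    print(f"400 W at 12 V draws {amps:.1f} A "
          f"(connector rated {CONNECTOR_RATING_A:.0f} A)")
```

Under this assumption, the 35A rating leaves a small margin over the roughly 33.3A that a 400W half-width brick draws at 12V.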

The current top-of-rack (TOR) switch supports up to 3.2Tbps, and its design, Bachar says, is available from multiple sources. It connects to 48 servers over cables that can handle 100Gbps, and each server will get "four bidirectional channels rated to 50G PSM4 on day one, which enables up to 200G per half-width server."
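A rough bandwidth check using only the figures above is sketched below. It assumes the 3.2Tbps figure counts one direction of port bandwidth; switch vendors sometimes quote capacity counting both directions, so treat this as back-of-envelope arithmetic. The function names are hypothetical.

```python
"""Back-of-envelope TOR bandwidth arithmetic (a sketch under stated assumptions)."""

SWITCH_CAPACITY_GBPS = 3_200   # "up to 3.2Tb"; assumed to be one-direction port bandwidth
SERVERS_PER_SWITCH = 48
CHANNELS_PER_SERVER = 4
GBPS_PER_CHANNEL = 50          # "rated to 50G PSM4"
DAY_ONE_GBPS_PER_SERVER = 50   # 50GbE per brick today


def per_server_max_gbps() -> int:
    """Bandwidth available per half-width server if all four channels are used."""
    return CHANNELS_PER_SERVER * GBPS_PER_CHANNEL


def aggregate_gbps(per_server_gbps: int) -> int:
    """Total server-facing bandwidth across all servers on one switch."""
    return SERVERS_PER_SWITCH * per_server_gbps


def headroom_gbps(per_server_gbps: int) -> int:
    """Switch capacity left after server-facing ports (negative = exceeds capacity)."""
    return SWITCH_CAPACITY_GBPS - aggregate_gbps(per_server_gbps)


if __name__ == "__main__":
    for rate in (DAY_ONE_GBPS_PER_SERVER, per_server_max_gbps()):
        print(f"{rate}G per server: {aggregate_gbps(rate)}G total, "
              f"headroom {headroom_gbps(rate)}G")
```

On these assumptions, 48 servers at 50G fit within the current switch, while running every server at the full 200G would need a larger switch.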

The power shelf holds hardware vendors' normal power modules, although each shelf is supplier-specific, holding modules from only one supplier. The shelf delivers up to 9.6kW in a redundant 3+3 configuration, or up to 15.5kW in a less redundant 5+1 configuration.
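The trade-off between the two shelf configurations can be sketched as N+M redundancy: N modules carry the load and M are spares, so the shelf tolerates M module failures. The article does not give a per-module rating, so this sketch only derives the implied output per active module from the quoted shelf capacities; the `ShelfConfig` name is hypothetical.

```python
"""N+M power shelf redundancy, using the shelf capacities quoted in the article."""

from dataclasses import dataclass


@dataclass(frozen=True)
class ShelfConfig:
    active: int       # N: modules needed to carry the rated load
    spare: int        # M: redundant modules
    rated_kw: float   # shelf capacity quoted for this configuration

    @property
    def total_modules(self) -> int:
        return self.active + self.spare

    @property
    def failures_tolerated(self) -> int:
        # The load is still carried as long as no more than M modules fail.
        return self.spare

    @property
    def implied_module_kw(self) -> float:
        # Derived figure only: quoted capacity divided by active modules.
        return self.rated_kw / self.active


REDUNDANT = ShelfConfig(active=3, spare=3, rated_kw=9.6)
HIGH_CAPACITY = ShelfConfig(active=5, spare=1, rated_kw=15.5)

if __name__ == "__main__":
    for cfg in (REDUNDANT, HIGH_CAPACITY):
        print(f"{cfg.active}+{cfg.spare}: {cfg.rated_kw} kW, "
              f"{cfg.total_modules} modules, tolerates {cfg.failures_tolerated} failures, "
              f"~{cfg.implied_module_kw:.1f} kW per active module")
```

Both configurations use six modules in total; moving to 5+1 raises capacity by putting more modules to work while reducing the number of failures the shelf can absorb from three to one.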