One day after unveiling the world's third-fastest supercomputer at Lawrence Livermore National Laboratory, the US Department of Energy (DOE) has announced that yet another super-fast machine is on its way.

The new Perlmutter system will be built for the National Energy Research Scientific Computing (NERSC) center at the Lawrence Berkeley National Laboratory, and delivered in late 2020.

Named after Berkeley Lab’s Nobel Prize-winning astrophysicist Saul Perlmutter, the system has a total contract value of $146 million and is expected to more than triple the computational power currently available at NERSC, the high-performance computing facility for the DOE Office of Science.

Shasta la vista

Perlmutter (also known as NERSC-9) will be delivered by American supercomputing company Cray. It will feature future-generation AMD Epyc CPUs and Nvidia Tesla GPUs, and will be based on Cray's forthcoming supercomputing platform, 'Shasta.'

“Continued leadership in high performance computing is vital to America’s competitiveness, prosperity, and national security,” US Secretary of Energy Rick Perry said. “This advanced new system, created in close partnership with US industry, will give American scientists a powerful new tool of discovery and innovation and will be an important milestone on the road to the coming era of exascale computing.”

The supercomputer will be a heterogeneous system, featuring Nvidia GPUs just like the DOE's other pre-exascale systems, Summit and Sierra. Unlike Perlmutter, however, those two systems pair their GPUs with IBM Power9 CPUs rather than AMD Epyc processors.

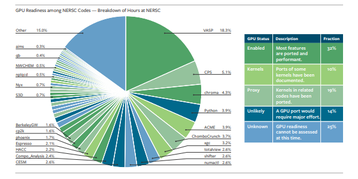

“Perlmutter will be NERSC's first large-scale GPU system, with a heterogeneous combination of CPU and GPU-accelerated nodes designed to maximize the productivity of our diverse mix of applications,” Nick Wright, chief architect of the Perlmutter system, said. “While the adoption of GPUs will benefit many codes immediately, others will require more preparation.”

Nvidia's GPUs will come with Tensor Core support, “a breakthrough technology that provides multi-precision capabilities, allowing scientists and other users to do both traditional scientific computing and emerging AI workloads,” Ian Buck, VP and GM of accelerated computing at Nvidia, told DCD. “No other acceleration platform provides such universal capabilities.”

This heterogeneous architecture is likely to be the future of supercomputing, Cray's CTO Steve Scott told DCD in an interview. "We're seeing more and more customers wanting to run workflows containing a mix of simulation and analytics and AI and we and they need systems that can handle all of these simultaneously."

The company's Shasta system, which launches in the fourth quarter of next year, was designed with this in mind, Scott said: "Its design is motivated by increasingly heterogeneous data centric workloads." With Shasta, one can mix processor architectures, including x86 variants, Arm, GPUs and FPGAs. "And I would expect within a year or two we'll have support for at least one of the emerging crop of deep learning accelerators," Scott added.

"We really do anticipate a Cambrian explosion of processor architectures because of the slowing silicon technology meaning that people are turning to architectural specialization to get performance. They're diversifying due to the fact that the underlying CMOS silicon technology isn't advancing at the same rate it once was. And so we're seeing different processors that are optimized for different sorts of workloads."

“We’ve been working closely with Cray on the Shasta architecture to ensure it has a built-in ability to take full advantage of advancements in our GPU computing platform for high-performance computing of all kinds,” Buck said.

Shasta supports several forms of cooling, from air to direct liquid to hot water, and Perlmutter will rely on direct liquid cooling. "We've bid systems that have processors of over 500 watts and the direct liquid cooling can accommodate that," Scott said. "We've increased the power and cooling headroom up to 250 kilowatts. That's a quarter of a megawatt in the single rack and we have plans to take that even higher within the first year."

For Perlmutter, NERSC is planning a major power and cooling upgrade in Berkeley Lab’s Shyh Wang Hall to prepare the facility. However, Scott noted that Shasta can also be deployed in standard 19-inch racks in conventional data centers.

The NERSC system will also feature Cray's new interconnect, Slingshot. "Notably it is Ethernet compatible and we do this for interoperability with third party storage devices and interoperability with the data centers in this increasingly networked data centric world," Scott said. "But Ethernet is not a very good high performance protocol, so we also have a very high performance protocol that runs on the same wires and we can intermix traffic. So it's really designed to straddle those two worlds."

Slingshot also has "a novel and very effective congestion control mechanism," Scott added. "What this does is it dramatically reduces the queuing latency and latency from congestion in the network, which is the primary determinant of network performance and it provides performance isolation between workloads. So different workloads don't affect each other in the way that they have done traditionally on HPC networks."

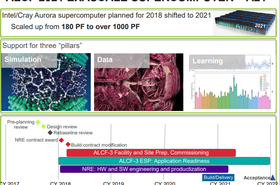

Cray has taken to calling Perlmutter "exascale-class," but Scott admits that "it wouldn't be feasible in 2020 to deploy an exascale system in itself." Perlmutter, like Summit and Sierra, is a pre-exascale system, and the three are likely to be the last of that generation before exascale machines arrive.

"The next big procurement after the NERSC-9 system is currently ongoing," Scott said. "It's CORAL 2, and they are planning on placing exascale systems in the 2021-22 timeframe at Oak Ridge National Laboratory and Lawrence Livermore National Laboratory. That procurement is ongoing so people have submitted bids and they have not yet announced winners for that system."