Ideas can strike at any time. Hot-water cooling will come closer to the mainstream at next week’s ISC event in Frankfurt, Germany, but the birth of the idea came a long time before that.

In 2006, Dr Bruno Michel was at a conference in London, watching former head of IBM UK Sir Anthony Cleaver give a speech about data centers. At the end, attendees were told that there would be no time for questions because Cleaver had to rush off to see the British prime minister.

“He had to explain to Tony Blair a report by Nicholas Stern,” said Michel, head of IBM Zürich Research Laboratory’s Advanced Thermal Packaging Group.

Economics of climate change

The Stern Review, one of the largest and most influential reports on the effects of climate change on the world economy, painted a bleak picture of a difficult future if governments and businesses did not radically reduce greenhouse gas emissions.

“We didn’t start the day thinking about this, of course,” Michel said in an interview with DCD.

“What Stern triggered in us is that energy production is the biggest problem for the climate, and the IT industry has a share in that. The other paradigm shift that happened on the same day is that analysts at this conference, for the first time, said it’s more expensive to run a data center than to buy one. And this led to hot water cooling.”

Roots of water cooling

IBM’s history with water cooling dates all the way back to 1964, and the System/360 Model 91. Over the following decades, the company and the industry as a whole experimented with hybrid air-to-water and indirect water cooling systems, but in mainstream data centers, energy-hungry conventional air conditioning systems persisted.

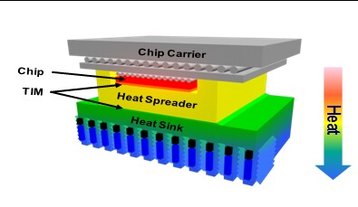

“We wanted to change that,” Michel told us. His team found that hot water cooling, also called warm water cooling, was able to keep transistors below the crucial 85°C (185°F) mark.

Using microchannel-based heatsinks and a closed loop, water is supplied at 60°C (140°F) and “comes out of the computer at 65°C (149°F). In the data center, half the energy in a hotter climate is consumed by the heat pump and the air movers, so we can save half the energy.”
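As a rough illustration of what that 5°C rise between supply and return implies, the sketch below applies the standard sensible-heat relation (heat removed = mass flow × specific heat × temperature rise) to estimate how much water has to flow per kilowatt of heat. The 60°C and 65°C figures come from Michel's description; the specific heat of water (about 4.18 kJ/kg·K) is a textbook approximation, and the 1 kW load and the function name are assumptions chosen purely for illustration, not IBM figures.

```python
# Sketch only: sensible-heat balance for a water loop, Q = m_dot * c_p * delta_T.
CP_WATER_KJ_PER_KG_K = 4.18  # textbook approximation for liquid water (assumption)


def flow_kg_per_s(heat_load_kw: float, supply_c: float, return_c: float) -> float:
    """Mass flow of water (kg/s) needed to carry away heat_load_kw."""
    delta_t = return_c - supply_c
    if delta_t <= 0:
        raise ValueError("return temperature must exceed supply temperature")
    # kW divided by (kJ/kg.K * K) gives kg/s
    return heat_load_kw / (CP_WATER_KJ_PER_KG_K * delta_t)


# 60 C in and 65 C out are the article's figures; the 1 kW load is hypothetical.
flow = flow_kg_per_s(1.0, 60.0, 65.0)
# Treating 1 kg of water as roughly 1 litre for a back-of-envelope figure.
print(f"{flow:.3f} kg/s per kW, roughly {flow * 60:.1f} litres per minute")
```

Under these assumptions, each kilowatt of heat needs only a few litres of water per minute, which gives a feel for why a narrow temperature rise is workable in a closed loop.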

Unlike most water cooling methods, the water is brought directly to the chip, and does not need to be cooled. This saves energy costs but requires more expensive piping, and can limit one’s flexibility in server design.

By 2010, IBM had created a prototype product called Aquasar, installed at the Swiss Federal Institute of Technology in Zürich and designed in collaboration with the university and Professor Dimos Poulikakos.

“This [idea] was so convincing that it was then rebuilt as a large data center in Munich - the SuperMUC, in 2012,” Michel said.

“So five and a half years after Stern - exactly on the day - we had the biggest data center in Europe running with hot water cooling.”

Europe’s fastest supercomputer

SuperMUC at the Leibniz Supercomputing Centre (LRZ) was built with iDataPlex Direct Water Cooled dx360 M4 servers, comprising more than 150,000 cores to provide a peak performance of up to three petaflops, making it Europe’s fastest supercomputer at the time.

“It really is an impressive setting,” Michel said. “When we first came up with hot water cooling they said it will never work. They said you’re going to flood the data center. Your transistors will be less efficient, your failure rate will be at least twice as high… We never flooded the data center. We had no single board leaking out of the 20,000 because we tested it with compressed nitrogen gas.

“And it was double the efficiency overall. Plus, the number of boards that failed was half of the number in an air-cooled data center because failure is temperature change driven: half the failures in a data center are due to temperature change and since we cool it at 60°C, we don’t have temperature change.”

The system was the first of its kind, made possible because the German government had mandated a long-term total cost of ownership bid, which meant that energy and water costs were taken into account. As a closed system running with the same water for five years, the water cost was almost zero after the initial installation.

The concept has yet to find mass market appeal, but “all the systems in the top ten of the Top500 list of the world’s fastest supercomputers are using some form of hot water cooling,” Michel said.

Spreading the word

There are signs that the technology may finally be ready to spread further: “We did see a big change in interest in the last 18 months,” said Martin Hiegl, who was IBM’s HPC manager in central Europe at the time of the first SuperMUC.

Hiegl, along with the SuperMUC contract and most of the related technology, moved to Lenovo in 2015, after the company acquired IBM’s System x division for $2.3 billion.

“There are more and more industrial clients that want to talk about this,” Hiegl, now business unit director for HPC and AI at Lenovo, told DCD.

“When we started it in 2010, it was all about green IT and energy savings. Now, over time, what we found is that things like overclocking or giving the processor more power to use can help to balance the application workload as well.”

With hot water cooling, Lenovo has been able to push the envelope on modern CPUs’ thermal design power (TDP) - the maximum amount of heat generated by a chip that the cooling system is designed to dissipate.

“At LRZ, the CPU will support 240W - it will be the only Intel CPU on the market today running at 240W,” Hiegl said. “In our lab we showed that we can run up to 300W today, and for our next generation we’re looking at 400-450W.”

He added: “Going forward into 2020-2022, we think that to get the best performance in a data center it will be necessary to either go wider or go higher, so you lose density but can push air through. Or you go to a liquid cooling solution so that you can use the best performing processors.”

Hot water cooling has also found more success in Japan “as there’s a big push for those technologies because of all the power limitations they have there,” as well as places like Saudi Arabia where the high ambient temperatures have made hot water a more attractive proposition.

Meanwhile, under Lenovo, the SuperMUC supercomputer is undergoing a massive upgrade. The next-generation SuperMUC-NG, set to launch later this year, will deliver 26.7 petaflops of compute capacity, powered by nearly 6,500 ThinkSystem SD650 nodes - now set to be commercialized for the mainstream data center as Neptune.

“The first SuperMUC is based on a completely different node server design,” Hiegl said. “We decided against using something that’s completely unique and niche; our server also supports air cooling, and we’re designing it from the start so that it can support a water loop - we are now designing systems for 2020, and they are planned to be optimal for both air and water.”

Memory cooling

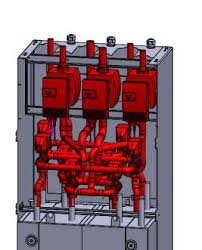

As power densities continued to rise, Lenovo encountered another cooling challenge - memory. “DIMMs didn’t generate that much heat back in 2012, so it was sufficient to have passive heat pipes going back and forth to the actual water loop,” Hiegl said.

“With the current generation 128 Gigabyte DIMMs, you have way more power and heat coming off the memory, so we now have water running between them allowing us to have a 90 percent efficiency in taking heat away.”

The company has also explored other ways of maximizing cooling efficiency: “We take hot water coming out of it that’s 55-56°C (131-133°F), and we put it into an adsorption chiller,” which uses a zeolite material to “generate coolness out of that heat, with which we cool the whole storage, the power supplies, networking and all the components that we can’t use direct water cooling on,” Hiegl said.

The adsorption chiller Lenovo uses is supplied by Fahrenheit, a German startup previously known as SolTech. “We’re the only people in the data center space working with them so far,” Hiegl added.

“We do think that this will be used more often in the future, though. In our opinion, LRZ is an early adopter, finding new technologies, or even just inventing them. The water cooling we did back in 2010 - no one else did that. Now, after a few years, others - be it SGI, HPE, Dell - they have adopted different kinds of water cooling technologies.”

Michel, meanwhile, remains convinced that the core combination of microchannels and direct-to-chip fluid can lead to huge advances. “We did another project for DARPA, where we etch channels into the backside of the processor and then have fluid flowing through these microchannels, reducing the thermal resistance by another factor of four to what we had in the SuperMUC. That means the gradient can then become just a few degrees.”

3D cooling to the chip

The Defense Advanced Research Projects Agency’s Intrachip/Interchip Enhanced Cooling (ICECool) project was awarded to IBM in 2013. IBM and the Georgia Institute of Technology hope to develop a way of cooling high-density 3D chip stacks, with actual products expected to appear in commercial and military applications as soon as this year.

“It is not single phase, it’s two phase cooling, using a benign refrigerant that boils at 30-50°C (86-122°F) and the advantage is you can use the latent heat,” Michel said.

“You have to handle large volumes of steam, and that’s a challenge. But with this, the maximum power we can remove is about one kilowatt per square centimeter.

“It’s really impressive: the power densities we can achieve when we do interlayer cooled chip stacks - we can remove about 1-3 kilowatts per cubic centimeter. So, for example, that’s a nuclear power plant in one cubic meter.”
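To see where the power plant comparison comes from, the unit conversion can be sketched as below. The 1-3 kilowatts per cubic centimeter range is Michel's; the function name is hypothetical, and the comparison point (a large nuclear reactor producing on the order of one gigawatt) is a general rule of thumb rather than a figure from the interview.

```python
# Sketch only: convert a volumetric power density from kW/cm^3 to GW/m^3.
CM3_PER_M3 = 100 ** 3  # 1,000,000 cubic centimetres in one cubic metre


def kw_per_cm3_to_gw_per_m3(kw_per_cm3: float) -> float:
    """Scale a per-cubic-centimetre density up to a full cubic metre."""
    watts_per_m3 = kw_per_cm3 * 1_000 * CM3_PER_M3
    return watts_per_m3 / 1e9


# 1 and 3 kW/cm^3 are the ends of the range quoted in the article.
for density in (1.0, 3.0):
    gw = kw_per_cm3_to_gw_per_m3(density)
    print(f"{density} kW/cm^3 corresponds to {gw:.0f} GW per cubic metre")
```

The low end of the quoted range already works out to about a gigawatt per cubic meter, which is the scale the comparison relies on.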

Shrinking supercomputers

In a separate project, Michel hopes to be able to radically shrink the size of supercomputers: “We can increase the efficiency of a computer about 5,000 times using the same seamless transistors that we build now, because the vast majority of energy in a computer is not used for computation but for moving data around in a computer.

“Any HPC data center, including SuperMUC, is a pile of PCs - currently everybody uses the PC design,” Michel said. “The PC design when it was first done was a well-balanced system, it consumed about half the energy for computation and half for moving data because it had single clock access, mainboards were very small, and things like that.

“Now, since then, processors have become 10,000 times better. But moving data from the main memory to the processor and to other components on the mainboard has not changed as much. It just became about 100 times better.”

This has meant that “you have to use cache because your main memory is too far away,” Michel said. “You use command coding pipelines, you use speculative execution, and all of that requires this 99 percent of transistors.

“So we’re using the majority of transistors in a current system to compensate for distance. And if you’re miniaturizing a system, we don’t have to do that. We can go back to the original design and then we need to run fewer transistors and we can get to the factor of about 10,000 in efficiency.”

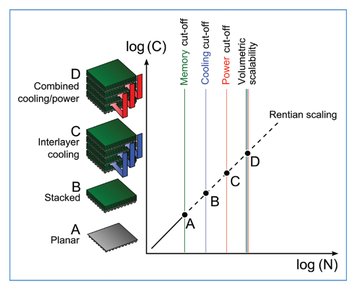

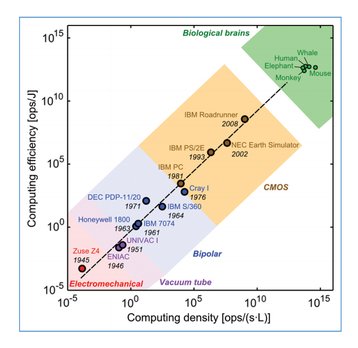

In a research paper on the concept of liquid cooled, ultra-dense supercomputers, ‘Towards five-dimensional scaling: How density improves efficiency in future computers,’ Michel et al. note that, historically, energy efficiency of computation has doubled every 18-24 months, while performance has increased 1,000-fold every 11 years, leading to a net 10-fold energy consumption increase “which is clearly not a sustainable future.”
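The arithmetic behind that net increase can be checked with a short sketch. The doubling periods, the 1,000-fold performance gain and the 11-year window are the figures quoted from the paper; the function names are hypothetical, and the model (net energy growth equals performance gain divided by efficiency gain) is a simplifying assumption rather than the paper's own method.

```python
# Sketch only: net energy growth = performance gain / efficiency gain.
def efficiency_gain(years: float, doubling_months: float) -> float:
    """Efficiency multiple after `years` if it doubles every `doubling_months`."""
    return 2 ** (years * 12 / doubling_months)


def net_energy_growth(perf_gain: float, years: float, doubling_months: float) -> float:
    """How much more energy is used once efficiency gains are netted out."""
    return perf_gain / efficiency_gain(years, doubling_months)


# 1,000-fold over 11 years with 18-24 month doubling are the quoted figures.
for months in (18, 24):
    growth = net_energy_growth(1_000, 11, months)
    print(f"doubling every {months} months: energy use grows about {growth:.0f}-fold")
```

Under these assumptions the two doubling periods give growth of roughly 6-fold and 22-fold, a range that brackets the paper's 10-fold figure.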

The team added that, for their dense system, “three-dimensional stacking processes need to be well industrialized.”

Long term goals

However, Michel admitted to DCD that the road ahead will be difficult because few are willing to take on the risks and long-term costs associated with building and deploying radically new technologies that could upend existing norms.

“All engineers that build our current computers have been educated during Moore’s Law,” Michel said. “They have successfully designed 20 revisions or improvements of data centers using their design rules. Why should number 21 be different? It is like trying to stop a steamroller by hand.”

The other problem is that, in the short term, iteration on existing designs will lead to better results: “You have to go down. You have to build inferior systems [with different approaches] in order to move forward.”

This is vital, he said: “The best thing is to rewind the former development and redo it under the right new paradigm.”

Alas, Michel does not see this happening at a large scale anytime soon. While his research continues, he admits that “companies like ours will not drive this change because there is no urgent need to improve data centers.”

The Stern Review led to little change, its calls for a new approach ignored. “Then we had the Paris Agreement, but again nothing happened,” he said.

“So I don’t know what needs to happen until people are really reminded that we need to take action with other technologies that are already available.”

This article appeared in the cooling supplement of the June/July issue of DCD magazine.