Businesses can’t afford to hold assets that don’t return value to the business. While there’s no debate about whether the capabilities offered by enterprise data centers are needed, there is far greater ambiguity about how and where those capabilities are delivered. Hence, we see an increasingly complex mix of owner-operated, colocation and cloud in modern enterprise infrastructure. But this poses questions about whether the traditional enterprise data center is still a valid asset to keep.

Is the data center becoming a toxic asset?

Technology leaders are increasingly asked why they maintain their own data center. Their answer is likely to involve the importance of retaining control over critical workloads and proprietary data. Perhaps the complexity of their legacy systems adds to the apparent intractability of the traditional owner-operator model. While it may not always seem cost-effective when you look at the balance sheet in the abstract, in reality these decisions are the norm across the industry.

Recently, the acceleration of digital transformation seen during the pandemic has piled the pressure on these facilities – and added to the question of how organizations are going to deal with increased use of applications, generation of data, and demand for digital services. At a time when many data centers seem to be bulging at the seams, the ongoing cost (and environmental impact) of power and cooling often isn’t improving to any significant degree.

Floor space is also getting squeezed, requiring investment to fund expansion. And IT and facilities teams are asked to deliver more, but without consistent lines of reporting, shared systems or aligned budgets they struggle to do this. Yet the option of migrating everything to colocation or pushing deeper into the cloud is not as straightforward as it sounds and, in any case, simply isn’t viable for everyone.

But this is not a cause for any doom and gloom. Enterprise data centers still have a lot to give.

Defining value from the data center

Part of the problem in resolving these issues is the uncertainty associated with the ‘obvious’ solutions. Is cooling proving uneconomical? The risk of creating thermal stress may deter organisations from trying to optimise their cooling strategy. Hoping to squeeze out some additional capacity to defer a costly facility redevelopment or even a new build? Most data centers today still operate at a conservative level to avoid outages – with redundancy protecting redundancy, for fear of unplanned downtime.

No one is fundamentally at fault for this situation because the balance of operational certainty and cost management will typically go in favour of the former. The financial loss to a business of significant downtime hardly needs reiterating. So what can you do?

For many firms the answer lies in improving their ability to make sensible decisions about how to run their facility closer to its optimal level. If you can remove the fear, uncertainty and doubt (the FUD factor) about key issues such as thermal design and high-density deployments, you can almost certainly improve the value the data center returns to the business.

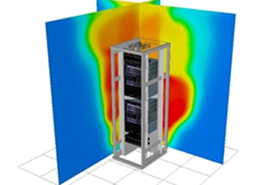

A proven route to these sorts of outcomes lies in the use of simulation to optimise your data center and remove the FUD factor. Because most facilities rely on a combination of experience, assumed rules and monitoring data, current performance is well understood. Where simulation steps in is in helping you forecast what comes next.

Uncovering value with simulation

From the automotive industry to financial services, simulation is trusted on the frontline of technical performance. In these instances, value is increased when performance is optimised. When we look at the data center, the same is true. In fact, a data center lends itself quite conveniently to computational fluid dynamics (CFD) as a way to understand what’s really happening when it comes to all those tricky power and cooling conundrums.

When you have full visibility of the current performance of your components, power and cooling – paired with the ability to accurately predict the outcome of any change you make – it becomes far easier to unearth hidden value. We see this as being a ‘digital twin’ for your data center. The digital twin can show the risks in adjusting thermal profiles. It can simulate the introduction of a high-density configuration. And it can set your mind at rest if you’re having to redesign how you use your floor space.

Together, these capabilities enable you to maximise ROI from your facility, and potentially defer the excruciating cost of expanding (or even adapting) your footprint.

An asset to be proud of

When you can integrate tools across facilities and IT teams, and weigh up the risks associated with an ROI-led strategy in your data center, the potential value of the asset changes. You bring clarity to all key stakeholders so decisions are made with eyes very much wide open. Risk and reward find a better balance, which is something too many data center professionals have struggled with to date.

As budget questions become more probing, and performance and resilience demands increase, this type of visibility will become essential. But the enterprise data center still has life in it. More than that, as an asset it offers real opportunity and value.
