Throughout history, automation has been regarded by many with fear: fear of lost jobs, of lost control, and of something critical being overlooked in the process.

While many of those fears – at least in the long run – have proven to be unfounded, the idea of fully automating the data center, while appealing, has also turned out to be a real challenge.

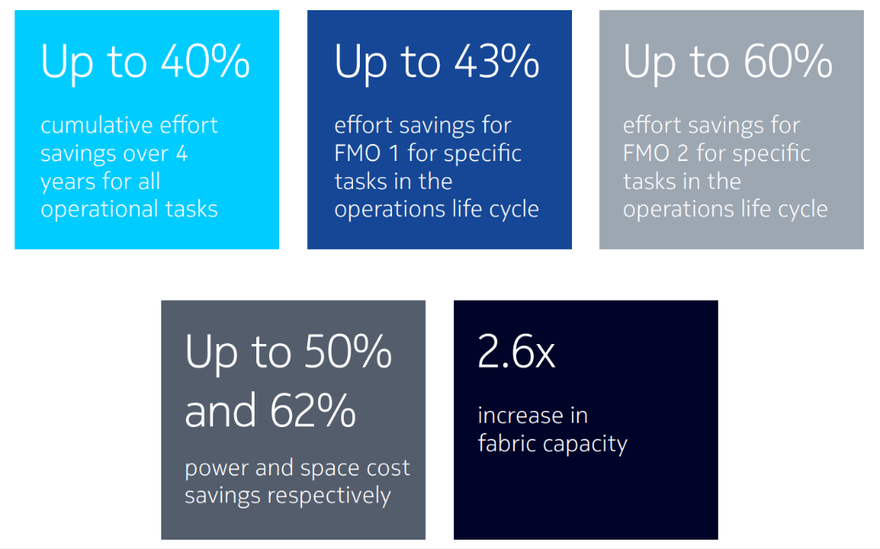

However, Nokia is looking to change that with the introduction of its SR Linux-based Nokia Data Center Fabric solution. This set of products brings a level of automation to data center network management that, according to a business case analysis by Bell Labs Consulting, can cut operational costs by up to 40 percent over four years across all tasks associated with the data center fabric lifecycle.

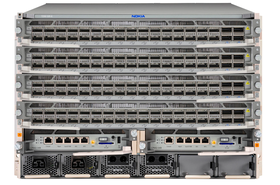

“The Nokia Data Center Fabric solution is a set of products intended to meet the evolving needs of data center networks; indeed, we think it’s a pretty complete solution. It includes switching hardware, as well as an open network operating system and NetOps automation toolkit. The solution fits a variety of different physical leaf-spine architectures in terms of the density of racks, 10GE, 25GE, and 100GE access for servers, and 100GE and 400GE uplinks for spines and so on,” says Jon Lundstrom, director of webscale business development at Nokia.

The Bell Labs report examines Day 0, Day 1 and Day 2+ data center operations scenarios to determine the potential savings available, where Day 0 is the design and build phase; Day 1 is, as you would expect, the initial deployment phase; and Day 2+ is the ongoing operational phase.

Yet Day 0 comes before the data center is even built, so how does the Nokia Data Center Fabric contribute at that stage?

“Day 0 is the design phase, before you’ve even deployed your network. You’re doing an evaluation of the features you’re planning to have and their configuration. You’re building a high-level fabric design, and you’re doing integration work. All of that can be done using the Fabric Services System automation toolkit,” says Lundstrom.

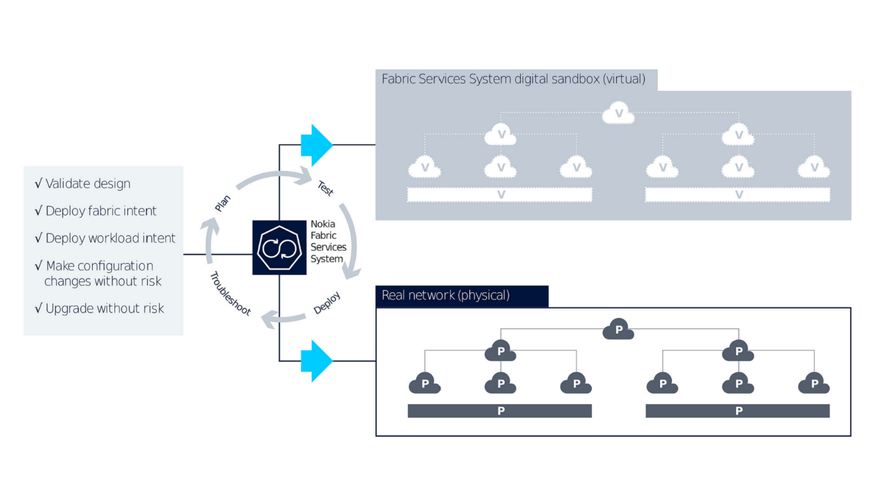

“We have ‘intent templates’ for different types of fabric you might deploy and their capabilities. In addition, using a unique feature called the digital sandbox, you can replicate the anticipated production environment and execute testing on the network.”

In other words, operators can construct a ‘digital twin’, or a series of digital twins, of the physical network to determine the best network configuration before the operations team deploys a single piece of hardware. After deployment, automatically generated digital twins can be used to trial new features and to run troubleshooting and lifecycle tests in a safe virtual environment.

In addition to the operational cost savings in the various future modes of operation (FMO), the Bell Labs research reached several other striking conclusions, including savings of up to half in data center power costs and of 62 percent in space, made possible by the newer-generation hardware optimizations that the Nokia Data Center Fabric solution enables.

Python power

At the heart of the solution is SR Linux, a network operating system developed by Nokia to support its drive into the data center. Based on the CentOS distribution of Linux, it builds on Nokia’s Service Router (SR) IP network operating environment, used in more than 1,200 major service provider, enterprise, and webscale networks around the world.

“SR Linux is based on a model-driven architecture where everything is Yang-modelled. Yang is a data modelling language specifically designed to define configuration and operational state data on network elements, which is then shared between systems over networks by management protocols,” says Lundstrom.
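Because every configuration and state element in a model-driven system has a place in the Yang schema, each one can be addressed by a path, in the style of gNMI paths such as `/interface[name=ethernet-1/1]/admin-state`. The Python sketch below walks such a path through a nested, Yang-shaped dictionary. It is a minimal illustration under stated assumptions: the `CONFIG` tree, its field names and the `resolve` helper are hypothetical, loosely modelled on SR Linux naming rather than taken from its actual schema or APIs.

```python
import re

# Hypothetical Yang-shaped data, loosely modelled on SR Linux's interface and
# system trees. This is NOT the real SR Linux schema.
CONFIG = {
    "interface": [
        {"name": "ethernet-1/1", "admin-state": "enable", "description": "to-spine1"},
        {"name": "ethernet-1/2", "admin-state": "disable"},
    ],
    "system": {"name": {"host-name": "leaf1"}},
}

# Matches one [key=value] selector attached to a list element.
_KEY_SELECTOR = re.compile(r"\[([^=\]]+)=([^\]]*)\]")


def split_path(path: str) -> list[str]:
    """Split '/a/b[k=v]/c' into elements, ignoring '/' inside [...] selectors."""
    elems, buf, depth = [], "", 0
    for ch in path.strip("/"):
        if ch == "[":
            depth += 1
        elif ch == "]":
            depth -= 1
        if ch == "/" and depth == 0:
            elems.append(buf)
            buf = ""
        else:
            buf += ch
    if buf:
        elems.append(buf)
    return elems


def resolve(tree: dict, path: str):
    """Walk a path such as /interface[name=ethernet-1/1]/admin-state to a node or leaf."""
    node = tree
    for elem in split_path(path):
        name = elem.partition("[")[0]
        keys = dict(_KEY_SELECTOR.findall(elem))
        node = node[name]  # KeyError if the container or list does not exist
        if keys:
            # A keyed list: select the single entry whose key leaves match.
            matches = [e for e in node if all(str(e.get(k)) == v for k, v in keys.items())]
            if len(matches) != 1:
                raise KeyError(f"no unique list entry for {elem!r}")
            node = matches[0]
    return node


print(resolve(CONFIG, "/interface[name=ethernet-1/1]/admin-state"))  # enable
print(resolve(CONFIG, "/system/name/host-name"))  # leaf1
```

Note that the splitter has to track brackets, because interface names such as `ethernet-1/1` themselves contain a `/`.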

“In conjunction with that, we wanted to leverage the capabilities that we've built over many years into our traditional network operating system, SR OS. So, we ported a variety of different protocols, and run them as separate instances and applications inside SR Linux. That really makes up our SR Linux routing stack and gives our customers the comfort that there’s some real battle-hardened code built into SR Linux,” says Lundstrom.

Linux, of course, is a de facto standard operating system, which ought to ease the learning curve for data center staff, but at the same time SR Linux is architected specifically for data center network use.

“Every command is actually built in Python. We have a full set of CLI commands to access all branches and leaves of SR Linux Yang models, but operators can also build their own CLI commands if they want. If they are used to viewing information from existing equipment in a particular way, they can even replicate their way of viewing the same type of information from our device. It makes it much easier for them to come up to speed with our solution.”
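As a rough illustration of such a custom view, the hypothetical function below renders interface data in a compact, fixed-width "brief" layout of the kind an operator might be used to from other equipment. It is plain Python and deliberately does not use SR Linux's own CLI plugin framework, whose API is not described here; the function name, column layout and field names are all assumptions.

```python
import re


def _natural_key(name: str):
    # Compare digit runs as integers so "ethernet-1/10" sorts after "ethernet-1/2".
    return [int(tok) if tok.isdigit() else tok for tok in re.split(r"(\d+)", name)]


def show_interface_brief(interfaces: list[dict]) -> str:
    """Render interface state in a hypothetical operator-preferred 'brief' layout."""
    header = f"{'Port':<14}{'Admin':<8}Description"
    lines = [header, "-" * len(header)]
    for intf in sorted(interfaces, key=lambda i: _natural_key(i["name"])):
        # Map the Yang-style admin-state value onto the operator's familiar wording.
        admin = "up" if intf.get("admin-state") == "enable" else "down"
        lines.append(f"{intf['name']:<14}{admin:<8}{intf.get('description', '')}".rstrip())
    return "\n".join(lines)


print(show_interface_brief([
    {"name": "ethernet-1/10", "admin-state": "disable"},
    {"name": "ethernet-1/2", "admin-state": "enable", "description": "to-spine2"},
]))
```

Sorting on a natural key keeps `ethernet-1/10` after `ethernet-1/2`, which a plain string sort would not.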

The significance of basing the network operating system on Linux, Yang, and tools like Python is the flexibility they provide to run both in-house developed and third-party applications, ultimately enabling more granular automation of the data center network via the wider Nokia Data Center Fabric solution.

SR Linux uniquely offers native support for third-party applications, and supports an open community of network app development. Examples include a container reachability monitoring app, a legacy protocol interop app, and a node health summarization app.

The flexibility of the SR Linux Network Developer’s Kit (NDK) enables apps to be written in multiple languages and, provided they have a Yang model, they can be loaded into SR Linux, leverage the common northbound APIs and app lifecycle tools, and communicate with other apps internally and externally.

“The final piece of the equation is the Fabric Services System, or the automation toolkit, that sits on top of SR Linux. It's more than an element management system (EMS) because there is a real need for greater automation in the data center,” says Lundstrom.

He continues: “When we talk to customers at all phases in the fabric lifecycle, whether it's the design phase, the deployment, or ongoing operations phase, when we built the automation toolkit and orchestration that sits above the network elements, we made sure to encompass all the job functions and tasks across the entire lifecycle of the fabric.”

Automatic for the data centers

The Nokia Data Center Fabric solution is very much focused on the network side of operations, stresses Lundstrom.

“That can be split into ‘underlay’ versus the ‘overlays’ that are deployed within data centers. The network element and the SR Linux operating system support BGP, OSPF and IS-IS as potential underlay protocols, and the basic routing of IPv4 and IPv6. But a lot of customers want to leverage overlays, like EVPN, whether for layer 2 Ethernet or layer 3 IP services. Building on our leadership in the EVPN community, these have become a cornerstone of the SR Linux feature set, as well as in terms of orchestration,” he says.

He continues: “So when we use the Fabric Services System to design and deploy a data center network environment, operators will leverage intent templates that actually build both the underlay connectivity design, map out how everything needs to be cabled together, as well as define the configuration of network protocols for each node.
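To make the idea of an intent template more concrete, the sketch below expands a small high-level intent (number of spines and leaves, address pools, ASNs) into per-node underlay settings and a leaf-to-spine cabling plan. It is a hypothetical illustration, not the Fabric Services System's template format. It assumes an eBGP underlay with one shared spine ASN and a unique ASN per leaf (a common convention described in RFC 7938), /31 point-to-point links, and leaf uplinks starting at a hypothetical port 49; OSPF and IS-IS, also mentioned above, would be equally valid underlay choices.

```python
from dataclasses import dataclass
from ipaddress import IPv4Network


@dataclass(frozen=True)
class FabricIntent:
    """Hypothetical high-level intent for a two-tier leaf-spine fabric."""
    spines: int
    leaves: int
    spine_asn: int = 65000          # all spines share one private ASN
    leaf_asn_base: int = 65100      # leaf N is assigned leaf_asn_base + N
    leaf_uplink_base: int = 48      # leaf uplink S uses port leaf_uplink_base + S
    loopback_pool: str = "10.0.0.0/24"
    p2p_pool: str = "10.1.0.0/24"   # carved into /31 point-to-point links


def expand(intent: FabricIntent) -> tuple[dict, list]:
    """Expand the intent into per-node underlay settings and a cabling plan."""
    # next() on either pool raises StopIteration if it is too small for the fabric.
    loopbacks = iter(IPv4Network(intent.loopback_pool).hosts())
    p2p_links = iter(IPv4Network(intent.p2p_pool).subnets(new_prefix=31))

    nodes = {}
    for s in range(1, intent.spines + 1):
        nodes[f"spine{s}"] = {"asn": intent.spine_asn, "loopback": str(next(loopbacks))}
    for n in range(1, intent.leaves + 1):
        nodes[f"leaf{n}"] = {"asn": intent.leaf_asn_base + n,
                             "loopback": str(next(loopbacks))}

    # Every leaf connects to every spine: leaf N uplink S <-> spine S port N.
    cabling = []
    for n in range(1, intent.leaves + 1):
        for s in range(1, intent.spines + 1):
            link = next(p2p_links)  # a /31: link[0] for the leaf, link[1] for the spine
            cabling.append({
                "a": (f"leaf{n}", f"ethernet-1/{intent.leaf_uplink_base + s}", f"{link[0]}/31"),
                "b": (f"spine{s}", f"ethernet-1/{n}", f"{link[1]}/31"),
            })
    return nodes, cabling


nodes, cabling = expand(FabricIntent(spines=2, leaves=4))
print(len(cabling))    # 8
print(nodes["leaf3"])  # {'asn': 65103, 'loopback': '10.0.0.5'}
```

Because the whole plan is derived from a few intent parameters, it can be regenerated when the fabric changes rather than edited node by node.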

“But the services for each type of workload need to be laid on top of that via EVPN to connect the different workloads running on the servers. While we’re primarily focused on the networking side, the integration capabilities of SR Linux provide the flexibility to interact with orchestration solutions for containers or virtual machines running on data center servers, for example.”

While the digital twin technology built into the Nokia Data Center Fabric solution has a role to play in the design stage, it comes into its own in operations.

“Once you've deployed your data center network you can auto-generate a digital twin that you can play with in the digital sandbox. It can help you understand your environment better. You can test-validate exactly the behavior that you’re expecting to see in the production environment and, because we use the exact same code inside that virtual environment as is running on the physical switches, it's actually an exact replica in every aspect except throughput scale,” says Lundstrom.
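In practice, test-validating expected behavior comes down to comparing intended state with the state actually observed. The minimal sketch below shows that comparison for BGP session states read back from a digital twin. How the state is collected from the sandbox is out of scope here, and the paths, state values and `validate` helper are hypothetical.

```python
def validate(expected: dict[str, str], observed: dict[str, str]) -> list[str]:
    """Compare intended state with state read back from a (digital twin) network.

    Returns one human-readable line per mismatch; an empty list means the
    observed behavior matches the intent.
    """
    problems = []
    for path in sorted(expected):
        want, got = expected[path], observed.get(path)  # None if the path is missing
        if got != want:
            problems.append(f"{path}: expected {want!r}, got {got!r}")
    return problems


# Hypothetical intent and twin read-back for one leaf's BGP sessions.
expected = {
    "leaf1/bgp/neighbor[10.1.0.1]/session-state": "established",
    "leaf1/bgp/neighbor[10.1.0.3]/session-state": "established",
}
observed_from_twin = {
    "leaf1/bgp/neighbor[10.1.0.1]/session-state": "established",
    "leaf1/bgp/neighbor[10.1.0.3]/session-state": "active",
}

for line in validate(expected, observed_from_twin):
    print(line)
```

The same check could be run against the twin before a change and against production afterwards, since the article notes the twin runs the same code as the physical switches.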

Instead of running tests, trialling patches and conducting other experiments on physical hardware in a lab, hardware that is costly and must be procured amid today’s supply-chain constraints, the digital sandbox enables the same activities to be carried out virtually, saving time, money and aggravation.

“It saves tonnes of time in lab setups and the constant churn as you test different things. You can do this all now in a virtual environment, running live traffic at low scale, of course, but also the live control plane to really understand what the behavior is going to be, depending on the scenario you’re testing.

“It’s also a great training tool, but it's primarily a validation tool that can be used at multiple stages in the fabric lifecycle.”

Making the business case

Of course, it’s easy to make bold claims for new technology, but evaluating their impact on something as large and complex as a data center is another matter entirely.

That’s why Nokia has also devised a new tool, the Business Case Analysis (BCA), to enable data center operators to evaluate how much more efficiently their facilities could run with added automation.

Nokia’s BCA tool examines Day 0, Day 1 and Day 2+ operations, a tacit acknowledgement that new data center management software and systems make most sense, at least initially, in new builds. Aimed primarily at engineering, operations and senior management staff, the tool highlights the potential savings in time and effort that implementing the Nokia Data Center Fabric solution can achieve across all three phases of the operational lifecycle.

The idea of the tool, says Lundstrom, is to cut through the sales nonsense that’s typically deployed when vendors pitch for data center business. “We understand that customers have heard the pitch, not just from us, but other vendors – the cool features, where they’re making their investments – but the big question is how it’s going to affect them on a day-to-day basis.”

From a hardware perspective, the data center fabric is pretty much a commodity: most of the products are ultimately based on a Broadcom chipset, and there’s not a great amount of differentiation. “But the network operating system and the orchestration tools can make a really big impact on the operational expenditure side of the ledger,” says Lundstrom.

When kitting out a new data center, there’s not much that can be shaved off in terms of capital expenditure, but operational expenditure typically accounts for 85 percent or more of the overall cost of running the data center environment, so understanding how a new solution could affect operational costs is what really counts.

“We built the Business Case Analysis tool, which examines costs in all three phases, and breaks down the different types of job functions and tasks that need to be done, whether by the engineering team, security, operations, or deployment teams,” he says.

“The idea is to compare the Nokia-enhanced data center fabric against a pretty common existing environment. So, this isn't about a rip-and-replace on an existing site; it’s about how you’ll run your next-generation data centers and whether you’ll implement and run them with your existing toolkit, with all its limitations, or whether you can save operations time and effort by running a Nokia Data Center Fabric solution.”

The BCA tool offers two scenarios: Option one, with SR Linux running on Nokia networking hardware with an existing limited orchestration solution; or option two, which is SR Linux running on Nokia hardware and using the Nokia Fabric Services System. “The BCA tool tells you what the operational cost model will be and where the potential effort savings in person-hours can be found under those different scenarios,” says Lundstrom.

The methodology of the tool, he adds, is based on a variety of job functions and the savings that can be made per job function. “We looked at it holistically. We wanted to show the complete picture, including job tasks that are not directly affected by the use of our technology. But we think that gives a clearer, more rounded picture as to the overall effort savings that data center operations teams could achieve.”
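The per-job-function approach, and the effect of counting unaffected tasks, can be illustrated with simple arithmetic. In the sketch below, each task carries a baseline number of person-hours and the fraction of them that automation saves; tasks outside the solution's scope save nothing but still count toward the total. The task names and numbers are invented for illustration and are not figures from the BCA tool or the Bell Labs analysis.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Task:
    job_function: str
    baseline_hours: float    # person-hours with the existing toolkit (hypothetical)
    savings_fraction: float  # share of those hours saved; 0.0 if unaffected


def effort_savings(tasks: list[Task], include_unaffected: bool = True) -> tuple[float, float]:
    """Return (person-hours saved, saved hours as a fraction of baseline hours)."""
    pool = tasks if include_unaffected else [t for t in tasks if t.savings_fraction > 0]
    baseline = sum(t.baseline_hours for t in pool)
    if baseline == 0:
        return 0.0, 0.0  # nothing to compare against
    saved = sum(t.baseline_hours * t.savings_fraction for t in pool)
    return saved, saved / baseline


# Illustrative inputs only; these are not Bell Labs or Nokia figures.
tasks = [
    Task("fabric design", 400, 0.50),
    Task("deployment", 600, 0.25),
    Task("security review", 200, 0.00),  # not directly affected
]

print(effort_savings(tasks))                            # 350 of 1,200 hours, about 29%
print(effort_savings(tasks, include_unaffected=False))  # 350 of 1,000 hours, 35%
```

With these made-up inputs, the holistic view reports about 29 percent savings while counting only affected tasks would report 35 percent, which is why including unaffected work gives the more conservative, rounded picture Lundstrom describes.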

Of course, as Lundstrom admits, greater automation in the data center will affect certain roles more than others, as automation always has throughout history. But that is the price of progress, which is always more manageable when sensibly embraced rather than resisted.

The benefits, though, are significant: lower costs, even more reliable data centers, greater efficiency, and staff freed up to perform more valuable functions. And these benefits will compound over time, too.

After all, while manually operated railways, for example, employed many more people than today’s highly automated systems, accidents were more frequent and pay was much lower. In the end, we all stand to benefit from well-conceived and well-implemented automation, and as data centers grow ever larger, systems such as Nokia’s Data Center Fabric solution can’t come soon enough.

For more information about the Nokia Data Center Fabric please visit the Nokia solution webpage.
