There has never been a more challenging time to work in network operations, especially in the data center. With so much that people take for granted now reliant on the data center, the network connections are, in some respects, the weak links.

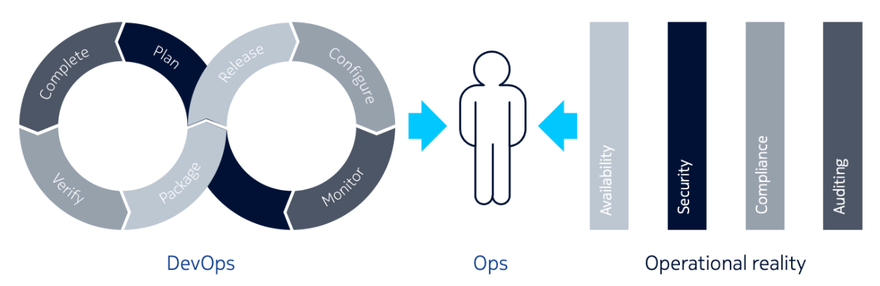

Moreover, the emergence of DevOps over the past decade has only increased the pressure. Organizations are running more applications and services in the cloud, while data center operators are expected to provide the same kind of flexibility on the network side that DevOps has given their customers in development and IT operations.

And those kinds of power shifts in the enterprise are mirrored in the data center, too.

“NetOps has taken the same principles as DevOps. That means a lot of automation, toolkits that enable operations to be implemented as code, and some changes in processes and culture in order to provide more flexibility in how networks are deployed,” says Jonathon Lundstrom, North American business development leader for Tier 2 Webscale, at Nokia.

And, adds Lundstrom, Nokia customers in the data center sector have led the way. “A lot of our customers were pushing effectively on their network operations teams, forcing them to change, in order to support the sheer scale and flexibility being demanded of them.

“So to sum up those requirements and call it NetOps, it's about leveraging the same types of DevOps principles of automation, flexibility and rapid development process, but for the network deployment side of the equation, which has had a hard time keeping up with the flexibility that DevOps teams have been demanding.”

The challenge in recent years, says Lundstrom, is that the tools available to support DevOps evolved more quickly than the toolkits to help automate and better manage the network. This is partly due to the pace of IT development, but also due to the legacy of existing network equipment, operating systems and available toolkits.

“Four years ago, maybe five, the teams that were deploying applications owned the server infrastructure and virtualization platforms, but they didn't necessarily own the networking side.

“Now, while they've always got along, the challenge has been that the applications evolved quicker than the toolkit to automate and make the network more flexible. So we’ve seen developments like OpenStack, the various VMware instantiations for VMs and containers, virtualization environments, and so on that have made rapid progress.

“But on the networking side automation has had to try to keep up, and that's where it's been challenging. This is partly due to the legacy of the existing network equipment vendors, network operating systems and toolkits,” says Lundstrom.

In both the enterprise and the data center cloud, the application development side has very much pushed innovation. In the enterprise, the demand has been for faster, better and more agile development to support digital transformation. In the data center, meanwhile, the applications demanding support might include multiplayer gaming, with hundreds of thousands or even millions of users playing concurrently and instances of the same game running out of multiple data centers around the world.

“Legacy applications were typically single-threaded, monolithic installations. Today, whether it’s a video application, games or business, they are all architected very differently; not just to scale ‘horizontally’, but ‘vertically’,” says Lundstrom. “This shifts major new requirements onto the network operations team in terms of the different services required and how they are implemented so that each user enjoys the same experience.”

These significantly greater demands mean that network operations is no longer a ‘fire and forget’ activity – something that can be set up once and forgotten about until a change is required. Some applications, says Lundstrom, have microservices that only need to exist for minutes (for the lifespan of a transaction, for example) and do not need to be set up to run for days, weeks or months. “So the connectivity needs to be able to rapidly change to support that kind of model,” he says.

But while there are challenges all the way across the sector, it is in the hyperscale data center where they are most acute.

“They were some of the pioneers of NetOps, and they’ve done a great job in helping to steer the industry. When we worked with Apple and other large hyperscalers, it was very apparent some of the kinds of challenges they faced with their existing network equipment vendors and solutions that struggled architecturally, as well as in areas such as the support interfaces and the things they needed, such as telemetry, which wasn’t quite where they needed it to be,” says Lundstrom.

Companies like Apple, rolling out data centers to support iPhone users and sophisticated global applications like Apple Maps, drove a lot of the change in NetOps. They drove the scale, says Lundstrom, but companies below them are now starting to take advantage of everything they helped to pioneer.

Tooling up

Of course, DevOps would never have been possible without enabling tools and technologies: Chef and Puppet for configuration management, so that code could be deployed across multiple servers at the same time; Git for source code management; and Jenkins for managing and automating the stages of the delivery pipeline. Docker provided the containers, and Kubernetes the orchestration, that enabled DevOps applications to be run easily and securely in the cloud. All these tools could be tried out in production under open source licenses.

A similar ecosystem has developed over the past decade in NetOps, says Lundstrom, and is now gathering pace. SaltStack, for example, is an open-source IT automation tool that supports an infrastructure-as-code approach to network deployment and management in the data center, as well as configuration management, security operations (SecOps) orchestration and hybrid cloud control. “But it really depends on how much configuration you’re going to be doing and how much variance you’re going to have in your environment as to how useful those tools can be,” he says.
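To make the infrastructure-as-code idea concrete, here is a minimal Python sketch. It does not use SaltStack or any vendor API; the `Interface` model, the `plan_changes` helper and the port names are all hypothetical, chosen only to illustrate the pattern. The desired network state is declared as data, compared with what is currently deployed, and turned into a list of changes to apply.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Interface:
    """Settings for one switch port (hypothetical model for illustration)."""
    name: str
    description: str
    vlan: int
    enabled: bool = True


def plan_changes(desired, current):
    """Return the actions needed to move `current` towards `desired`.

    Both arguments are dicts mapping an interface name to an Interface.
    """
    actions = []
    for name in sorted(desired):
        if name not in current:
            actions.append(("create", name))
        elif desired[name] != current[name]:
            actions.append(("update", name))
    for name in sorted(current):
        if name not in desired:
            actions.append(("delete", name))
    return actions


# Desired state, as it might be kept in version control (assumed values).
desired = {
    "ethernet-1/1": Interface("ethernet-1/1", "leaf1 to spine1", vlan=100),
    "ethernet-1/2": Interface("ethernet-1/2", "leaf1 to spine2", vlan=100),
}

# State as currently observed on the device (assumed values).
current = {
    "ethernet-1/1": Interface("ethernet-1/1", "leaf1 to spine1", vlan=100),
    "ethernet-1/2": Interface("ethernet-1/2", "leaf1 to spine2", vlan=200),
    "ethernet-1/3": Interface("ethernet-1/3", "unused", vlan=1, enabled=False),
}

plan = plan_changes(desired, current)
print(plan)
```

Because the plan is derived from a comparison rather than written by hand, running it again after the changes have been applied should produce an empty list, which is what makes this style of automation safe to repeat. As Lundstrom's caveat suggests, how much this helps depends on how much configuration and variance an environment actually has.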

Equally powerful for data center management, though, are tools such as Grafana and Telegraf, which can help in the analysis of network data. Telegraf collects metrics and events from databases, systems and IoT sensors, while Grafana is an interactive visualization tool that can provide charts and graphs, and generate alerts based on network and other data. Again, data center operators can easily get started with such tools because they are available under open source licenses, and can graduate to enterprise licenses if the tools prove a valuable addition to the NetOps toolset.

Now, says Lundstrom, NetOps pros face the challenge of managing all the information from across the network that the new tools are delivering.

“More information means more flexibility, but how do you determine what is a good state for the network to be in, when so much is changing all the time? And what is a bad state? I would say that the bigger challenge is what you do with all this new information flooding into dashboards.

“It could span multiple network elements, even across different data centers. The overall health of solutions is something that everybody needs to be well aware of, and they don’t want to wait in five, 10, 15 or 20 minute intervals as they previously had to with SNMP,” says Lundstrom.
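One simple way to approach the "good state or bad state" question is to compare each new measurement with a rolling baseline of recent values. The Python sketch below is an illustrative assumption, not a method described by Nokia or Lundstrom: the `BaselineMonitor` class, the window size, the tolerance and the sample link-utilization figures are all hypothetical.

```python
from collections import deque
from statistics import mean, pstdev


class BaselineMonitor:
    """Classify samples against a rolling baseline (hypothetical helper)."""

    def __init__(self, window=30, tolerance=3.0, warmup=5):
        self.samples = deque(maxlen=window)  # recent values treated as normal
        self.tolerance = tolerance           # allowed deviation, in std devs
        self.warmup = warmup                 # samples needed before judging

    def observe(self, value):
        """Return 'learning', 'good' or 'bad' for `value`, then record it."""
        state = "learning"
        if len(self.samples) >= self.warmup:
            baseline = mean(self.samples)
            spread = pstdev(self.samples) or 1e-9  # avoid division by zero
            deviation = abs(value - baseline) / spread
            state = "bad" if deviation > self.tolerance else "good"
        if state != "bad":
            # Outliers are kept out of the baseline so they do not mask
            # later problems.
            self.samples.append(value)
        return state


# Assumed link-utilization readings (percent) arriving as telemetry.
monitor = BaselineMonitor()
readings = [40, 42, 41, 39, 40, 41, 95]
states = [monitor.observe(r) for r in readings]
print(states)
```

The first five readings only build the baseline, the sixth falls within it, and the jump to 95 is flagged. A production system would need to handle legitimate shifts in traffic, but the sketch shows why frequent samples matter: with readings arriving only every few minutes, the baseline takes far longer to form and a spike is noticed much later.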

Of course, the hardware at the center of this new paradigm is the data center switches made by companies like Nokia, alongside the IP edge routers that tie it all together, such as the popular 7750 SR-Series. “They’re ‘battle hardened’ and have done very well where there’s a lot of optical data center interconnect equipment, such as global interconnection data centers such as Equinix and internet peering data centers, such as the London Internet Exchange (LINX) and DE-CIX in Germany,” says Lundstrom.

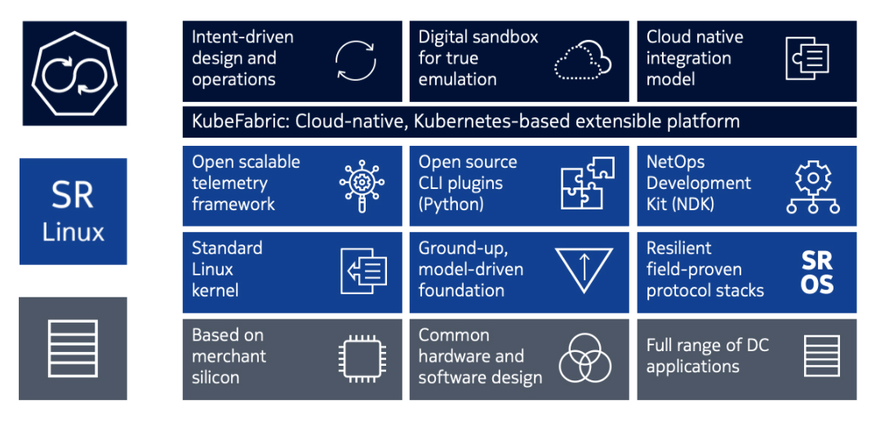

“We’ve also made a big investment in the data center at the spine and leaf layer, with our 7220 and 7250 IXR hardware, SR Linux product set and its accompanying orchestration solution, called the Fabric Services System (FSS). This combination gives data centers the flexibility they need, while also supporting the NetOps team, enabling them to keep up with DevOps,” he adds.

Indeed, while Nokia, Cisco, Juniper and other data center networking equipment providers do all ‘play nice’ together, one of the key points of differentiation is how their respective network operating systems are architected and, hence, how supportive they are of NetOps.

“The capabilities we built into our network operating system do give us an advantage for those customers who need more flexibility, whether that’s in managing telemetry data, provisioning and managing network elements, adding support for third-party agents, or how they make fundamental changes to the network operating system themselves – and, hence, the network.

“There’s flexibility that the data center operators need that, we think, is something we’re uniquely positioned to be able to provide,” says Lundstrom.

For more information about Nokia 7220 and 7250 hardware, SR Linux, Fabric Services System and NetOps automation, please visit the Nokia solution webpage.
