Running a successful commercial data center is not for the faint of heart. With increased competition, profit margins are creeping downward. So one might assume data center operators would take advantage of something as simple as raising the equipment operating temperature a few degrees.

Letting the temperature climb can yield energy savings of four percent for every degree of increase, according to the US General Services Administration. But most data centers aren’t getting warmer. Why is this?
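To put the four-percent figure in perspective, here is a minimal arithmetic sketch. It rests on assumptions the quoted figure does not settle: the five-degree increase is hypothetical, the unit of "degree" is whichever one the four-percent figure refers to, and it is unclear whether the savings add linearly or compound on the remaining bill, so both readings are shown.

```python
def linear_savings(rate_per_degree, degrees):
    """Savings fraction if each degree saves a fixed share of the original bill."""
    return min(rate_per_degree * degrees, 1.0)


def compounded_savings(rate_per_degree, degrees):
    """Savings fraction if each degree saves a share of what is left of the bill."""
    return 1.0 - (1.0 - rate_per_degree) ** degrees


RATE = 0.04   # four percent per degree, the figure quoted above
DEGREES = 5   # hypothetical increase, chosen only for illustration

print(f"linear reading:     {linear_savings(RATE, DEGREES):.1%}")      # 20.0%
print(f"compounded reading: {compounded_savings(RATE, DEGREES):.1%}")  # 18.5%
```

Under either reading, a few degrees would trim cooling-related energy use by something approaching a fifth, which is why the reluctance described below is worth explaining.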

The ASHRAE shake-up

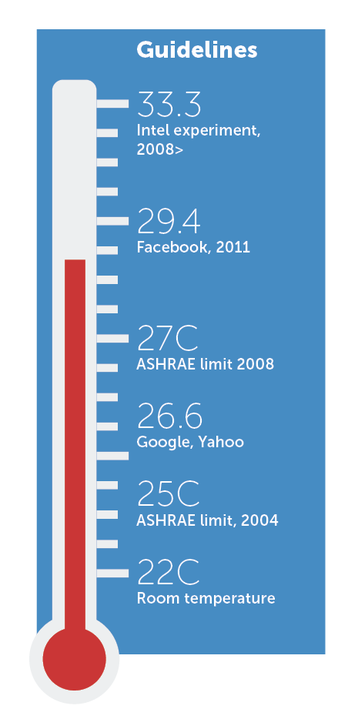

For years, 20°C to 22°C (68°F to 71°F) was considered the ideal temperature range for IT equipment. In 2004, ASHRAE (American Society of Heating, Refrigerating and Air-Conditioning Engineers) recommended an operating temperature range of 20°C to 25°C (68°F to 77°F), based on its own study and advice from equipment manufacturers. Seeing the advantage, engineers raised temperatures closer to the 25°C (77°F) upper limit.

ASHRAE shook things up in 2008 with the addendum Environmental Guidelines for Datacom Equipment, in which the organization expanded the recommended operating temperature range from 20°C to 25°C (68°F to 77°F) to 18°C to 27°C (64.4°F to 80.6°F). To ease concerns, ASHRAE engineers note in the addendum that increasing the operating temperature has little effect on component temperatures but should offer significant energy savings.

Also during 2008, Intel ran a ten-month test involving 900 servers; 450 were in a traditional air-conditioned environment and 450 were cooled using outside air that was unfiltered and without humidity control. The only concession was to make sure the air temperature stayed within 17.7°C to 33.3°C (64°F to 92°F). Despite the dust, uncontrolled humidity, and large temperature swings, the unconditioned module’s failure rate was just two percent higher than the control’s, and the module realized a 67 percent power saving.

In 2012, a research project at the University of Toronto resulted in the paper Temperature Management in Data Centers: Why Some (Might) Like It Hot. The research team studied component reliability data from three organizations and dozens of data centers. “Our results indicate that, all things considered, the effect of temperature on hardware reliability is weaker than commonly thought,” the paper states. “Increasing data center temperatures creates the potential for large energy savings and reductions in carbon emissions.”

Between the above research and their own efforts, it became clear to those managing mega data centers that it was in their best interest to raise operating temperatures in the white space.

Google and Facebook get hotter

By 2011, Facebook engineers were exceeding ASHRAE’s 2008 recommended upper limit at the Prineville and Forest City data centers. “We’ve raised the inlet temperature for each server from 26.6°C (80°F) to 29.4°C (85°F)…,” writes Yael Maguire, then director of engineering at Facebook. “This will further reduce our environmental impact and allow us to have 45 percent less air-handling hardware than we have in Prineville.”

Google data centers are also warmer, running at 26.6°C (80°F). Joe Kava, vice president of data centers, says in a YouTube video: “Google runs data centers warmer than most because it helps efficiency.”

It’s fine for Facebook and Google to raise temperatures, say several commercial data center operators. Both companies use custom-built servers, which means hardware engineers can design the server’s cooling system to run efficiently at higher temperatures.

Running off-the-shelf hardware should not be a concern, according to Brett Illers, Yahoo senior project manager for global energy and sustainability strategies. Illers says that Yahoo data centers are filled with commodity servers, and operating temperatures are approaching 26.6°C (80°F).

David Moss, cooling strategist in Dell’s CTO group and an ASHRAE founding member, agrees with Illers. Dell servers have an upper temperature limit well above the 2008 ASHRAE-recommended 27°C (80.6°F), and at that ASHRAE upper limit server fans are not even close to running at maximum.

All the research, and what Facebook, Google and other large operators are doing temperature-wise, is not influencing many commercial data center managers. It is time to figure out why.

David Ruede, business development specialist for Temperature@lert, has been in the data center “biz” a long time. During a phone conversation, Ruede explains why temperatures are below 24°C (75°F) in most for-hire data centers.

Historical inertia

For one, what he calls “historical inertia” is in play. Data center operators can’t go wrong keeping temperatures right where they are. If it ain’t broke, don’t fix it, especially with today’s service contracts and penalty clauses.

A few more reasons from Ruede: data center operators can’t experiment with production data centers, electricity rates have remained relatively constant since 2004, and operators worry that usage surges may adversely affect contracts.

Both Ruede and Moss cite an often overlooked concern. Data centers last a long time (10 to 25 years), meaning legacy cooling systems may not cope with temperature increases. Moss says: “Once walls are up, it’s hard to change strategy.”

Chris Crosby, founder and CEO of Compass Data Centers, knows about walls, since the company builds patented turn-key data centers. That capability provides Crosby with first-hand knowledge of what clients want regarding operating temperatures.

Crosby says interdependencies in data centers are being overlooked. “Server technology with lower delta T, economizations, human ergonomics, frequency of move/add/change, scale of the operation… it’s not a simple problem,” he explains. “There is no magic wand to just raise temperatures for savings. It involves a careful analysis of data to ensure that it’s right for you.

“The benefits when we’ve modeled using Romonet are rounding errors,” adds Crosby. “To save 5,000 to 10,000 dollars annually for a sweatshop environment makes little sense. And, unless you have homogeneous IT loads of 5+ megawatts, I don’t see the cost-benefit analysis working out.”

Some people are warming

Hank Koch, vice-president of facilities for OneNeck IT Solutions, gave assurances there are commercial data centers, even colocation facilities, running at the high end of the 2008 ASHRAE-recommended temperature range. Koch says OneNeck’s procedure regarding white-space temperature is to sound an alarm when the temperature reaches 25.5°C (78°F). However, temperatures are allowed to reach 26.6°C (80°F).
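A two-threshold policy like the one Koch describes can be sketched in a few lines. This is an illustration only, not OneNeck’s monitoring code: the function name, the status labels, and the treatment of readings exactly at each threshold are assumptions, while the two temperatures are the figures quoted above.

```python
ALARM_C = 25.5  # alarm threshold quoted by Koch
LIMIT_C = 26.6  # highest white-space temperature Koch says is allowed


def classify_reading(temp_c):
    """Return a hypothetical status label for one white-space temperature reading."""
    if temp_c > LIMIT_C:
        return "over-limit"  # beyond what the policy allows
    if temp_c >= ALARM_C:
        return "alarm"       # alarm sounds, but the temperature is still permitted
    return "normal"


for reading in (24.0, 25.5, 26.6, 27.0):
    print(f"{reading:.1f}°C -> {classify_reading(reading)}")
```

The gap between the two thresholds gives operators a warning band in which to react before the allowed maximum is reached.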

So why aren’t data centers getting warmer when there’s money and the environment to be saved? The answer is that it’s not as simple as we thought. Data centers are complex ecosystems, and increasing operating temperatures requires more than just bumping up the thermostat setting.

This article appeared in the March 2015 issue of DatacenterDynamics magazine. We will return to cooling in the July/August issue.