Uptime Institute’s data on power usage effectiveness (PUE) is a testament to the progress the data center industry has made in energy efficiency over the past 10 years. However, global average PUEs have largely stalled at close to 1.6 since 2018, with only marginal gains. This makes sense: for the average figure to show substantial improvement, most facilities would require financially unviable overhauls to their cooling systems to achieve notably better efficiencies, while modern builds already operate near the physical limits of air cooling.

Looking to liquid

A growing number of operators are looking to direct liquid cooling (DLC) for the next leap in infrastructure efficiency. But a switch to liquid cooling at scale involves operational and supply chain complexities that challenge even the most resourceful technical organizations. Uptime is aware of only one major operator that runs DLC as standard: French hosting and cloud provider OVHcloud, which is an outlier with a vertically integrated infrastructure using custom in-house water cold plate and server designs.

Heat rejection is an often-overlooked part of the cooling infrastructure when liquid cooling is discussed. Rejecting heat into the atmosphere is a major source of inefficiency, which shows up not only in energy use but also in capital costs and in the large reserves of power held for worst-case (design day) cooling needs.

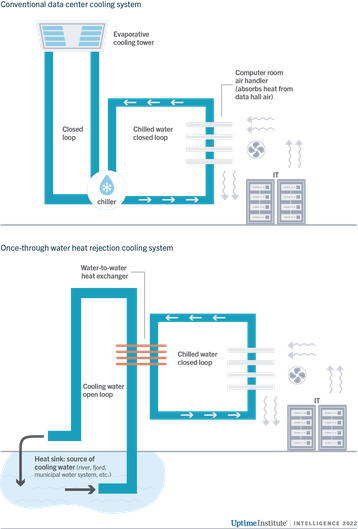

A small number of data centers have been using water features as heat sinks successfully for some years. Instead of eliminating heat through cooling towers, air-cooled chillers or other means that rely on ambient air, these facilities use a closed chilled water loop that rejects heat through a heat exchanger cooled by an open loop of water. These cooling designs using water heat sinks extend the benefits of water’s thermal properties from heat transport inside the data center to the rejection of heat outside the facilities.

The idea of using water for heat rejection, of course, is not new. Known as once-through cooling, these systems are used extensively in thermoelectric power generation and manufacturing industries for their efficiency and reliability in handling large heat loads. Because IT infrastructures are comparatively small and tend to cluster around population centers, which in turn tend to be situated near water, Uptime considers the approach to have wide geographical applicability in future data center construction projects.

Uptime’s research has identified more than a dozen data center sites, some operated by global brands, that use a water feature as a heat sink. All once-through cooling designs use some custom equipment — there are currently no off-the-shelf designs that are commercially available for data centers. While the facilities we studied vary in size, location and some engineering choices, there are some commonalities between the projects.

Operators we’ve interviewed for the research (all of them colocation providers) considered their once-through cooling projects to be both a technical and a business success, achieving strong operational performance and attracting customers. The energy price crisis that started in 2021, combined with a corporate rush to claim strong sustainability credentials, reportedly boosted the level of return on these investments past even optimistic scenarios.

Rejecting heat into bodies of water allows for stable PUEs year-round, meaning that colocation providers can serve a larger IT load from the same site power envelope. Another benefit is the ability to lower computer room temperatures, for example to between 64°F and 68°F (18°C and 20°C), for “free”: doing so does not come with a PUE penalty. Low-temperature air supply helps operators minimize IT component failures and accommodate future high-performance servers with sufficient cooling. If the water feature is naturally flowing or replenished, operators also eliminate the need for chillers or other large cooling systems, which would otherwise be required as backup.
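The link between PUE and IT capacity follows from the definition of PUE (total facility power divided by IT power): for a fixed site power envelope, the supportable IT load is the envelope divided by the PUE the site must provision for. The short Python sketch below illustrates the arithmetic only. The 10 MW envelope and the PUE of 1.2 are hypothetical figures chosen for illustration, not values reported by the operators in this research; 1.6 is used simply because it is close to the global average cited above.

```python
def it_capacity_kw(site_power_kw: float, pue: float) -> float:
    """Return the IT load (kW) that fits within a fixed site power envelope.

    PUE = total facility power / IT power, so IT power = total power / PUE.
    """
    if pue < 1.0:
        raise ValueError("PUE cannot be lower than 1.0")
    return site_power_kw / pue


# Hypothetical inputs, for illustration only.
site_power_kw = 10_000.0  # assumed site power envelope (10 MW)
provisioned_pue = 1.6     # assumed worst-case PUE the site must plan around
stable_pue = 1.2          # assumed stable year-round PUE with a water heat sink

baseline_kw = it_capacity_kw(site_power_kw, provisioned_pue)
improved_kw = it_capacity_kw(site_power_kw, stable_pue)
extra_kw = improved_kw - baseline_kw

print(f"IT capacity at PUE {provisioned_pue}: {baseline_kw:.0f} kW")
print(f"IT capacity at PUE {stable_pue}: {improved_kw:.0f} kW")
print(f"Additional IT load from the same envelope: {extra_kw:.0f} kW")
```

Under these assumed numbers, the same envelope supports roughly a third more IT load. The actual gain at any site depends on the PUE it would otherwise have to provision for on its design day.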

Still, it was far from certain during design and construction that these favorable outcomes would outweigh the required investments, as all undertakings involved nontrivial engineering efforts and associated costs. Committed sponsorship from senior management was critical for these projects to be given the green light and to overcome any unexpected difficulties.

We predict a more mature market

Encouraged by the positive experience of the facilities we studied, Uptime expects once-through cooling to gather more interest in the future. A more mature market for these designs will factor into siting decisions and into jurisdictional permitting requirements, where such designs can serve as a proxy for efficiency and sustainability. Once-through systems will also help to maximize the energy efficiency benefits of future DLC rollouts through “free” low-temperature operations, creating an end-to-end liquid cooling infrastructure.