As of 2021, there are 6.5 times as many connected devices as people on Earth. Industry projections anticipated enormous growth, but the boom has exceeded even those high expectations. To process the data these devices generate at ultra-fast speeds, many organizations are turning to Edge computing.

According to a recent survey by STL Partners, 49 percent of enterprises in certain industries are actively exploring Edge computing. That aligns with the Vertiv Data Center 2025 survey, in which participants expected the number of Edge sites they will need to support to grow by 226 percent from 2019 to 2025.

As the edge of the network proliferates and becomes more sophisticated and mission-critical, the need to streamline the configuration and deployment of Edge computing sites increases.

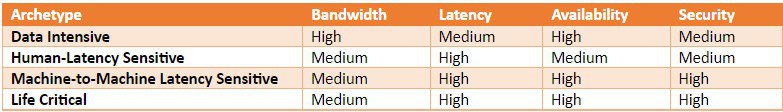

The first step in that process was to categorize Edge use cases based on their latency, bandwidth, availability, and security requirements. To that end, Vertiv analyzed established and emerging Edge use cases and identified four archetypes for Edge computing.

The four archetypes brought clarity to the broad and sometimes confusing Edge computing landscape and created the foundation for more in-depth analysis focused on infrastructure requirements.

Using the archetypes, organizations considering Edge deployments could quickly identify the key attributes of their Edge networks based on whether their use cases were primarily: data intensive, human-latency sensitive, machine-to-machine latency sensitive or life critical.

Introducing Edge computing models

The next step involves defining a framework for categorizing Edge infrastructure into specific models, to help organizations make practical decisions around physical infrastructure at the Edge.

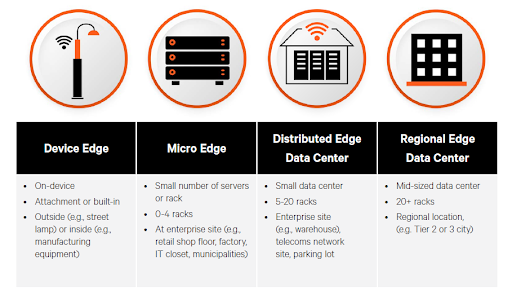

Today’s Edge encompasses a spectrum that extends from regional Edge data centers down to devices. Depending on the specific requirements of the use case, the Edge network may include all or some of these Edge computing models.

The need for regional Edge data centers is being driven by growth in data intensive and human-latency sensitive use cases, such as high-definition content distribution and cloud gaming. Most enterprises will leverage multi-tenant data centers to enable regional Edge capabilities, and colocation providers are actively expanding their networks to support this evolution.

However, as select use cases in the human-latency sensitive and machine-to-machine latency sensitive archetypes continue to expand — think virtual reality and smart grids respectively — some capacity at regional Edge data centers will migrate to distributed Edge and micro Edge data centers.

While similar in function, these two data center models each play a distinct role in the Edge ecosystem. The distributed Edge model offers a higher level of availability than can typically be achieved at the micro Edge but sacrifices some of the latency reduction provided by that model because these sites aren’t located as close to the data source.

Situated at an enterprise site, a telco network facility, or a multi-site facility, distributed Edge sites can bring computing closer to large groups of users than a regional Edge site, for use cases that are latency-sensitive but don’t require ultra-low latency and where it isn’t practical to deploy the number of micro Edge sites that would be required.

The micro Edge data center, due to its smaller size, enables deployment closer to data sources to minimize latency for use cases that don’t rely on the device Edge. The size of these Edge sites, which may be deployed in back offices, on shop floors, or at the base of a telco tower, can limit the physical infrastructure to essential power protection and distribution, cooling, and a cabinet or enclosure, providing less redundancy than can be achieved at the distributed or regional Edge.

Their ability to minimize latency will, however, make them critical in enabling human-latency sensitive, machine-to-machine latency sensitive and data intensive archetypes. Many of the industries that will benefit most from the expansion of the Edge in the short term will need to deploy micro Edge data centers, including retail, manufacturing, education, healthcare, telecommunications and government.

In these industries, the local compute provided by the micro Edge enables faster data collection and processing that can be used to increase efficiency, reduce equipment downtime, improve customer experiences, and introduce new applications.

This is expected to drive deployment of thousands of micro Edge data centers in the coming years. This model in particular will benefit from standardized infrastructure solutions that integrate compute, power protection and distribution, cooling, and enclosures in use-case-specific systems that enable fast deployment.

Accelerating Edge deployments

No two Edge sites will be exactly alike, but they share key characteristics that establish parameters for important decisions around equipment selection and architecture.

Since similar use cases often have similar requirements, identifying the Edge archetype that most accurately represents a use case can guide which Edge infrastructure model may be best. The models can then inform decisions around physical location, further narrowing equipment decisions and configuration options.
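To make that archetype-to-model step concrete, here is a minimal Python sketch. It is an illustration only, not Vertiv's selection tool or methodology: the names `Archetype`, `Model`, `CANDIDATES`, and `candidate_models` are hypothetical, and the associations are limited to the ones stated earlier in this article.

```python
from enum import Enum


class Archetype(Enum):
    DATA_INTENSIVE = "data intensive"
    HUMAN_LATENCY = "human-latency sensitive"
    M2M_LATENCY = "machine-to-machine latency sensitive"
    LIFE_CRITICAL = "life critical"


class Model(Enum):
    REGIONAL = "regional Edge data center"
    DISTRIBUTED = "distributed Edge data center"
    MICRO = "micro Edge data center"


# Assumption: a simplified lookup built only from associations mentioned
# in this article. A real assessment would also weigh bandwidth,
# availability, and security requirements for the specific use case.
CANDIDATES = {
    Archetype.DATA_INTENSIVE: [Model.REGIONAL, Model.MICRO],
    Archetype.HUMAN_LATENCY: [Model.REGIONAL, Model.DISTRIBUTED, Model.MICRO],
    Archetype.M2M_LATENCY: [Model.DISTRIBUTED, Model.MICRO],
}


def candidate_models(archetype):
    """Return the models this article associates with an archetype.

    An empty list means the article does not tie that archetype to a
    specific model, so no guess is made here.
    """
    return list(CANDIDATES.get(archetype, []))


if __name__ == "__main__":
    for archetype in Archetype:
        names = [m.value for m in candidate_models(archetype)]
        print(f"{archetype.value}: {names or 'not covered in this article'}")
```

The life critical archetype returns an empty list on purpose, since this article does not link it to a particular model. The lookup only produces a shortlist; the physical location and equipment decisions described above still follow from the chosen model.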

To learn more about Edge data center models, read Vertiv’s new report, Edge Archetypes 2.0: Deployment-Ready Edge Architecture Models.

Vertiv has also built an interactive Edge infrastructure selection tool that details the specific workload and infrastructure characteristics based on user-selected industries and use cases. Find the Edge Archetypes 2.0 Tool link in the top menu to give it a try.