Energy efficiency is the unsung hero of computing.

We all know the performance story. Cutting-edge mobile technology once meant a calculator with a dedicated square root button. Soon, autonomous cars, smart medical devices and smart buildings will change the fabric of daily life.

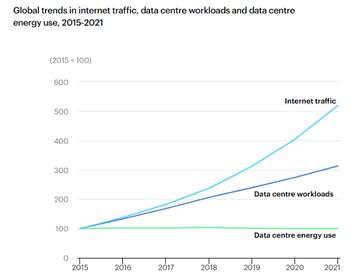

But those advances have only been possible because mobile phones, servers and other devices have been doubling the amount of work they perform per watt of power roughly every 2 to 2.5 years. Take data centers. Data center workloads have nearly tripled since 2015 while Internet traffic has grown 5x, yet data center power consumption, thanks to a number of background breakthroughs that rarely make the front pages, has stayed relatively flat at around 198 billion kilowatt hours a year.
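To see how quickly that doubling compounds, here is a minimal sketch that assumes a simple exponential model. The function names are illustrative and do not come from any cited study. Because total work equals power multiplied by work-per-watt, flat power combined with roughly tripled workloads implies that work-per-watt has also roughly tripled.

```python
import math


def efficiency_gain(years: float, doubling_period_years: float) -> float:
    """Factor by which work-per-watt grows over `years` if it doubles
    every `doubling_period_years` (simple exponential model, an assumption)."""
    return 2 ** (years / doubling_period_years)


def implied_doubling_period(years: float, observed_gain: float) -> float:
    """Doubling period consistent with an `observed_gain` over `years`."""
    if observed_gain <= 1:
        raise ValueError("gain must exceed 1 for a finite doubling period")
    return years * math.log(2) / math.log(observed_gain)


def work_supported(power: float, work_per_watt: float) -> float:
    """Total work = power x work-per-watt, so flat power plus rising
    efficiency still leaves room for more work."""
    return power * work_per_watt


# Doubling every 2 to 2.5 years compounds to roughly 4x-5.7x over five years.
for period in (2.0, 2.5):
    print(f"doubling every {period} yrs -> {efficiency_gain(5, period):.2f}x in 5 yrs")
```

The same relationship also runs in reverse. `implied_doubling_period` takes an observed gain over a known span and returns the doubling rate that would produce it.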

Is this unusual? Does your car’s gas mileage double every few years?

Another way to look at the energy efficiency phenomenon is through the lens of compute density. Increasing compute density increases the resources available per data center, which in turn means a potentially better ROI for carriers, better pricing for applications taking advantage of the infrastructure and more rapid adoption by customers. A rack, however, can only supply a finite amount of power and dissipate a finite amount of heat: either work-per-watt goes up, or more space and servers are required. Without efficiency gains, the virtuous cycle starts creaking.
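The rack-level trade-off can be sketched with a toy model. It assumes that power is the only binding limit on a rack and ignores physical slots and cooling layout. The server wattages, throughput figures and rack budget are hypothetical numbers chosen for illustration.

```python
import math
from dataclasses import dataclass


@dataclass
class ServerSpec:
    watts: float       # power drawn by one server under load (hypothetical figure)
    work_units: float  # throughput of one server, in arbitrary units (hypothetical)


def servers_per_rack(server: ServerSpec, rack_power_budget_w: float) -> int:
    """How many servers fit under the rack's power cap.
    Assumption: power, not physical slots, is the binding limit."""
    return math.floor(rack_power_budget_w / server.watts)


def racks_needed(total_work: float, server: ServerSpec, rack_power_budget_w: float) -> int:
    """Racks required to deliver `total_work` with a given server type."""
    per_rack = servers_per_rack(server, rack_power_budget_w)
    if per_rack == 0:
        raise ValueError("a single server exceeds the rack power budget")
    servers = math.ceil(total_work / server.work_units)
    return math.ceil(servers / per_rack)


# Same 500 W servers, but the newer one does twice the work per watt.
older = ServerSpec(watts=500, work_units=100)
newer = ServerSpec(watts=500, work_units=200)
for name, spec in (("older", older), ("newer", newer)):
    print(name, racks_needed(total_work=10_000, server=spec, rack_power_budget_w=10_000))
```

Under a fixed 10 kW budget, doubling work-per-watt cuts the rack count from five to three for the same workload. Without that gain, the only way to grow is to add space and servers.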

The coming wave of intelligent devices adds a new level of urgency to the performance-per-watt quest. Electricity is often 30-50 percent of the operating costs of data centers. Meanwhile, the State of the Edge 2020 report estimates that 102 GW of new power capacity, roughly a California-size grid, will be needed by 2028 to serve these new applications. As a result, a number of industries could sink or swim depending on what happens with efficiency and density.

What are some of the ideas for bending the curve?

More Cores

Over the past 15 years, most operators have followed an “outside in” path to better efficiency and density. First, companies retrofitted buildings to improve air flow. Then, plastic sheeting around racks brought cooling budgets down further. Then they went inside the racks to root out zombie servers that draw power without doing useful work. Next came GPUs and flash accelerators to boost operations-per-watt.

The next layer of the onion revolves around the crown prince of computer components. Yes, the processor. Data centers are deploying servers with processors powered by large numbers of energy-efficient compute cores instead of smaller numbers of beefier cores. Put another way, they are shifting from racing in a lower gear at high RPMs to cruising in a higher gear at lower RPMs.

The new Graviton2 system-on-chip (SoC) processor from Amazon Web Services (AWS) consists of 64 compute cores that can be combined with other purpose-built cores for hundreds, if not thousands, of cores per rack. Last November, Fujitsu claimed the #1 spot on the Green500 list with Fugaku, a high-performance supercomputer containing nearly 37,000 energy-efficient cores that can perform 16.876 gigaflops per watt. Ampere recently showed how it can fit 3,680 cores into a rack, more than 2.5x the usual count.

Add NPUs

Like Stan Smith sneakers from the 1970s, the co-processor is back. Neural network processing technology, which can come in the form of vector extensions, integrated circuitry or a dedicated NPU, is specifically designed to perform matrix multiplication in a compute-efficient (and thus energy-efficient) manner. Classic CPUs are not. Deloitte predicts NPU shipments will grow by 20 percent per year, more than 2x the long-term CAGR for semiconductors.

NPU technology could be critical for reducing the resources required for extracting insights from video and images with AI. Video already accounts for an estimated 75 percent of Internet traffic today and will gobble up 82 percent by 2022. Facial recognition and real-time analysis will only add to the heft.

Leverage the Edge

Cloud-based data centers are, in the abstract, the most efficient place to run computing loads. Workloads can be aggressively consolidated, and the facilities can be populated with the most efficient equipment.

Unfortunately, we don’t live in an abstract world. A 15-minute surge in power demand at a cloud data center, for instance, could trigger annoying (and exorbitant) peak-power charges that wouldn’t occur if loads were spread to the periphery. Meanwhile, autonomous driving and AI-enabled factories will also require a sophisticated edge infrastructure.
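Utilities commonly bill large commercial customers a demand charge based on their highest average draw over a short interval, often 15 minutes. The toy model below uses made-up readings and a hypothetical offload policy: `shift_surge`, `baseline_kw` and `edge_capacity_kw` are illustrative names, not any operator's method. It shows why spreading a surge to edge sites can lower the central site's billed peak while leaving total energy unchanged. It ignores whatever charges the edge sites incur themselves.

```python
def billed_peak_kw(samples_kw: list[float], samples_per_interval: int) -> float:
    """Highest average demand over any complete, non-overlapping billing
    interval (e.g. 15 one-minute readings = one 15-minute interval)."""
    if len(samples_kw) < samples_per_interval:
        raise ValueError("need at least one full billing interval of samples")
    averages = []
    for start in range(0, len(samples_kw) - samples_per_interval + 1, samples_per_interval):
        window = samples_kw[start:start + samples_per_interval]
        averages.append(sum(window) / samples_per_interval)
    return max(averages)


def shift_surge(samples_kw: list[float], baseline_kw: float,
                edge_capacity_kw: float) -> tuple[list[float], list[float]]:
    """Hypothetical policy: demand above `baseline_kw` is moved to edge
    sites, up to `edge_capacity_kw` per sample. Returns (central, edge)."""
    central, edge = [], []
    for demand in samples_kw:
        moved = min(max(0.0, demand - baseline_kw), edge_capacity_kw)
        central.append(demand - moved)
        edge.append(moved)
    return central, edge


# One hour of one-minute readings: steady 800 kW, then a 15-minute 1,200 kW surge.
readings = [800.0] * 45 + [1200.0] * 15
central, edge = shift_surge(readings, baseline_kw=800.0, edge_capacity_kw=300.0)
print("billed peak, all central:", billed_peak_kw(readings, 15))
print("billed peak, with edge offload:", billed_peak_kw(central, 15))
```

In this example, offloading 300 kW of the surge drops the central site's billed peak from 1,200 kW to 900 kW. The same amount of energy is still consumed, but the draw is split between the central site and the edge.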

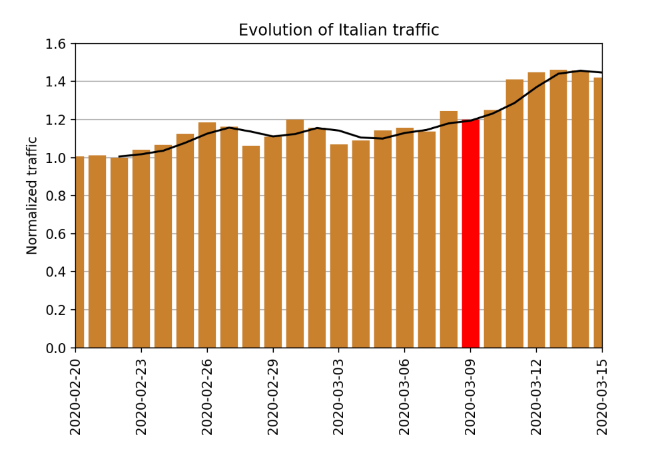

And in a national or global crisis like the current Covid-19 pandemic, a cloud-centric outlook could mean slow connections and spotty video feeds. For example, Internet traffic in the U.S. temporarily spiked by 20 percent after a national state of emergency was declared on March 13, according to Cloudflare, while Italy’s daily traffic surged 20-40 percent (see image).

And with videoconferencing likely to be more common in a post-pandemic world, the need for a deep edge is even more apparent.

This trend will require carriers and others to develop techniques for fluidly shifting loads to maximize utilization and minimize power and network traffic. Ehsan Ahvar, Anne-Cécile Orgerie and Adrien Lebre at the Inria Project Lab recently wrote that managing IoT-related tasks on distributed servers can consume 14 percent to 25 percent less power than a cloud-based data center. Why? Fewer network hops, lower cooling loads, and better use of fallow computing assets.

Similarly, researchers at the University of Leeds found that the power consumption of surveillance video tasks could be cut by up to 32 percent by harvesting the available capacity of nearby IoT and fog computing assets during slow periods.

Calculate the Ancillary Effects

More work-per-watt potentially means a better use of real estate, which can be a premium cost in cities or at the edge. A shift to renewables can reduce power and water costs as well as reduce regulatory fees. Employees, consumers and investors are demanding companies reduce their own carbon footprint as well as buy from greener suppliers.

Data centers aren’t facing a crisis yet, but they should start thinking now about the changes coming their way.
