With increasing focus on sustainable operations, data center operators have three business mandates to uphold, at times in conflict: maximizing uptime, capacity utilization, and energy efficiency. These mandates affect one another, as well as the stability of the data center as a whole.

As organizations work to strike the right balance, many operators find themselves stuck in a reactive cycle of firefighting issues as they arise – in no small part due to manual processes and a lack of integrated tools.

Digital twin technology can help data center operators break this reactive cycle. Cadence DataCenter Digital Twin enables proactive practices with always-ready computational fluid dynamics (CFD) models that support capacity planning and energy optimization through predictive what-if scenarios.

In this article, I’ll cover how an automated data entry workflow can produce routinely updated CFD analysis and simplify the decision-making process in data center operations.

Overcoming the scalability challenge

For over two decades, the data center industry has used CFD to predict airflow and temperature distribution in the whitespace. CFD analysis enables data center designers and operators to model how a data center will perform under a wide range of operating conditions.

However, until recently, the technology was used mainly during the design and commissioning phases, or mid-life for retrofits and optimization projects. Using it in day-to-day operations was largely seen as a pipe dream, because keeping CFD models up to date was thought to require an impractical commitment of resources.

In other words, what good are predictions from an outdated model? It is a valid concern, given that most data centers undergo constant change during daily operations. Additionally, the data required for modeling is typically siloed across multiple teams, and many organizations may not have the in-house expertise to perform CFD analysis regularly.

To overcome these challenges and achieve up-to-date predictive analysis, Cadence data center software automates model upkeep by integrating data feeds from toolsets that organizations already use for day-to-day operations – tools such as data center infrastructure management (DCIM), environmental and electrical monitoring systems, and CSV spreadsheets.

By synchronizing with these toolsets, digital twin models can pull in the relevant data and update accordingly. This data includes objects on the floor plan, assets in the racks, power chain connections, historical power and environmental readings, and perforated tile and return grate locations.
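To make the synchronization step concrete, here is a minimal sketch in Python of applying one data feed to a model. It is not the Cadence API: the `Rack` record, the `sync_rack_readings` function, and the CSV columns `rack_id`, `power_kw`, and `inlet_temp_c` are hypothetical stand-ins for a DCIM or monitoring export. The point it illustrates is that an automated sync both updates known assets and flags feed entries the model does not yet contain, rather than silently guessing where they belong.

```python
from __future__ import annotations

import csv
import io
from dataclasses import dataclass


@dataclass
class Rack:
    """Hypothetical minimal rack record in a digital twin model."""
    rack_id: str
    power_kw: float = 0.0
    inlet_temp_c: float | None = None


def sync_rack_readings(model: dict[str, Rack], csv_text: str) -> tuple[list[str], list[str]]:
    """Apply a CSV export of rack readings (hypothetical columns) to the model.

    Returns (updated, unknown): IDs of racks whose values changed, and IDs
    present in the feed but missing from the model.
    """
    updated: list[str] = []
    unknown: list[str] = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        rack_id = row["rack_id"].strip()
        rack = model.get(rack_id)
        if rack is None:
            # Not in the floor plan yet: flag for review instead of guessing placement.
            unknown.append(rack_id)
            continue
        power = float(row["power_kw"])
        inlet = float(row["inlet_temp_c"])
        if (power, inlet) != (rack.power_kw, rack.inlet_temp_c):
            rack.power_kw, rack.inlet_temp_c = power, inlet
            updated.append(rack_id)
    return updated, unknown


if __name__ == "__main__":
    model = {"A01": Rack("A01", 4.0, 22.0), "A02": Rack("A02", 6.5, 24.0)}
    feed = "rack_id,power_kw,inlet_temp_c\nA01,4.0,22.0\nA02,7.2,25.5\nB07,3.1,21.0\n"
    print(sync_rack_readings(model, feed))  # (['A02'], ['B07'])
```

Returning the changed and unknown IDs separately gives the operations team a short review list after each sync, which is what keeps supervision minimal.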

As a result, the digital twin model is always ready to run the next predictive scenario with current data and minimal supervision from the operations team. As part of its routine output, DataCenter Digital Twin produces Excel-ready reports, capacity dashboards, CFD reports, and go/no-go planning analysis.

Teams can then use this information to evaluate future capacity plans, conduct sensitivity studies (such as redundancy failure or transient power failure scenarios), and run energy optimization studies as needed. Much of this functionality is available through an intuitive, accessible web portal.
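The bulk arithmetic behind a go/no-go decision with a redundancy failure scenario can be sketched as below. This is a back-of-envelope illustration, not the Cadence analysis: the `Zone` record, the `go_no_go` function, the 90% usable-capacity `margin`, and the assumption that all IT power becomes heat removed by the zone's cooling units are all hypothetical. A zone can pass a check like this and still develop hot spots, which is exactly the airflow distribution question that CFD answers and a capacity sum cannot.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Zone:
    """Hypothetical zone summary; all values in kW."""
    power_capacity_kw: float
    it_load_kw: float
    cooling_units_kw: list[float]  # rated capacity of each cooling unit


def go_no_go(zone: Zone, proposed_kw: float, margin: float = 0.9) -> tuple[bool, str]:
    """Check a proposed deployment against power and N-1 cooling capacity.

    margin is the fraction of rated capacity treated as usable (an assumption).
    Assumes all IT power ends up as heat the cooling units must remove.
    """
    new_load = zone.it_load_kw + proposed_kw
    if new_load > margin * zone.power_capacity_kw:
        return False, "power"
    # Redundancy failure scenario: the largest cooling unit is out of service.
    n_minus_1 = sum(zone.cooling_units_kw) - max(zone.cooling_units_kw)
    if new_load > margin * n_minus_1:
        return False, "cooling (N-1)"
    return True, "ok"


if __name__ == "__main__":
    zone = Zone(power_capacity_kw=200, it_load_kw=120, cooling_units_kw=[60, 60, 60, 60])
    for kw in (40, 50, 70):
        print(kw, go_no_go(zone, kw))
```

With four 60 kW units, losing one leaves 180 kW of cooling, so a 50 kW addition fits the power budget but fails the N-1 cooling check.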

Choosing the right software for your organization’s needs

We know that every organization has a unique set of problems, priorities, and workflows. As such, we’ve split the DataCenter Insight Platform into two offerings: DataCenter Asset Twin and DataCenter Digital Twin.

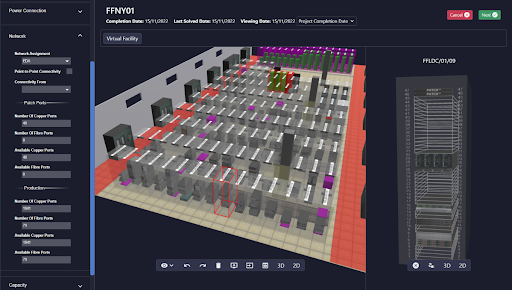

DataCenter Asset Twin (pictured above) has a 3D interface with drag-and-drop functions to manage IT moves, adds, and changes (MACs). Operators can use this offering to track capacity utilization and availability, as well as to manage point-to-point network port connections, panel schedules, and power connectivity. The software integrates with real-time monitoring systems, configuration management databases (CMDBs), and more.

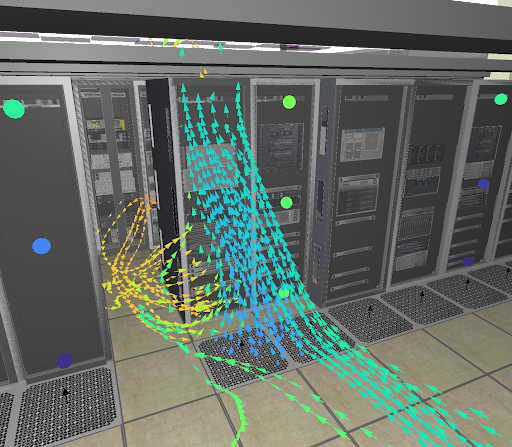

DataCenter Digital Twin (pictured above) builds on the Asset Twin feature set with automated CFD simulation and reporting. The templated reporting in this offering includes result planes (thermal maps), zone-level analysis, and industry metrics such as ASHRAE compliance and power usage effectiveness (PUE).
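Both metrics rest on standard definitions. PUE is total facility energy divided by IT equipment energy, so a value of 1.0 would mean every kilowatt-hour goes to IT. ASHRAE’s thermal guidelines recommend server inlet air temperatures between 18 °C and 27 °C. The sketch below computes both from raw readings. It is not how the Cadence reports are generated, the function names are hypothetical, and it checks only dry-bulb inlet temperature, ignoring the humidity limits of the full ASHRAE envelope.

```python
from __future__ import annotations

from collections.abc import Iterable

# ASHRAE recommended dry-bulb inlet range in degrees C (humidity limits not modeled).
ASHRAE_RECOMMENDED_C = (18.0, 27.0)


def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Power usage effectiveness: total facility energy / IT equipment energy."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    return total_facility_kwh / it_equipment_kwh


def ashrae_compliance(
    inlet_temps_c: Iterable[float],
    low: float = ASHRAE_RECOMMENDED_C[0],
    high: float = ASHRAE_RECOMMENDED_C[1],
) -> float:
    """Fraction of inlet readings inside the recommended range (inclusive)."""
    temps = list(inlet_temps_c)
    if not temps:
        raise ValueError("no inlet readings supplied")
    within = sum(1 for t in temps if low <= t <= high)
    return within / len(temps)


if __name__ == "__main__":
    print(pue(1500, 1000))                                 # 1.5
    print(ashrae_compliance([20.5, 24.0, 27.8, 17.2]))     # 0.5
```

A simulated result plane provides far more inlet points than physical sensors do, which is why a model-based compliance figure can catch racks that a sparse sensor network misses.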

Operators can compare simulation results side by side to make proactive, optimized decisions, or plot 3D streamlines to trace the path of airflow and uncover the “why” behind thermal issues. Airflow streamlines (pictured below) are particularly useful because they visualize how airflow is delivered to devices, often uncovering issues that environmental monitoring alone would miss. That perspective can point the way to a solution for the problem at hand. Operators can also use this offering to stress-test planned deployments and confirm thermal compliance before they reach production.

Successful implementation and proactive practices

The true value of a digital twin model lies in its ability to accurately mirror the operational activity of its physical counterpart. To be most useful in operations, digital twins must have seamless access to high-quality data and be integrated into an organization’s existing workflows.

As such, Cadence has created data center digital twin solutions that facilitate this integration process to ensure successful implementation and achieve proactive practices in operations. See the software in action by viewing our recent webinar.
