I have a bone to pick with the data center world. I have lived in the data center my entire professional career. I’ve worked in ASPs (remember those?), colos, enterprise and hyperscale data centers, and the one thing that ALWAYS confounded me was the complexity of racking servers and then having to remotely manage them while ensuring that the application stayed unaffected.

Hot under the collar

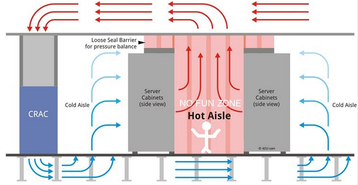

First off, let me tell you about hot aisle containment. I recently read that there are two kinds of jobs: those where you shower before and those where you shower after. Whoever wrote that has never worked in a data center. If you’ve ever had to plug anything into the back of a rack that faces a contained hot aisle, you know the discomfort.

It makes absolutely no sense to have to manage a rack from both the front and the back. The Open Compute Project has shown a better way, and even though its 21-inch rack width is weird, it’s a form factor.

Barbaric management

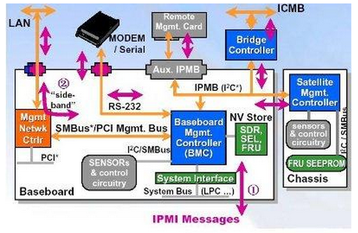

Next comes hardware management. This diagram (left) shows the Intelligent Platform Management Interface (IPMI). It is worse than SNMP, and this is just the high-level architecture. If I showed you the IPMI packet structure, you’d be shocked!

To quote my VP of Engineering, “It is barbaric.” It’s not just arcane, old and scary. It’s hardly useful in the modern data center beyond standing in for a person next to the server whom you occasionally call to perform some physical action on it. If you’ve worked in this industry as long as I have, you have said “hit it on the head” to mean rebooting a server, or heard someone say it within earshot. Barbaric indeed.

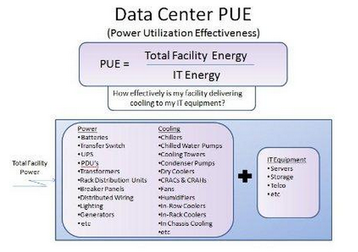

What is PUE for?

Last but certainly not least, we have efficiency. Power Usage Effectiveness (PUE) is total facility power divided by IT equipment power, so it says nothing about what that IT equipment is doing: you could literally plug in a rack full of hair dryers and measure PUE. What exactly does PUE have to do with your cost per square foot? Cost per kWh? Nothing!
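To make the hair-dryer point concrete, here is a minimal Python sketch, assuming the standard definition of PUE as total facility power divided by IT equipment power. The rack figures and the `racks` dictionary are made up for illustration: two racks with the same electrical draw and the same cooling overhead get the same PUE, whether the load is serving customers or drying hair.

```python
def pue(total_facility_kw: float, it_equipment_kw: float) -> float:
    """Power Usage Effectiveness: total facility power / IT equipment power."""
    if it_equipment_kw <= 0:
        raise ValueError("IT equipment power must be positive")
    return total_facility_kw / it_equipment_kw


# Hypothetical racks: 10 kW of "IT" load each, plus 2 kW of cooling and
# power-distribution overhead attributed to each rack.
racks = {
    "web servers": {"it_kw": 10.0, "overhead_kw": 2.0},
    "hair dryers": {"it_kw": 10.0, "overhead_kw": 2.0},
}

results = {
    name: pue(r["it_kw"] + r["overhead_kw"], r["it_kw"])
    for name, r in racks.items()
}

for name, value in results.items():
    # PUE only sees watts, so both racks report the same value.
    print(f"{name}: PUE = {value:.2f}")  # → 1.20 for both
```

Nothing in the formula refers to the workload, so PUE alone cannot tell a productive rack from a useless one.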

You are in a data center to run a workload, so why shouldn’t your efficiency metric put that workload into context? Why can’t we combine PUE with server metrics and sensor data to give you PWD (performance per watt per dollar)?
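PWD is not a standardized metric and no formula for it is given here, so the following is only a sketch under stated assumptions: `performance` is whatever unit of useful work your workload reports (requests per second in this example), facility power is IT power scaled by PUE, and `cost_per_hour_usd` is a hypothetical all-in hourly cost for the rack. The names `RackSample` and `pwd` are illustrative and not part of any Vapor IO or Open DCRE API.

```python
from dataclasses import dataclass


@dataclass
class RackSample:
    """One measurement window for a rack (all fields are assumed inputs)."""
    name: str
    performance: float        # useful work, e.g. requests/s (assumed unit)
    it_power_w: float         # power drawn by the IT gear in the rack
    pue: float                # facility PUE over the same window
    cost_per_hour_usd: float  # hypothetical all-in hourly cost of the rack


def pwd(sample: RackSample) -> float:
    """Hypothetical performance per watt per dollar.

    Facility power is IT power scaled by PUE, so cooling and distribution
    overhead counts against the workload instead of being reported separately.
    """
    facility_power_w = sample.it_power_w * sample.pue
    if facility_power_w <= 0 or sample.cost_per_hour_usd <= 0:
        raise ValueError("power and cost must be positive")
    return sample.performance / facility_power_w / sample.cost_per_hour_usd


samples = [
    RackSample("web tier", performance=50_000, it_power_w=10_000, pue=1.2, cost_per_hour_usd=3.0),
    RackSample("hair dryers", performance=0, it_power_w=10_000, pue=1.2, cost_per_hour_usd=3.0),
]

for s in samples:
    # Same watts and same PUE, but only one rack does useful work.
    print(f"{s.name}: PWD = {pwd(s):.4f}")
```

Folding PUE into the denominator is one design choice among several; you could just as well keep facility overhead and cost as separate dimensions. Unlike PUE alone, this assumed metric drops to zero for a rack that does no useful work.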

/end rant

/begin solution

Open Rack: front access

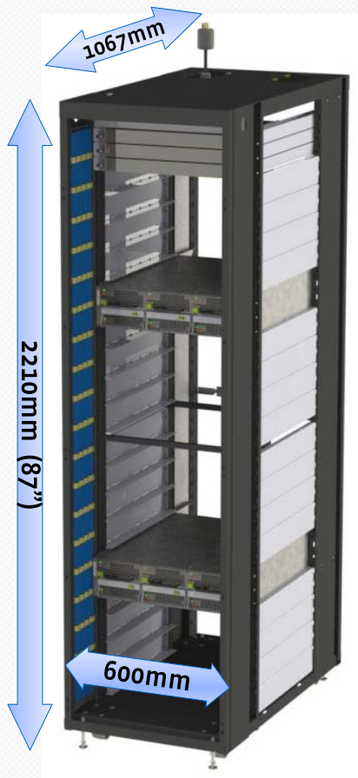

Open Rack is a great starting place. By moving serviceability and IO to the front, there is no longer a need to shower both before AND after work (unless you go to the gym after work, in which case a shower is advisable).

I think many improvements have been made to the rack, but we shouldn’t stop there. The rack is a unit of scale, and from where I sit that unit is too small (sorry, Intel). Vapor IO will completely isolate all of your critical environment support gear, power and backup, freeing the rack to actually house IT gear and freeing your building to be anything you want it to be.

Open DCRE fixes IPMI

Free as in code and free as in thought, Open DCRE does away with the complexities of IPMI by (a) making it simple to look at your infrastructure through an abstraction layer and (b) making it easy to put that data into context.

Limits of PUE

Finally, we have good ol’ PUE. It is sort of useful on its own. But below 1.1? Probably not.

As a basis for PWD (performance per watt per dollar)? Of course. For the industry, this could be the holy grail of DCSO (Data Center Service Optimization).

Several companies will tell you they can give you this today. In reality, they have done one of three things:

- They’ve glued old technology together in a best-effort engineering exercise to make something look integrated, but they have really just created additional management domains and complexity.

- They’ve focused on just the software.

- They’ve focused on just the hardware.

Houston, we have a problem. First we need to integrate features, and as an industry I give us a B+ on that. After that, we need to take a holistic and native view of the data center, and that is where we have massive room to improve.

Remember, integration is where innovation is born. Native is always where innovation takes us. Help us bring the data center into the 21st century!

Cole Crawford is CEO of Vapor IO