Controls on the digital infrastructure behind artificial intelligence systems could be an effective way to prevent AI from being misused, a new report claims.

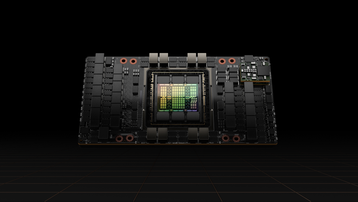

The paper, written by a group of 19 academics and backed by ChatGPT-maker OpenAI, suggests policy measures such as a global registry for tracking the flow of chips destined for AI supercomputers, and “compute caps” – built-in limits to the number of chips each AI chip can connect with.

Regulating AI and the role of compute power

The regulation of AI has become a hot topic among politicians over the last 18 months as interest in the technology has boomed.

Different approaches to controlling large language models such as OpenAI’s GPT-4 and Google’s Gemini, which have the potential to produce harmful outcomes, are being pursued in different parts of the world. The European Union is drawing up an overarching AI Act to regulate the systems, while countries such as the US and UK have taken a more hands-off approach.

Most regulation has focused on the AI systems themselves, rather than the compute power behind them. The new research, entitled Computing Power and the Governance of Artificial Intelligence, says AI chips and data centers offer more effective targets for scrutiny and AI safety governance. This is because these assets have to be physically possessed, it said, whereas the other elements of the “AI triad” – data and algorithms – can, in theory, be endlessly duplicated and disseminated.

The report was co-led by three Cambridge University institutes (the Leverhulme Centre for the Future of Intelligence (LCFI), the Centre for the Study of Existential Risk (CSER), and the Bennett Institute for Public Policy), along with OpenAI and the research organization Centre for the Governance of AI.

“Artificial intelligence has made startling progress in the last decade, much of which has been enabled by the sharp increase in computing power applied to training algorithms,” said Haydn Belfield, a co-lead author of the report from Cambridge’s LCFI.

“Governments are rightly concerned about the potential consequences of AI, and looking at how to regulate the technology, but data and algorithms are intangible and difficult to control.”

In contrast, Belfield said computing hardware is visible and quantifiable, and “its physical nature means restrictions can be imposed in a way that might soon be nearly impossible with more virtual elements of AI.”

How AI compute power could be regulated

The report sets out possible directions for compute governance. Belfield drew an analogy between AI training and uranium enrichment.

“International regulation of nuclear supplies focuses on a vital input that has to go through a lengthy, difficult, and expensive process,” he said. “A focus on compute would allow AI regulation to do the same.”

Policy ideas suggested in the report are divided into three areas: increasing the global visibility of AI computing, allocating compute resources for the greatest benefit to society, and enforcing restrictions on computing power.

The authors argue that a regularly audited international AI chip registry, requiring chip producers, sellers, and resellers to report all transfers, would provide precise information on the amount of compute possessed by nations and corporations at any one time. A unique identifier could be added to each chip to prevent industrial espionage and “chip smuggling,” though they concede this could also lead to a black market of untraceable, or “ghost,” chips.

“Governments already track many economic transactions, so it makes sense to increase monitoring of a commodity as rare and powerful as an advanced AI chip,” said Belfield.

Other suggestions to increase visibility – and accountability – include reporting large-scale AI training by cloud computing providers and privacy-preserving “workload monitoring” to help prevent an arms race if massive compute investments are made without enough transparency.

“Users of compute will engage in a mixture of beneficial, benign, and harmful activities, and determined groups will find ways to circumvent restrictions,” said Belfield.

“Regulators will need to create checks and balances that thwart malicious or misguided uses of AI computing.”

These controls, the academics suggested, could include physical limits on chip-to-chip networking, or cryptographic technology that allows for remote disabling of AI chips in extreme circumstances.

AI risk mitigation policies might see compute prioritized for research most likely to benefit society – from green energy to health and education. This could even take the form of major international AI “megaprojects” that tackle global issues by pooling compute resources.

Is regulating AI compute power a realistic prospect?

The involvement of OpenAI in the paper (Girish Sastry, a policy researcher at the AI lab, is listed as a co-author) may raise some eyebrows. Sam Altman, the company’s CEO, is said to be attempting to raise $7 trillion to create a vast network of AI chip production facilities, a move that could flood the market with even more hardware.

The report’s authors said their policy suggestions are “exploratory” rather than fully fledged proposals and that they all carry potential downsides, from risks of proprietary data leaks to negative economic impacts and the hampering of positive AI development.

But LCFI’s Belfield added: “Trying to govern AI models as they are deployed could prove futile, like chasing shadows. Those seeking to establish AI regulation should look upstream to compute, the source of the power driving the AI revolution.

“If compute remains ungoverned it poses severe risks to society.”