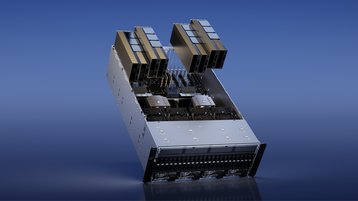

Oracle Cloud Infrastructure (OCI) has made Nvidia H100 Tensor Core GPUs generally available on OCI Compute.

The H100 GPUs will be available as OCI Compute bare-metal instances, targeting large-scale AI and high-performance computing applications.

The H100s have been found to improve AI inference performance by around 30 times, and AI training by four times, compared with Nvidia's A100 GPUs, but they are notoriously hard to get hold of.

The OCI Compute shape includes eight H100 GPUs, each with 80GB of HBM3 GPU memory and 3.2Tbps of bisection bandwidth. The shape also includes 16 local NVMe drives of 3.84TB each and 4th Gen Intel Xeon processors with 112 cores and 2TB of system memory.
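For a rough sense of scale, the per-unit figures quoted above can be multiplied out into per-shape totals. The short Python sketch below does only that arithmetic; the variable names are illustrative, and the totals are derived from the numbers in this article rather than taken from an Oracle specification sheet.

```python
# Per-unit figures as quoted in the article (not from an official spec sheet).
GPUS_PER_SHAPE = 8       # H100 GPUs in one bare-metal shape
GPU_MEMORY_GB = 80       # memory per GPU, in GB
NVME_DRIVES = 16         # local NVMe drives in one shape
NVME_DRIVE_TB = 3.84     # capacity per drive, in TB

# Aggregate capacity of a single shape.
total_gpu_memory_gb = GPUS_PER_SHAPE * GPU_MEMORY_GB
total_nvme_tb = NVME_DRIVES * NVME_DRIVE_TB

print(f"GPU memory per shape: {total_gpu_memory_gb} GB")
print(f"Local NVMe per shape: {total_nvme_tb:.2f} TB")
```

On these figures, a single shape works out to 640GB of GPU memory and a little over 61TB of local NVMe storage.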

Customers can also use OCI Supercluster to spin up tens of thousands of H100s connected over a high-performance network.

In addition to the H100 launch, OCI Compute instances with the Nvidia L40S GPU, which is based on the Ada Lovelace architecture, will be generally available in early 2024.

A universal GPU for the data center, the chip is designed for large language model (LLM) inference and training, visual computing, and video applications.

These instances will offer an alternative to the H100 and A100 instances for small to medium-sized AI projects, as well as for graphics and video compute tasks.

The L40S can offer a 20 percent performance increase for generative AI workloads and a 70 percent improvement in fine-tuning AI models compared to the A100.

OCI is not alone in deploying the H100s. In August, Google announced that it was launching new A3 virtual machines that similarly feature H100 GPUs and 4th Gen Xeon Scalable processors, in deployments that can grow to 26,000 H100s. AWS began offering the GPUs in July as part of its EC2 P5 instances.

Nvidia has seen its valuation soar past $1 trillion on the back of AI demand, but there has also been a significant GPU shortage. During the shortage, Nvidia offered priority access to smaller cloud companies such as CoreWeave and Lambda Labs. The chip maker has since invested in the former and is in talks to invest in the latter.