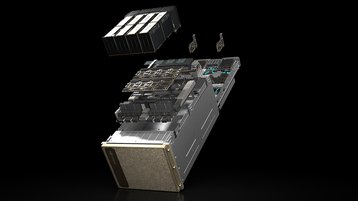

Amazon Web Services now offers customers access to Nvidia's latest H100 GPUs.

The GPUs, which are in short supply, are available through Amazon EC2 P5 instances. With P5 instances, machine learning applications can use the Nvidia Collective Communications Library (NCCL) to scale across up to 20,000 H100 GPUs in a single EC2 UltraCluster.

Nvidia and Amazon claim that P5 instances are up to six times faster at training large language models than the A100-based EC2 P4 instances, and can cut training costs by 40 percent.

“AWS and Nvidia have collaborated for more than 12 years to deliver large-scale, cost-effective GPU-based solutions on demand for various applications such as AI/ML, graphics, gaming, and HPC,” said Adam Selipsky, CEO at AWS.

“AWS has unmatched experience delivering GPU-based instances that have pushed the scalability envelope with each successive generation, with many customers scaling machine learning training workloads to more than 10,000 GPUs today. With second-generation EFA [Elastic Fabric Adapter], customers will be able to scale their P5 instances to over 20,000 Nvidia H100 GPUs, bringing supercomputer capabilities on demand to customers ranging from startups to large enterprises.”

Amazon cited a number of customers already using P5 instances, including Cohere, Hugging Face, and Pinterest. Notably, AWS also quotes Anthropic, an AI startup that had announced a partnership with Google Cloud after Google invested in it.

“While the large, general AI systems of today can have significant benefits, they can also be unpredictable, unreliable, and opaque, so our goal is to make progress on these issues and deploy systems that people find useful,” said Tom Brown, co-founder of Anthropic.

“We expect P5 instances to deliver substantial price-performance benefits over P4d instances, and they’ll be available at the massive scale required for building next-generation LLMs and related products.”

Hyperscalers have struggled to procure Nvidia's latest GPUs due to supply shortages. Nvidia, in turn, has given priority access to smaller cloud providers that aren't building their own AI chips, such as CoreWeave and Lambda Labs, and has since invested in them.