Microsoft is secretly developing its own internal artificial intelligence chip, codenamed Athena.

The Information reports that the semiconductor has been in the works since 2019, and is available to a small group of Microsoft and OpenAI employees for testing.

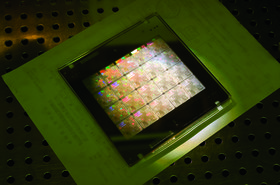

Built on a 5nm process node, Athena is designed for training software such as large language models (LLMs), which are core to the generative AI surge seen in recent months. But the growth of those models has been held back by shortages of GPUs from Nvidia, the market leader in AI training chips.

The Information previously reported that the shortages led Microsoft to ration its GPUs for some internal teams.

Nvidia processors are also sold with a high mark-up, so internal chips could be a cheaper way to run the same workloads. However, beyond sheer horsepower, Nvidia's chips have a significant software advantage, with the majority of AI workloads designed for them, and decades of developer heritage.

Microsoft could deploy Athena for wider use within Microsoft and OpenAI next year, but is reportedly debating whether to also make the chips available to Azure customers more broadly.

Cloud rival Google has developed its own AI chip family, the TPUs, which are widely seen as the only current rival chips for developing LLMs. Amazon has its own alternative product line, Trainium.

Both are available only through their respective clouds, and both have found customers. Beyond the companies' own use, the chips are also believed to help them negotiate better deals with Nvidia.
