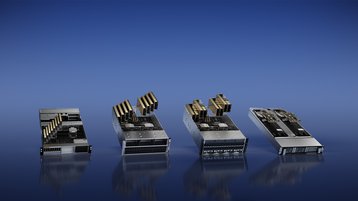

Content delivery network (CDN) company Cloudflare plans to deploy Nvidia GPUs across its global Edge network.

The platform is aimed at artificial intelligence applications, in particular generative AI models such as large language models. Cloudflare did not disclose which Nvidia GPU model it will use.

"AI inference on a network is going to be the sweet spot for many businesses: private data stays close to wherever users physically are, while still being extremely cost-effective to run because it’s nearby," Matthew Prince, CEO and co-founder of Cloudflare, said. "With Nvidia’s state-of-the-art GPU technology on our global network, we’re making AI inference - that was previously out of reach for many customers - accessible and affordable globally."

Cloudflare will also deploy Nvidia Ethernet switches and use Nvidia's full-stack inference software, including Nvidia TensorRT-LLM and Nvidia Triton Inference Server.

The GPUs will be deployed in over 100 cities by the end of 2023, and "nearly everywhere Cloudflare’s network extends" by the end of 2024, the company said. Cloudflare operates data centers in more than 300 cities across the world.

"Nvidia's inference platform is critical to powering the next wave of generative AI applications," said Ian Buck, VP of hyperscale and HPC at Nvidia.

"With Nvidia GPUs and Nvidia AI software available on Cloudflare, businesses will be able to create responsive new customer experiences and drive innovation across every industry."

At launch, the AI Edge network will not support customer-provided models. It will support only Meta's Llama 2 7B and M2M100-1.2B, OpenAI's Whisper, Hugging Face's DistilBERT-sst-2-int8, Microsoft's ResNet-50, and BAAI's bge-base-en-v1.5.

Cloudflare plans to add more models in the future, with the help of Hugging Face.