At the core of enterprise data centers for the past few decades have been clusters of commercial, off-the-shelf processors based, in large measure, upon the architecture of processors built for PCs. But the PC market worldwide is waning; mobile processors are moving to new, lower-power designs; and cloud architectures are starting to have a physical impact on the architecture of data centers.

Nowhere is this fact more evident than in a group of announcements from the recent Computex conference in Taiwan. At the center of the spotlight was Cavium, the maker of LiquidIO adapters that cloud service providers use to deploy software-defined network (SDN) applications. Cavium announced it was entering the 64-bit ARMv8 processor business just last October.

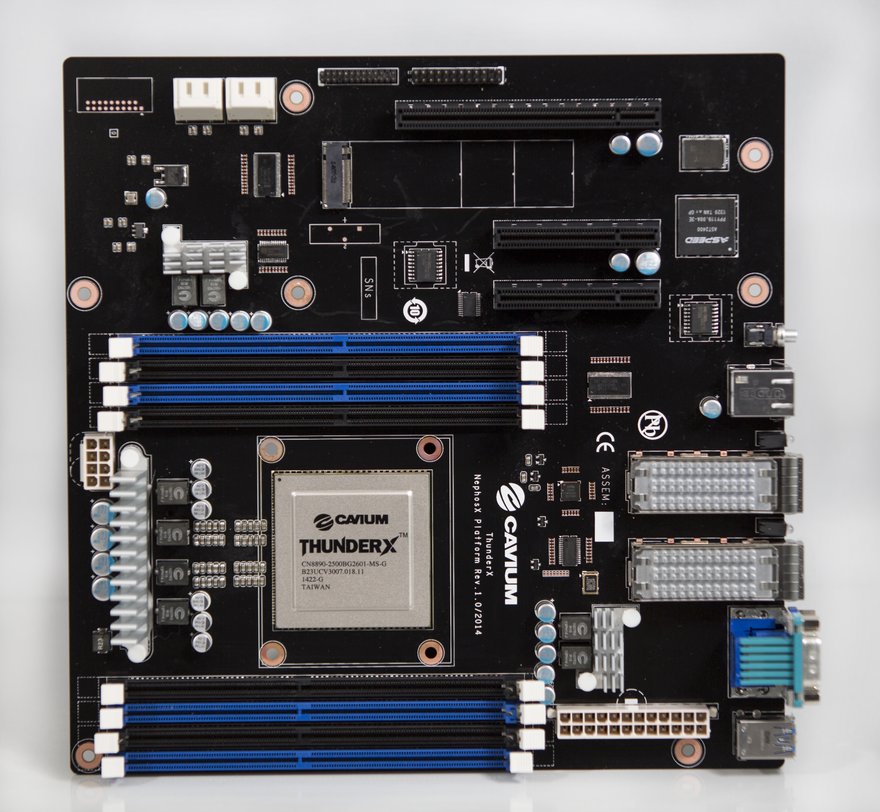

That fact in itself might not count for much, were it not for Cavium’s forthcoming ThunderX processors, which will be workload-optimized. This means they will be delivered as systems-on-chip (SoCs) containing libraries of functions that commonly used public cloud applications can put to use at hyperscale. That potentially means hundreds of millions of threads that public cloud providers can readily run at any one time, essentially offloaded from the software stack and the overburdened CPU.

Drawing on PCI

This is what makes a ThunderX-endowed server inherently different from a typical Intel Xeon or AMD Opteron-based cloud server cluster. It will be specifically designed to run cloud applications. “Data centers traditionally have been very homogeneous,” says Gopal Hegde, VP and general manager for Cavium’s data center processor group. Every server in the data center to date is based on the same x86/x64 platform, he says (although IBM would beg to differ), and customization typically comes only by way of plug-in PCIe cards. Cavium knows this well: PCI cards are the business that put it on the map.

PCI cards introduce latencies that might not amount to much lost time when scaled to the thousands, or even tens of thousands, but at much higher orders of magnitude they become bottlenecks. “Doing what the traditional servers did does not give you the best-in-class experience,” Hegde says.

He adds that cloud architectures are demanding that processors become adaptable in more innovative, practical and potent ways than just hanging more cards out the side of the server box.

Optimizing for applications

“They were one-size-fits-all, and they were customized using add-in cards,” Hegde says. “It did not make sense for somebody to build a processor that was very specific for a workload, because there are so many applications, and each application is used by fewer people. Now, the cloud is changing all that. And when you have an application that is being used by hundreds of millions of users, it makes sense to physically optimize the user experience for that particular application. People are building huge data centers, and putting hundreds of thousands, in some cases millions, of servers inside to address one particular application that is used by so many users.”

Just what specific workloads? The answer to that is why Cavium’s announcements came in a well-orchestrated group.

First, Cavium will be working with the Xen Project, joining the Xen Advisory Board to learn about and contribute to Xen hypervisors. To that end, Cavium will also be working with Xen’s principal technology contributor, Citrix. Second, Cavium will be working with Oracle to create workload optimizations for the forthcoming Java SE 8. This could be huge for cloud Platform-as-a-Service offerings such as Amazon’s Elastic Beanstalk and Salesforce’s Heroku. Third, OpenSUSE announced Tuesday that it has already been partnering with Cavium on workloads that may already be in the process of being optimized, mentioning both the Xen and KVM hypervisors. The two groups began their partnership a full two years ago, OpenSUSE said. Fourth, as if to ice the proverbial cake, Cavium said it has joined the Open Compute Project to create open-rack compatible motherboard specifications for the ARMv8 architecture, and it will contribute insights and guidance along the way.

“If you step back and look at the number of cloud workloads, it’s not huge,” Hegde says. “And if you look at the lowest-common-denominator requirement for these workloads, and you implement them in hardware, that means it’s a workload-optimized processor. What are all the things that a distributed storage application needs? It needs lots of storage interfaces to support things like data protection or encryption capabilities or compression. In a traditional server, these are done in software. When you run these in software you’re essentially using up the CPU core capacity that could have been used for your compute workload.”
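Hegde’s point about software overhead can be made concrete with a rough sketch. The example below assumes Python’s standard `zlib` and `hashlib` modules as stand-ins for the compression and data-protection steps of a storage workload; the function name `software_storage_path` and the payload are hypothetical and do not come from Cavium. It simply measures the CPU time a general-purpose core spends on that work.

```python
import hashlib
import time
import zlib


def software_storage_path(payload: bytes) -> tuple[bytes, str, float]:
    """Compress and checksum a payload in software, on the CPU.

    Returns the compressed bytes, a SHA-256 hex digest of them, and the
    CPU time (in seconds) this process spent doing the work.
    """
    start = time.process_time()
    compressed = zlib.compress(payload, 6)           # software compression
    digest = hashlib.sha256(compressed).hexdigest()  # software integrity check
    cpu_seconds = time.process_time() - start
    return compressed, digest, cpu_seconds


if __name__ == "__main__":
    # Illustrative payload of about 1 MB; a real storage block would differ.
    block = b"cloud storage block " * 50_000
    compressed, digest, cpu_seconds = software_storage_path(block)
    print(f"{len(block)} -> {len(compressed)} bytes, "
          f"{cpu_seconds:.4f} s of CPU time")
```

Whatever CPU time this reports is time the core could not spend on the compute workload itself. In a workload-optimized SoC of the kind Hegde describes, such functions would instead be implemented in hardware.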

It may not have made sense five years ago to build SoCs for these specific functions because the scale of payoff from reclaimed latencies would not have been great. The public cloud multiplies that payoff tremendously, meaning Cavium has a chance of becoming the first semiconductor manufacturer to effectively monetize it.

This article first appeared in FOCUS 36.