Let Soni Jiandani talk, and she’ll tell you stories from the early days of the Internet.

“I witnessed the Internet revolution, it took about a decade and a half and it was unbelievable,” she recalls. Now, as she builds out AMD’s networking infrastructure group and ramps up competition against Nvidia, she sees echoes of that transformative time.

“I could not be more excited,” she says. “When you get older, it's harder to get excited, but this is an exciting moment for our industry.”

Joining AMD

After nearly two decades at Cisco, interspersed with stints at various networking businesses, Jiandani cofounded Pensando Systems in 2017.

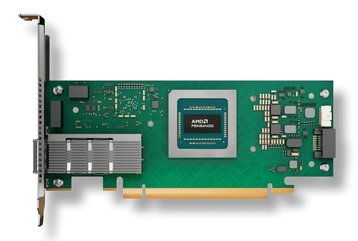

The company develops data processing units (DPUs), also known as SmartNICs. These offload networking tasks from the CPU and GPU, freeing them to focus on their primary workloads.

Following Nvidia's acquisition of Pensando's larger networking rival Mellanox, AMD acquired Pensando in 2022 for $1.9 billion, months before the ChatGPT moment proved the need for tightly networked systems.

"It's been quite a whirlwind experience for us," Jiandani says. "Overall, the journey has been a good one - we had customers who have looked at us as a startup and said, 'I'm not too sure I want to do business with a startup, because I don't know whose hands you will be in.' So by being part of the AMD family, they know that AMD is serious about building AI systems."

'Systems' is a word Jiandani repeats throughout our conversation. "What makes this team very unique is that we are system-level people,” she says. “We don't just think software, device, or componentry, we think systems.”

She continues: “When you're building a distributed system, which is what a network is, you cannot think about it in isolation. You have to think about ‘how will these talk to each other? How will they interoperate with each other? What do I do at the time of failure? How do I recover from failure? How do I make it serviceable? How do I give them observability? How do I make it a feedback loop so that I can add more intelligence?’ It's a unique skill that we have in our organization. AMD has been wonderful by bringing their CPU expertise and their GPU expertise together with our DPU expertise.”

Pensando and AMD's networking efforts

Pensando is now key to AMD’s networking efforts, but remains a small team. "I think it's very important that we're a smaller team, so we can move,” Jiandani says. “We are more nimble. We're not thousands of people trying to get integrated into tens of thousands of people. We are hundreds of people that are being brought in, and so we have the ability to be more agile.

"It's happening at such a high speed that I need to be agile."

Of course, as we speak, AMD is preparing to integrate a significantly larger business. The company announced plans last August to acquire ZT Systems for $4.9bn, with the deal closing in March.

The hyperscale server maker has several thousand employees, but AMD has said it will spin out and sell ZT's manufacturing assets and keep its systems design capabilities.

"ZT Systems is going to help us at a company level to think about rolling the system out at a rack level," Jiandani says.

"So that is the team that brings it all together, brings our GPU systems, our networking assets, our CPU assets, the whole liquid cooling and air cooling systems, into a reference design architecture. They're all part of the data center group."

As a division, AMD's data center business has been an oddity. It has posted record growth, with revenue nearly doubling in 2024 alone, yet it is still seen as an also-ran next to the blowout earnings of rival Nvidia.

But just as it hopes to eat away at Nvidia's GPU lead with a more open approach, AMD is betting that openness at the systems level will win it customers. "Just to touch on what is different to Mellanox," colleague Eddie Tan adds, "when markets say 'I need a choice,' I don't need to say, 'this is the rack, take it or leave it.' I'm not going to say 'you need to buy every component from me.'"

Nvidia has primarily favored InfiniBand, the high-performance computing interconnect standard of which Mellanox is the only remaining supplier, while AMD has gone all in on Ethernet.

“Ethernet has proven to be a scalable solution,” Jiandani says. “Some of the customers are abandoning the proprietary just because it's not scalable. Ethernet, given the sheer consumption, is coming at a completely different cost point compared to InfiniBand. So at the minimum, yeah, you get a cost benefit.”

Post-generative AI products

AMD is one of the most vocal members of the Ultra Ethernet Consortium (UEC). And while the UEC has delayed the release of version 1.0 of its specification, AMD has already unveiled the UEC-compatible Pollara 400 AI NIC. It should be noted that Nvidia also offers Ethernet products and quietly joined the UEC last year. With InfiniBand giving it more control and higher margins, Nvidia did not publicly announce the move.

“If you are an enterprise or a tier two cloud provider, you want an out-of-box experience as you are building scale-out networks,” Jiandani says. “So, with Pollara 400 being UEC-compliant from day one, you don't have to worry about whether your developers have to build a scalable implementation of a back-end network. Whereas, if you are a tier one hyperscale customer, you have your own unique transport and you want that AI system to plug into that and into your preferred choice vendor of ethernet switches. We can cater to both.”

The Pollara 400 NIC for back-end networking and the Salina 400 DPU for front-end networking are Pensando's first truly post-generative AI products. "We have been shipping [Salina-predecessor] Elba since 2022; it's in production at Oracle Cloud, Microsoft Azure, and IBM Cloud," Jiandani says.

“The bulk of these implementations have gone live at scale over the last year to 18 months and, in some cases, we expect that DPU will be in production with ongoing implementation through calendar year 2027, so five years. In other cases, the customer will be cutting over to Salina within the next 12 months, so the cutover could be three years as a life span.”

In Microsoft's case, it is unclear how long the company will use Elba and Salina. It has long developed its own FPGA-based SmartNIC: "It is no secret Microsoft has an in-house FPGA SmartNIC," Tan says. "The problem with FPGA is that it's not as flexible or fast, and it can't help you support concurrent services at a scale. That's why, even despite the fact they already have FPGA SmartNIC, they still come to us to address some challenges they're facing."

However, soon after our conversation, Microsoft announced that it was deploying its own in-house DPU, having acquired DPU maker Fungible some two years earlier. It is too early to say whether it will move fully to custom silicon or use it to keep pressure on pricing, as cloud companies have used their own AI chips to extract concessions from GPU makers.

What is clear, however, is that Microsoft and other cloud and AI rivals are set to deploy clusters at a scale previously unfathomable. “We know that the 100,000-GPU number cluster is pretty well documented,” Jiandani says. “And when we are talking to some of the largest hyperscalers, we're hearing of numbers where they want to get up to a million GPUs, based on the growth of the large language models.”

Tan adds: "And very soon you will find the cluster is going to span even across the data center campus. How do you even support that? Ubiquitous Ethernet networking."

Supporting such rapid growth, Jiandani argues, requires thinking at the level of the whole ecosystem rather than through the vision of a single company. "I think with the models getting larger and larger, our ability to build a more balanced system is very important," she says. "No one company is going to be able to do this alone. And that's why partnerships and the ecosystem and the close working relationships we have collectively as an industry are key."