As rack densities rise and chips get hotter, some operators are turning to immersion-based, open-tub liquid cooling to beat the heat.

In an open-tub setup, there are two distinct types of coolant: single-phase and two-phase. "Phase" refers to the state the coolant is in at any given moment during the cooling loop.

Single-phase coolants remain liquid throughout, while two-phase coolants change from a liquid to a gas as heat is transferred. We will explore both types through two real-world deployments.

This feature appeared in the latest issue of DCD Magazine.

Single-Phase Immersion Cooling – DUG McCloud Data Center, Houston, TX

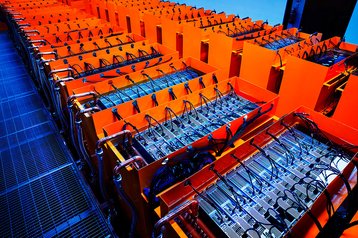

In 2019, oil and gas computing specialist DownUnder GeoSolutions (DUG) opened its 15MW 'Bubba' supercomputer in a 22,000 sq ft (2,044 sq m) data hall built in partnership with Skybox Data Centers in Houston, Texas.

Delivering 250 petaflops (single precision) once fully deployed, DUG's 15MW high-performance computing system requires specialized power and heat-rejection systems to operate.

Designed in-house, the DUG HPC's compute elements are cooled entirely by complete immersion in a dielectric fluid, specifically selected to operate at raised temperatures. The servers are slotted vertically into an open tub (essentially a rack on its back), and their heatsinks are removed so that the chips are in direct contact with the fluid.

The fluid is non-toxic, non-flammable, biodegradable, non-polar, has low viscosity and, crucially, will not conduct electricity.

The heat exchangers are submerged in the tank alongside the computer equipment, so no dielectric fluid ever leaves the tank. The system also uses a centralized power supply.

The deployment brings a swathe of benefits, from considerably reducing the facility's total power consumption to massively reducing the cooling system's complexity. Mark Lommers, chief engineer at DUG and the designer of this solution, told DCD that with traditional chilled-water cooling systems, "for every 1MW of real-time compute you want to use, you end up using 1.55MW of power or thereabout."

In an immersion cooling system, much of the power-hungry equipment is eliminated, server fans being a prime example. Lommers added that "there are no chilled water pumps and there are no chillers that get involved because there's no below room temperature water involved," concluding that "the actual total power that we get from that is only 1.014MW, which is a big change over the 1.55MW that we had before."
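Lommers' figures can be read as a PUE-style ratio of total facility power to compute power. The short Python sketch below works through that arithmetic; the function and constant names are hypothetical, and the two ratios are simply his quoted "per 1MW of compute" numbers rather than measured PUE values.

```python
# Ratios of total facility power to compute (IT) power, taken from
# Lommers' quoted figures for 1MW of compute. Names are illustrative only.
CHILLED_WATER_RATIO = 1.55
IMMERSION_RATIO = 1.014


def total_power_mw(it_load_mw: float, overhead_ratio: float) -> float:
    """Total facility power for a given compute load, using a PUE-style ratio."""
    return it_load_mw * overhead_ratio


def savings(it_load_mw: float) -> tuple[float, float]:
    """Return (MW saved, fractional reduction) when moving from chilled water to immersion."""
    chilled = total_power_mw(it_load_mw, CHILLED_WATER_RATIO)
    immersion = total_power_mw(it_load_mw, IMMERSION_RATIO)
    saved = chilled - immersion
    return saved, saved / chilled


saved, fraction = savings(1.0)
print(f"{saved:.3f} MW saved per MW of compute ({fraction:.1%} less total power)")
```

For 1MW of compute this works out to roughly 0.536MW saved, or about a 35 percent cut in total power, which is the "big change" Lommers describes.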

The cooling loop is also massively simplified, and therefore more reliable. Because it has to cope with fewer temperature changes, fewer controllers need to work in tandem to keep it running efficiently, which makes the overall system more robust.

As the components sit below the fluid level, the company claims that there is no component oxidation or fouling. "We see a very, very high benefit in reduced maintenance costs and reduced equipment failure rate as well," Lommers said.

Two-Phase Immersion Cooling – Microsoft Public Cloud

In 2021, Microsoft deployed a two-phase immersion cooling solution for its public cloud workloads, developed in partnership with Taiwanese server manufacturer Wiwynn.

At the time, the company said that “emails and other communications sent between Microsoft employees are literally making liquid boil inside a steel holding tank packed with computer servers at this data center on the eastern bank of the Columbia River.”

Inside Microsoft's steel holding tank, the heat generated by the bare chips makes the fluid boil. As the vapor rises, it meets a condenser coil in the lid of the tank, where it condenses back into a liquid and falls back into the tub, effectively creating a closed-loop cooling system.

As with the previous example, the cooling infrastructure is greatly reduced because no air handlers or chillers are needed. A dry cooler (basically a large radiator) circulates warm coolant into an open-bath immersion tank and provides waterless cooling: no water has to be lost or evaporated to cool the system.

Microsoft uses special server board designs that are smaller and blind-mate to power connectors at the bottom of the tank; as in the DUG example, the boards are effectively slotted in and stacked horizontally.

A less well-known aspect of this cooling approach is its ability to concentrate heat through its use of radiators. This in turn makes real heat-reuse scenarios such as district heating possible, whereas the waste heat from air-cooled systems is often too low-grade to be of any real use.

Microsoft is also recognizing the overclocking opportunity such a solution brings. Husam Alissa, director of advanced cooling and performance at Microsoft, explained: "We could go as high and as low with densities as we want to, we'd be able to support hundreds of kilowatts in single tank as we densify hardware."

Microsoft has shared the designs and lessons from this project with the wider industry through the Open Compute Project, as it looks to grow the ecosystem around this technology.

But there's a reason why Microsoft hasn't deployed this system in all of its data centers: the ecosystem is not quite there yet, staff aren't trained in the new approaches, and critical questions around the supply of cooling solutions, security, and safety have yet to be answered.

More crucially, it is not yet clear how large the market for ultra-dense systems will be, with many racks still happily humming away below 10kW.

Should that density rise, operators currently have a number of different approaches to choose from, and within that a variety of form factors and pathways they could take. There are no agreed standards, and no settled consensus, as the technology and its implementation remain in the early stages.

It will take projects like these, and their long-term success, for others to feel comfortable taking the plunge.