Intel wants you to know it has a lot of products for the data center: CPUs, FPGAs, different types of memory, Ethernet. You name it, Intel probably does it.

Today, at its Data Centric Event in San Francisco, Intel unveiled its updated portfolio, spanning the breadth of the data center. Ahead of the official announcement, DCD traveled to Intel’s Oregon offices to get the lowdown on the technologies, and talk to the people behind them.

I was initially trepidatious about the event, as my last experience with the company had been rather disappointing. 2018’s Data Centric Innovation Summit lacked any real reveals, and had the air of a magician, mid-trick, realizing he had forgotten to hide a dove up his sleeve.

But this time, the workshop was more assertive, if still lacking in grand reveals. Exotic technology like Foveros microbumps was left in the future, and exciting new architectures like the Xe GPUs and Sunny Cove CPUs were for another time - this was about the bread and butter of what Intel hopes to sell in the year ahead. We’re talking new Xeons, FPGAs, and Optane.

Strap in for a perfectly normal ride.

Xeon SP's second generation

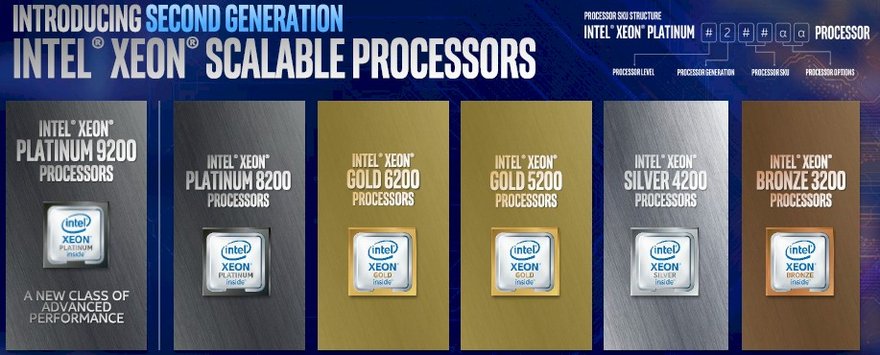

Starting today, the second generation of Intel Xeon Scalable processors (née Cascade Lake) is available, coming in some 50 different SKUs, all at 14nm. They feature up to 48 lanes of PCIe 3.0, and up to six memory channels per processor die. There’s 1MB of dedicated L2 cache per core, and up to 38.5MB of (non-inclusive) shared L3 cache.

“We're talking about the ability to address all of the workloads across this entire edge back to the cloud,” Lisa Spelman, VP & GM of Xeon products and data center marketing, told DCD. “We really have examples across pretty everything that either an enterprise or a cloud service provider or a network customer needs to do - we have a silicon foundation for that.”

The Cascade Lake products, clocked from 1.9GHz for the Xeon SP 6222V to 4.4GHz in Turbo mode for the Xeon SP 6244, will come in at the same price as the first generation Xeon SP chips.

“The mainstream gold, the mainstream silver, you're seeing 20 to 40 percent performance improvements, just from the last gen, Skylake. That's within the same node and within the same platform,” Spelman said.

Ian Steiner, principal engineer and Cascade Lake lead architect, concurred: “We've built a really good foundation on Skylake. And we've been trying to take advantage of that foundation - this is more than a CPU.”

Core to the upgrades is the integration of 'Intel Deep Learning Boost,' a technology the company says is optimized to accelerate AI inference workloads, with a focus on frameworks like TensorFlow, PyTorch, Caffe, MXNet and PaddlePaddle.

"When people said we’re 100x slower [at deep learning workloads], there was some truth with that a few years ago," Indu Kalyanaraman, AI performance manager at Intel, said. "It’s a software thing… Intel realized there was a problem, we stepped in, we made huge progress in optimization. Going forward, we no longer have the excuse that software was not optimized."

With the specter of Spectre still looming in people's minds, the chips will include several hardware-enhanced security features, including side channel protections built directly into the silicon, although full Spectre mitigation would have required a complete redesign.

Additionally, Cascade Lake CPUs come with support for the company's Optane DC persistent memory - but more on that later.

"We expect this to be one of the fastest Xeon transitions in our history," Spelman said. "The market is ready for this. The system vendors are ready, the end customers are ready."

One of the most interesting Xeon variants is the 9200 processor, Intel's most powerful high-end chip, featuring a whopping 56 cores and 112 threads - compared to 28 cores and 56 threads for the standard Cascades. Here’s the thing: unlike the rest of the product family, you won’t actually be able to buy it on its own. You have to buy a whole rack.

"These are going to get delivered in a data center block known as the S9200WK - in a 2U system, you can get up to 248 cores in the densest configuration that we will be providing," Kartik Ananth, senior principal engineer at the DCG, said.

"We will be providing different SKUs, and OEMs like Lenovo will be providing SKUs," with customization on memory capacity, storage and liquid or air cooling. “Air cooling can go up to 350 watts thermal design point, and the highest 56-core SKUs will be liquid cooled, at up to 400 watts TDP,” Ananth said.

“I think you will see us push the envelope on thermals for the markets and customers that desire it and have that capability,” Spelman told DCD. “Clearly, that's not a mainstream thing that everybody is going to deploy, but there's certainly an interest level and a market for it.”

Considering the power and density of the 9200, it is aimed at the areas one would expect: “HPC, AI, and memory-bandwidth sensitive workloads,” Ananth said. While the rest of the Xeons are generally available now, the 9200s are expected "to start shipping in the first half of 2019, ramping in the second half of the year."

At the other end of the market, Intel has Xeons for 5G, and for edge. The 5G Xeons are network-optimized processors built in collaboration with communications service providers, with the company promising they will be able to deliver more subscriber capacity and reduce bottlenecks in network function virtualization (NFV) infrastructure.

As for the edge, Intel has unveiled the Xeon D-1600 processor, the latest in the D family of SoCs designed for locations where power and space are limited.

Rajesh Gadiyar, CTO of the network platforms group, claimed that it will bring a roughly 50 percent performance improvement over the D-1500. “If you look at telco aggregation sites, central offices, some of the enterprise designs for virtualizing their customer premises - those places tend to be more power and thermally constrained, and that is where actually this product really shines.”

Altera's children

Next up, let’s look at Intel’s FPGAs - the company has finally hit 10nm, albeit not in its CPUs. Its new Intel Agilex FPGA family is the first since it acquired Altera for $16.7bn in 2015, this time with added technology from its 2018 eASIC acquisition.

The chip family should, the company claims, provide up to 40 percent higher performance, or up to 40 percent lower total power, compared with Stratix 10 FPGAs, and will support Compute Express Link, a cache- and memory-coherent interconnect to future Xeons.

“The entire family line is based on a chiplet architecture base,” Jose Alvarez, CTO Office PSG, said. “So essentially, in the center, we have the FPGA logic, the fabric with the high speed interfaces - DDR, etc - into one package, and then around it we're using Embedded Multi-die Interconnect Bridge (EMIB) technology, so we have the capability of bringing in chiplets from either other suppliers or our own.”

He added: "Agilex will address many, many markets - vision analytics, smart cities, surveillance, 5G, network function virtualization, and storage and acceleration in the cloud."

They will start sampling in the second half of 2019.

Persistence is key

"This is an exciting technology, it really is a once in a career hardware change." Lily Looi, a senior principal engineer at Intel, is talking about the company's Optane DC Persistent Memory. Perhaps because of the number of times the technology has been touted and previewed to journalists, the assembled hacks did not react with immediate reverence.

But Optane DC Persistent Memory, which is finally available today, is indeed an intriguing product. Based on 3D XPoint technology, the persistent, non-volatile memory is almost as fast as DRAM, but continues to store data when the system is switched off.

“In some sense, it is like memory, and it's also like storage,” Mohamed Arafa, a senior principal engineer at Intel, said. “So it's getting genes from both sides - on the memory side, it's getting very comparable latencies to DRAM. On the storage side, it's giving you that capacity and persistence, which is new to the memory subsystem.”
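To make the "like memory, and also like storage" idea concrete, here is a minimal sketch in Python. It is not Intel's API: an ordinary memory-mapped file stands in for a persistent memory region, and the names `REGION_SIZE`, `write_record`, `read_record` and `demo` are hypothetical. On real hardware, an application would more likely map a file on a DAX-enabled file system, possibly through Intel's PMDK libraries, neither of which is used here.

```python
import mmap
import os
import tempfile

REGION_SIZE = 4096  # hypothetical size of the stand-in region (one page)


def write_record(path, payload):
    """Store bytes with plain slice assignment, then flush so they persist."""
    with open(path, "r+b") as f:
        with mmap.mmap(f.fileno(), REGION_SIZE) as region:
            region[0:len(payload)] = payload  # memory-style write, no write() call
            region.flush()                    # push the change to the backing file


def read_record(path, length):
    """Map the region again, as after a restart, and read the bytes back."""
    with open(path, "rb") as f:
        with mmap.mmap(f.fileno(), REGION_SIZE, access=mmap.ACCESS_READ) as region:
            return bytes(region[0:length])


def demo():
    """Write through one mapping, drop it, and read through a fresh one."""
    payload = b"survives restart"
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "pmem_standin.bin")
        # Assumption: a regular file plays the role of the persistent region.
        with open(path, "wb") as f:
            f.truncate(REGION_SIZE)
        write_record(path, payload)
        return read_record(path, len(payload))


if __name__ == "__main__":
    print(demo())
```

The point of the sketch is the programming model rather than the speed: data is touched with memory-style reads and writes, yet it is still there after the mapping is torn down and recreated.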

When combined with traditional DRAM, it will provide up to 36TB of system-level memory capacity. The company does not want to replace DRAM, but augment it, and intelligently shift data to the most appropriate memory at a given time. It comes in capacities of 128GB to 512GB per module, with up to 8.3GB/s reads and up to 3.0GB/s writes.

Intel claims it will cut system restart times from minutes to seconds, allow for up to 36 percent more virtual machines, and provide up to 2x system memory capacity, reaching up to 36TB in an eight-socket system. The product is expected to be deployed in Aurora, the US’s first exascale supercomputer, while Google Cloud previously announced it would use the memory product.
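The 36TB figure can be sanity-checked with some back-of-the-envelope arithmetic. The sketch below uses numbers quoted in this article (six memory channels per processor, 512GB as the largest Optane module, eight sockets) plus two assumptions that are not stated here: one Optane module per channel, and one 256GB DRAM DIMM alongside it. The function name `system_capacity_tb` is made up for illustration.

```python
SOCKETS = 8                # eight-socket system, per Intel's claim
CHANNELS_PER_SOCKET = 6    # up to six memory channels per processor
OPTANE_MODULE_GB = 512     # largest Optane DC persistent memory module
DRAM_DIMM_GB = 256         # assumption: one 256GB DRAM DIMM per channel


def system_capacity_tb(sockets=SOCKETS, channels=CHANNELS_PER_SOCKET,
                       optane_gb=OPTANE_MODULE_GB, dram_gb=DRAM_DIMM_GB):
    """Return (optane_tb, dram_tb, total_tb), assuming one module of each kind per channel."""
    optane_tb = sockets * channels * optane_gb / 1024
    dram_tb = sockets * channels * dram_gb / 1024
    return optane_tb, dram_tb, optane_tb + dram_tb


if __name__ == "__main__":
    optane_tb, dram_tb, total_tb = system_capacity_tb()
    print(f"Optane: {optane_tb} TB, DRAM: {dram_tb} TB, total: {total_tb} TB")
```

Under those assumptions the split works out to 24TB of Optane plus 12TB of DRAM, which matches the 36TB headline number.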

It will not, however, be deployed in conjunction with rival chips - it only works with the second gen Intel Xeon Scalables.

In other storage news, the company announced Optane SSD DC D4800X (Dual Port) and Intel SSD D5-P4326 (Intel QLC 3D NAND).

Rounding it out, Intel introduced its Ethernet 800 Series adapter with Application Device Queues (ADQ) technology, with up to 100Gbps port speeds.

The wolf is far from the door

Regular followers of Intel product announcements should find nothing here surprising - the reveals were primarily either expected product launches, or expected product iterations. But the lineup still provides a bevy of goods that will help the company keep a strong grip on a market it dominates, despite impressive efforts by AMD and, to a lesser extent, Arm.

Even though the company has some struggles - namely in reaching 10nm for its server CPUs, and in convincing the world that its wares are the ones to use for AI - it only has to be concerned about marginal market share losses. Its products, software support and, frankly, inertia, keep it safe - for now.