With widespread digitization now affecting every industry, capturing the value of new technologies to deliver new services and drive better customer experiences across online channels is not only lucrative but increasingly essential. Organizations are under intense pressure to be better, faster, cheaper or safer than the competition. But companies encumbered with legacy systems can find it impossible even to stay relevant against more nimble competitors. Aging infrastructure restricts the speed of development, the quality of the final product and, crucially, the ability to make changes as you go.

Fortunately, there are multiple approaches to enabling legacy modernization. In this article I want to make the case that a combination of people, processes, and platforms should provide the foundation to transform development methods and systems.

People: the productivity problem

First, let’s look at the most critical element: people. While developers are now broadly recognized as key drivers of enterprise innovation, companies are failing to capitalize effectively on their skills and abilities. Ensuring that you have processes that enable developers to work productively and effectively should be the priority of any legacy modernization project.

A recent Stripe and Evans Poll survey is evidence of this, with software developers stating that they spend an average of 42% of their time on maintenance and poorly developed legacy software.

About half of the respondents attribute this loss in developer productivity to legacy applications. Breaking the time down more closely, 9% is spent on repairing faulty code and 33% on technical debt, meaning maintenance issues such as debugging and refactoring.

This represents only one part of the picture, however. This year, we at MongoDB conducted our own research with Stack Overflow into developer productivity, uncovering a host of challenges that hold developers back from delivering to their full potential. We found that 41% of a developer’s working day goes towards the upkeep of infrastructure, instead of innovation or bringing new products to market.

Creaking infrastructure and a focus on monotonous, backend coding are costing developers valuable time and diminishing the value that they can provide to a business. It is essential to redirect the development strategy now and migrate legacy applications to modern data platforms and software. Otherwise you’re putting your most valuable resource, your developers, in shackles and not exploiting the value captured in new data sources flowing into the business.

There are many better ways of working, such as agile software development, DevOps and microservices. These approaches result in higher quality software that can be developed faster, maintained more easily and scaled better in the cloud. Let’s take a closer look at what these terms mean and why businesses should be making the move.

Processes: moving to microservices patterns

The large, monolithic codebases that traditionally powered enterprise applications make it difficult to move quickly. The monolithic approach also presents organizational alignment problems which hinder agility. As products evolve, the complexity of the code can become extremely hard to manage, and since monoliths must be developed as one unit, each code change requires coordination that slows development.

To build better applications and to build them faster, modern development teams are changing methodology. DevOps and agile software development are driving the formation of cross-functional teams, comprising developers, ops, security, and the business itself - essentially self-contained units that have all of the skills required to build and evolve new services in one place.

Such teams are breaking monolithic applications down into smaller, discrete components known as “microservices” that are autonomous and typically aligned with a particular business objective. By using the microservices approach, a large company can launch new products and services faster and align development teams with business objectives.

Services are typically focused on a specific, discrete objective or function and decoupled along business boundaries. Separating services by business boundaries allows teams to focus on the right goals and also ensures autonomy between services. Each service is developed, tested, and deployed independently, and services are usually separated as independent processes that communicate over a network via standardized APIs.
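As a rough illustration of this decoupling, the sketch below models two services that each keep their own private state and talk only through JSON messages. The service names, the message fields and the in-process function call standing in for a network request are all assumptions made for the example, not a prescribed design.

```python
import json


class InventoryService:
    """Owns stock levels; nothing outside this class touches _stock directly."""

    def __init__(self):
        self._stock = {"sku-1": 5}  # hypothetical starting stock

    def handle(self, request_json: str) -> str:
        # The JSON string stands in for the body of a network request.
        request = json.loads(request_json)
        sku, quantity = request["sku"], request["quantity"]
        available = self._stock.get(sku, 0)
        if available < quantity:
            return json.dumps({"reserved": False, "available": available})
        self._stock[sku] = available - quantity
        return json.dumps({"reserved": True, "available": available - quantity})


class OrderService:
    """Owns orders; reaches inventory only through its JSON interface."""

    def __init__(self, inventory_endpoint):
        self._orders = []
        # A callable taking and returning a JSON string (assumed transport).
        self._inventory_endpoint = inventory_endpoint

    def place_order(self, sku: str, quantity: int) -> bool:
        request = json.dumps({"sku": sku, "quantity": quantity})
        reply = json.loads(self._inventory_endpoint(request))
        if reply["reserved"]:
            self._orders.append({"sku": sku, "quantity": quantity})
        return reply["reserved"]

    def order_count(self) -> int:
        return len(self._orders)


inventory = InventoryService()
orders = OrderService(inventory.handle)
first = orders.place_order("sku-1", 3)   # enough stock, order accepted
second = orders.place_order("sku-1", 3)  # only 2 units left, order rejected
```

Because the order service depends only on the message format, the inventory service could be rewritten, redeployed or moved to another host without the order service changing.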

Given that a typical characteristic of microservices is that each autonomous service maps to its own data store, rather than a shared database, it is important to find a platform that is flexible enough to support microservices effectively. Let’s now discuss the how and why of this migration from a relational, tabular database to a modern data platform.

Platforms: the advantages of a document-oriented data model

Some legacy applications are difficult to lift onto modern data and software platforms because they are highly critical to day-to-day operations. Examples include banks or airlines that run on mainframes – modifying these systems to include new functions can be like open-heart surgery. One false move and the application dies, and the business can’t function.

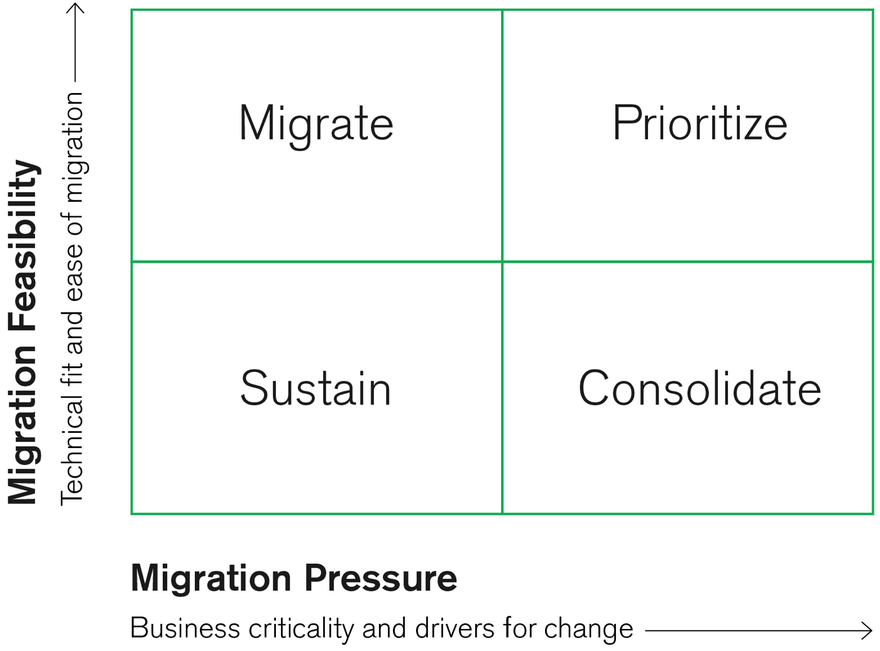

Nevertheless, there are practicable ways to renovate legacy applications, adhering to an established and effective methodology. The first step is to assess the portfolio of existing applications based on dimensions of technical fit, business criticality and an organization’s drivers for change. High-scoring applications are further analyzed and a high-level design and estimation of effort is constructed for re-platforming. This is then reported back to the relevant application owners for a go/no-go decision.
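One way to picture the assessment step is as a simple weighted score across the three dimensions. The sketch below is only illustrative: the 1 to 5 scale, the weights, the shortlist threshold and the application names are hypothetical values chosen for the example, not part of any established methodology.

```python
from dataclasses import dataclass


@dataclass
class Application:
    name: str
    technical_fit: int         # 1 (poor) to 5 (good), assumed scale
    business_criticality: int  # 1 to 5, assumed scale
    drivers_for_change: int    # 1 to 5, assumed scale


# Hypothetical weights; a real assessment would agree these with stakeholders.
WEIGHTS = {
    "technical_fit": 0.4,
    "business_criticality": 0.3,
    "drivers_for_change": 0.3,
}
SHORTLIST_THRESHOLD = 3.5  # hypothetical cut-off for further analysis


def score(app: Application) -> float:
    """Weighted sum of the three assessment dimensions."""
    return sum(getattr(app, dim) * weight for dim, weight in WEIGHTS.items())


def shortlist(portfolio):
    """Names of applications at or above the threshold, best score first."""
    ranked = sorted(portfolio, key=score, reverse=True)
    return [app.name for app in ranked if score(app) >= SHORTLIST_THRESHOLD]


portfolio = [
    Application("billing", 4, 5, 4),
    Application("intranet-wiki", 2, 1, 2),
    Application("customer-portal", 5, 3, 4),
]
candidates = shortlist(portfolio)
```

The shortlisted names would then go forward to the design and effort estimation stage before the go/no-go decision.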

Existing tabular databases – best-of-breed a decade or two ago – were never designed for the data management demands of today’s digital economy. These tabular databases, which have been used for years, have one major disadvantage: they are too inflexible when new functionality needs to be added to the software. It is difficult to add new types of data to a rigid schema of linked two-dimensional tables.

Documents are a much more natural way to describe data. They present a single data structure, with related data embedded as sub-documents and arrays. This allows documents to be closely aligned to the structure of objects in the programming language. As a result, it’s simpler and faster for developers to model how data in the application will map to data stored in the database. It also significantly reduces the barrier-to-entry for new developers who begin working on a project – for example, adding new microservices to an existing app.
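To make the alignment between objects and documents concrete, here is a minimal sketch using only the Python standard library. The `Customer` and `Address` classes and their fields are hypothetical; the point is that the nested object converts to a single nested structure with no reshaping.

```python
from dataclasses import dataclass, field, asdict
from typing import List


@dataclass
class Address:
    city: str
    country: str


@dataclass
class Customer:
    name: str
    address: Address                                 # maps to an embedded sub-document
    phones: List[str] = field(default_factory=list)  # maps to an array


customer = Customer("Ada", Address("London", "UK"), ["+44 20 0000 0000"])

# asdict() walks the nested dataclasses and returns plain dicts and lists,
# which is the shape a document database would store.
document = asdict(customer)
```

The resulting dictionary has the same shape as the object that produced it, which is why a developer reading the code can predict what the stored document will look like.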

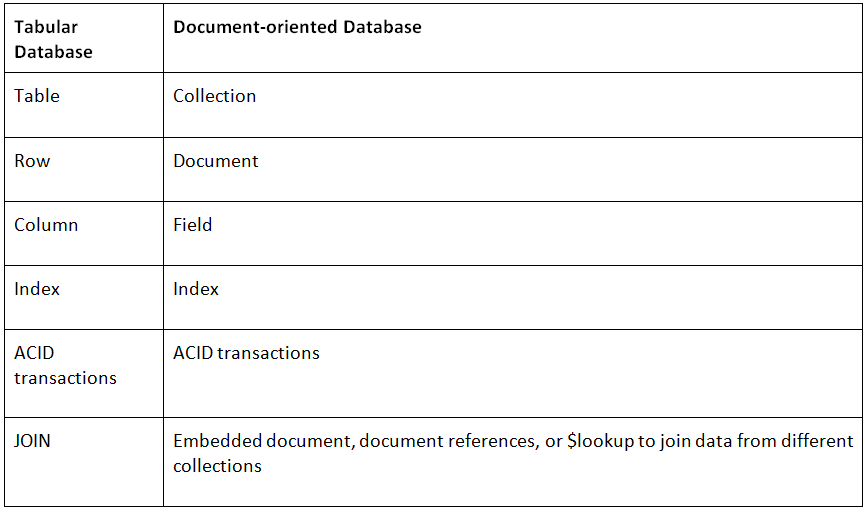

The most fundamental change in migrating from a tabular database to a document-oriented database such as MongoDB is the way in which the data is modeled and the schema which represents those models. As with any data modeling exercise, each use case will be different, but there are some general considerations that apply to most schema migration projects.

Schema design requires a change in perspective for data architects, developers, and DBAs. It means moving from the legacy relational data model that flattens data into rigid two-dimensional tabular structures of rows and columns to a natural and flexible document data model with embedded sub-documents and arrays that map closely to objects in code, and to new types of rich data generated by online and mobile apps.
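A small sketch can show what this change in perspective means in practice. It assumes two hypothetical tables, customers and orders, linked by a `customer_id` column, and folds the order rows into an array inside each customer document. Whether to embed related data like this or keep it separate depends on the use case; the example only illustrates the embedding option.

```python
# Rows as they might come out of two related tables (hypothetical data).
customer_rows = [{"customer_id": 1, "name": "Ada"}]
order_rows = [
    {"order_id": 10, "customer_id": 1, "item": "keyboard"},
    {"order_id": 11, "customer_id": 1, "item": "monitor"},
]


def to_documents(customers, orders):
    """Build one document per customer, embedding that customer's orders."""
    documents = []
    for customer in customers:
        documents.append({
            "_id": customer["customer_id"],
            "name": customer["name"],
            # The foreign key disappears: related rows become an embedded array.
            "orders": [
                {"order_id": order["order_id"], "item": order["item"]}
                for order in orders
                if order["customer_id"] == customer["customer_id"]
            ],
        })
    return documents


documents = to_documents(customer_rows, order_rows)
```

Reading a customer together with their orders now means fetching one document rather than joining two tables.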

The table below provides a useful reference for translating terminology from tabular to document-oriented databases.

Today’s generation of databases offers application developers lower cost, greater efficiency, and superior performance in comparison to more traditional relational counterparts. A key driver behind the lower cost is the ability to scale out your system over commodity hardware. Older relational database technology, on the other hand, is expensive to scale because you need to buy a bigger server to meet greater volume demands.

To meet the needs of apps with large data sets and high throughput requirements, these distributed databases provide horizontal scale-out for databases on low-cost, commodity hardware or cloud infrastructure using a technique called sharding. Sharding automatically partitions and distributes data across multiple physical instances called shards, allowing developers to seamlessly scale the database as their apps grow beyond the hardware limits of a single server, and it does this without adding complexity to the application.
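The partitioning idea behind sharding can be sketched in a few lines. The toy store below is an assumption-laden illustration, not how any particular database implements it: it uses a fixed number of in-memory shards and a hash of the key to choose one, and it ignores rebalancing, replication and range-based partitioning, which a real distributed database handles for you.

```python
import hashlib


class ShardedStore:
    """Toy key-value store that spreads keys across several shards."""

    def __init__(self, shard_count: int):
        # Each dict stands in for a separate physical instance.
        self.shards = [dict() for _ in range(shard_count)]

    def _shard_for(self, key: str) -> int:
        # A stable hash, so the same key always lands on the same shard.
        digest = hashlib.sha256(key.encode("utf-8")).digest()
        return int.from_bytes(digest[:8], "big") % len(self.shards)

    def put(self, key: str, value) -> None:
        self.shards[self._shard_for(key)][key] = value

    def get(self, key: str):
        return self.shards[self._shard_for(key)].get(key)


store = ShardedStore(shard_count=3)
for i in range(100):
    store.put(f"user-{i}", {"visits": i})
```

The calling code only ever uses `put` and `get`; which shard holds a given key is invisible to it, which mirrors the point that scaling out need not add complexity to the application.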

Tabular databases that have been designed to run on a single server are also architecturally misaligned with modern cloud platforms, which are built from low-cost commodity hardware and designed to scale out as more capacity is needed. To reduce the likelihood of cloud lock-in, teams should build their applications on distributed databases that will deliver a consistent experience across any environment.

To conclude, while legacy technologies such as tabular databases, and methodologies such as ‘waterfall development’, have a long-standing position in most organizations, businesses now need to go faster and meet demands that exceed the limits of what’s possible with technology that is more than 30 years old.

Adopting new architectures by moving to microservices patterns and migrating to modern data platforms should now be on the agenda for any business looking to transform business agility and unlock developer productivity.

Organizations must work hard to maximize the innovation that developers can provide by modernizing to platforms and processes that are intuitive and natural to work with, instead of creating additional speed bumps in the development process.

It’s crucial not to be daunted by the prospect of moving on from ineffective, monolithic architectures. It doesn’t need to be a “big bang”, all-or-nothing approach. By implementing the three P’s - a combination of people, processes, and platforms - businesses can not only stay competitive but be better, faster, cheaper and safer.