As networks are increasingly stressed at the edges to support more users, more devices, more apps, and more services, the data center interconnect application has emerged as a critical and fast-growing segment in the network landscape.

Interconnection bandwidth in Asia-Pacific is set to grow at a CAGR of 51 percent, reaching over 2,200Tbps by 2021, with Singapore’s interconnection bandwidth alone expected to more than quadruple over the same period. Capacity demand will rise further as businesses increasingly go digital. Real-time communication and data transfer, whether between data centers or inside them, depend on high-speed, reliable interconnectivity.

In an era when the ability to deliver ultra-fast transactions is essential to business, we explore best practices for data center interconnectivity and the technologies available today to extend the capabilities of your network.

Flatter network architecture for increased connectivity and scalability

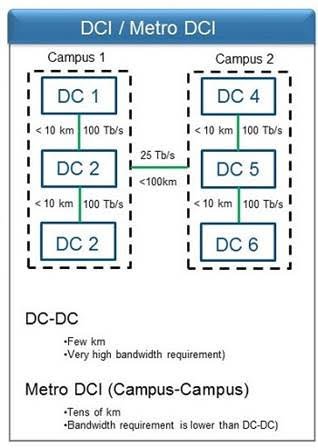

Massive data growth has driven the expansion of data center campuses, notably hyperscale data centers, and every building on a campus must now be connected with adequate bandwidth. To keep data flowing within a single campus, each data center may already be transmitting to the other data centers at capacities of up to 200Tbps, and future requirements will be higher still (see Figure 1).

Figure 1: Bandwidth demands can reach 100Tbps or even 200Tbps.

In response to growing workload and latency demands, today’s hyperscale data centers are migrating to a spine-and-leaf architecture (see Figure 2), which divides the network into two stages. The spine stage aggregates traffic and routes packets toward their final destination, while the leaf stage connects end hosts and load-balances connections across the spine.
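The source does not say how leaf switches spread connections across the spine. One common mechanism is equal-cost multipath (ECMP) hashing, sketched below under that assumption: each flow's 5-tuple is hashed to pick a spine uplink, so packets of one flow stay on one path (preserving order) while many flows spread across all spines. The `Flow` type and `pick_spine` function are hypothetical names for illustration, and real switches use hardware hash functions rather than SHA-256.

```python
import hashlib
from collections import Counter

# Hypothetical flow identifier: (src_ip, dst_ip, protocol, src_port, dst_port)
Flow = tuple[str, str, int, int, int]


def pick_spine(flow: Flow, num_spines: int) -> int:
    """Map a flow to one spine uplink by hashing its 5-tuple (ECMP-style).

    The same flow always hashes to the same spine, so its packets stay in
    order; different flows are spread across all available spines.
    """
    key = "|".join(str(field) for field in flow).encode()
    digest = hashlib.sha256(key).digest()
    return int.from_bytes(digest[:8], "big") % num_spines


if __name__ == "__main__":
    spines = 4
    # 1,000 flows between the same two hosts that differ only in source port.
    flows = [("10.0.0.1", "10.0.1.2", 6, 40000 + i, 443) for i in range(1000)]
    load = Counter(pick_spine(f, spines) for f in flows)
    print(dict(sorted(load.items())))  # roughly even counts per spine
```

Hashing per flow rather than per packet is the usual trade-off: it avoids reordering within a connection at the cost of less perfectly even load when a few flows are very large.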

Ideally, each leaf switch connects to every spine switch to maximize connectivity between servers; consequently, the network requires high-radix spine (core) switches. In many environments, the large spine switches connect to a higher-level spine switch that ties all the buildings on the campus together. As a result of this flatter architecture and the adoption of high-radix switches, we expect networks to become larger, more modular, and more scalable.
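The link between full leaf-to-spine fan-out and high-radix switches can be made concrete with a little arithmetic. The sketch below assumes a strict full mesh (exactly one link per leaf/spine pair); the class and function names and the example port counts are hypothetical, not taken from the source.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LeafSpineFabric:
    """Two-stage fabric in which every leaf has one link to every spine."""
    leaves: int
    spines: int

    @property
    def links(self) -> int:
        # Full mesh between the stages: one link per (leaf, spine) pair.
        return self.leaves * self.spines

    @property
    def min_spine_radix(self) -> int:
        # Each spine needs at least one downlink port per leaf.
        return self.leaves

    @property
    def leaf_to_leaf_paths(self) -> int:
        # Leaf -> any spine -> leaf: one equal-cost path through each spine.
        return self.spines


def max_leaves(spine_radix: int, super_spine_uplinks: int = 0) -> int:
    """Most leaves one spine can serve when some of its ports are reserved
    as uplinks to a higher-level spine (e.g. to tie campus buildings together)."""
    return max(spine_radix - super_spine_uplinks, 0)


if __name__ == "__main__":
    fabric = LeafSpineFabric(leaves=32, spines=8)  # hypothetical sizes
    print(fabric.links, fabric.min_spine_radix, fabric.leaf_to_leaf_paths)
    print(max_leaves(64, super_spine_uplinks=16))
```

Under these assumptions the number of leaves, and therefore servers, a fabric can reach is capped by spine radix, which is why the move to flatter networks goes hand in hand with high-radix switches.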

Optimizing bandwidth delivery with extreme-density networks

What is the optimal, most cost-effective technology for delivering this much bandwidth between buildings on a data center campus? Several approaches have been evaluated, but the prevalent model is to transmit at lower rates over many fibers. Reaching 200Tbps this way requires more than 3,000 fibers for each data center interconnection. Once you account for the fibers needed to connect every data center to every other data center on a campus, counts can easily exceed 10,000 fibers. Transmitting at lower rates over many fibers therefore demands extremely dense cables.
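The fiber counts above follow from simple arithmetic, sketched here under stated assumptions. The source does not give a per-lane rate, so the example assumes 100G lanes on duplex pairs (one transmit and one receive fiber); the function names and the four-building campus are hypothetical.

```python
import math


def fibers_per_link(capacity_gbps: float, lane_rate_gbps: float,
                    fibers_per_lane: int = 2) -> int:
    """Fibers needed to carry `capacity_gbps` between two buildings.

    Assumes every lane runs at `lane_rate_gbps` and, by default, uses a
    duplex pair (one transmit fiber and one receive fiber).
    """
    lanes = math.ceil(capacity_gbps / lane_rate_gbps)
    return lanes * fibers_per_lane


def campus_mesh_fibers(buildings: int, fibers_per_pair: int) -> int:
    """Total fibers when every building links directly to every other one."""
    pairs = buildings * (buildings - 1) // 2
    return pairs * fibers_per_pair


if __name__ == "__main__":
    per_link = fibers_per_link(200_000, lane_rate_gbps=100)  # 200Tbps over 100G lanes
    print(per_link)                          # 4000
    print(campus_mesh_fibers(4, per_link))   # 6 building pairs -> 24000
```

With these assumptions a single 200Tbps link needs 4,000 fibers, in line with "more than 3,000", and because a full mesh grows with n(n-1)/2 building pairs, even a small campus quickly passes 10,000 fibers.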

With the need for extreme-density networks established, it is important to understand the best ways to build them. New cable and ribbon designs have effectively doubled fiber capacity, from 1,728 to 3,456 fibers, within the same cable diameter or cross-section. These designs generally follow one of two approaches: one uses standard matrix ribbon in more closely packable subunits, and the other uses standard central-core or slotted-core cable designs with loosely bonded, net-design ribbons that can fold onto each other (see Figure 3).

Extreme-density cable designs allow much higher fiber concentration in the same duct space. Figure 4 illustrates how combining different extreme-density cables lets network owners achieve the fiber densities that hyperscale data center interconnections require.
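Choosing a combination of cables for a target fiber count can be framed as a small search problem. The sketch below assumes only the 1,728- and 3,456-fiber sizes mentioned above are available and treats "fewest cables" as a rough proxy for duct space; real designs also weigh cable diameter, cost, and installation constraints. `fewest_cables` is a hypothetical helper, not an industry tool.

```python
from itertools import product


def fewest_cables(target_fibers: int, sizes: tuple[int, ...] = (1728, 3456)):
    """Return (cable_count, counts_per_size) for the fewest cables whose
    combined fiber count meets or exceeds `target_fibers`.

    Brute force over per-size counts is fine for a handful of cable sizes.
    Ties on cable count are broken by the smallest surplus of fibers.
    """
    max_each = [-(-target_fibers // s) for s in sizes]  # ceiling division
    best = None
    for counts in product(*(range(m + 1) for m in max_each)):
        total = sum(c * s for c, s in zip(counts, sizes))
        if total < target_fibers:
            continue
        key = (sum(counts), total - target_fibers)
        if best is None or key < best[0]:
            best = (key, counts)
    return best[0][0], dict(zip(sizes, best[1]))


if __name__ == "__main__":
    print(fewest_cables(5000))   # (2, {1728: 1, 3456: 1})
    print(fewest_cables(10000))  # (3, {1728: 0, 3456: 3})
```

The 5,000-fiber case shows why mixing sizes helps: one cable of each size covers the target with far less surplus than two 3,456-fiber cables.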

The future of interconnectivity

Current market trends suggest there will be requirements for fiber counts beyond 5,000. To keep infrastructure scalable, there will be increasing pressure to reduce cable sizes. With fiber packing density already approaching its physical limits, however, meaningfully reducing cable diameters further will become increasingly difficult.
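The physical limit on packing density can be illustrated with a geometric lower bound: no cable can be thinner than its fibers packed at the densest possible arrangement of equal circles (hexagonal packing, density π/(2√3) ≈ 0.9069). The sketch below assumes 250 µm and 200 µm coated fibers for comparison and ignores every other cable component, so it is a bound, not a design estimate. The function name is hypothetical.

```python
import math

# Densest packing of equal circles in a plane (hexagonal), about 0.9069.
HEX_PACKING_DENSITY = math.pi / (2 * math.sqrt(3))


def min_bundle_diameter_mm(fiber_count: int, coated_diameter_um: float = 250.0) -> float:
    """Theoretical lower bound on the diameter of a bundle of coated fibers.

    Only the fibers are counted, packed at the ideal hexagonal density.
    Real cables add ribbon matrix, tubes, strength members and a jacket,
    so their diameters are considerably larger than this bound.
    """
    d_mm = coated_diameter_um / 1000.0
    # Area of N circles of diameter d, inflated by the unavoidable gaps.
    bundle_area = fiber_count * math.pi * (d_mm / 2) ** 2 / HEX_PACKING_DENSITY
    # Diameter of a circle with that area.
    return math.sqrt(4 * bundle_area / math.pi)


if __name__ == "__main__":
    for n in (3456, 5000):
        for coating in (250.0, 200.0):
            print(f"{n} fibers @ {coating:.0f} um: >= {min_bundle_diameter_mm(n, coating):.1f} mm")
```

Because the bound scales with the square root of fiber count, going from 3,456 to 5,000 fibers raises the minimum diameter by about 20 percent (√(5000/3456) ≈ 1.20), while reducing the coating from 250 µm to 200 µm lowers it by 20 percent. Beyond such coating changes there is little geometric slack left, which is the squeeze the paragraph above describes.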

Development has also focused on how best to provide data center interconnect links between locations spaced much farther apart rather than co-located on the same physical campus. Within a typical campus, interconnect lengths are 2km or less, short enough for a single cable to provide connectivity without any splice points. However, as edge data centers are deployed around metropolitan areas to reduce latency, distances are increasing and can reach 75km.
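Distance matters for latency because light in glass travels at roughly two-thirds of its vacuum speed, about 5 µs per km. The sketch below estimates one-way propagation delay only (no transceiver, switching, or queuing delay), assuming a group index of 1.468 as a typical value for standard single-mode fiber; the constant and function names are illustrative.

```python
SPEED_OF_LIGHT_KM_PER_S = 299_792.458
GROUP_INDEX = 1.468  # assumed typical value for standard single-mode fiber


def one_way_latency_us(distance_km: float, group_index: float = GROUP_INDEX) -> float:
    """Propagation delay over fiber in microseconds (ignores equipment delay)."""
    velocity_km_per_s = SPEED_OF_LIGHT_KM_PER_S / group_index
    return distance_km / velocity_km_per_s * 1e6


if __name__ == "__main__":
    for d in (2, 75):
        print(f"{d:>3} km: {one_way_latency_us(d):7.1f} us one-way")
```

Under this assumption a 2km campus link adds about 10 µs each way, while a 75km metro link adds roughly 370 µs, which is why placing edge data centers closer to users reduces latency.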

The industry will continue to face the challenge of developing products that scale to the required fiber counts without overwhelming existing duct and inside plant environments. As the fiber-intensive 5G era approaches, network operators need to start optimizing data center interconnectivity today to support current and future data rates, all the way up to 400G.