The data center sector has boomed over the past few years, driven by growing demand for cloud applications, digitization, IoT devices, and 4G and 5G Edge computing.

According to an IDC forecast, the amount of data generated worldwide will reach 175 zettabytes by 2025. In addition, total investment in data centers is expected to grow from $244.74 billion in 2019 to $432.14 billion in 2025, a compound annual growth rate (CAGR) of 9.9 percent.
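The 9.9 percent figure can be checked against the two investment values using the standard CAGR formula, (end / start)^(1 / years) - 1, with 2019 to 2025 counted as six compounding years. The sketch below is only an arithmetic check of the quoted numbers; the function name is illustrative.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values, as a fraction."""
    return (end_value / start_value) ** (1 / years) - 1


# Investment figures quoted above, in billions of US dollars (2019 -> 2025).
rate = cagr(244.74, 432.14, 2025 - 2019)
print(f"CAGR: {rate:.1%}")  # prints "CAGR: 9.9%"
```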

But as data volumes continue to grow, the environmental impact of data centers also needs to be addressed. Decarbonization is a key factor, as it brings sustainability goals and renewable energy into focus.

One of the main objectives is to build more sustainable data center operations with energy-efficient cooling solutions. Direct-to-chip liquid cooling could become the primary cooling method, driven by rack density, electricity costs and sustainability initiatives.

The challenge

Air-cooled heat sinks currently dominate the sector, as data center operators are more familiar with chilled water and direct expansion systems.

However, air-cooled heat sinks limit how far total energy efficiency can be improved and how much power consumption and carbon emissions can be reduced, because a large portion of the power consumed in today's air-cooled data centers goes to the chillers.

Given more stringent sustainability targets and the introduction of the new carbon tax for data center owners, direct liquid cooling may eventually become the primary cooling technique used by data center operators.

At present, direct liquid cooling is a niche technology, applicable only to high-performance servers with special infrastructure or a warranty in place.

One of the main barriers to the adoption of liquid-cooled heat sinks is the potential risk of leakage, which could damage the servers.

Hyperscale data center owners and operators, as well as server OEMs, are all exploring leak-free direct liquid cooling solutions to meet the demand for higher rack density while improving total energy efficiency.

The solution

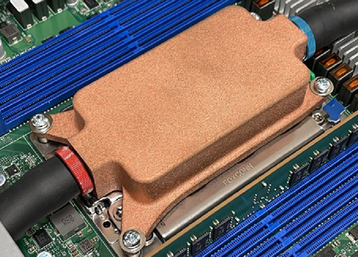

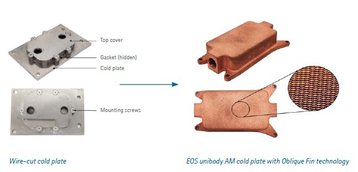

State-of-the-art additive manufacturing (AM) technology from EOS enabled CoolestDC to design and build an integrated, leak-free, unibody liquid-cooled heat sink that addresses the industry's concerns and requirements.

Using the AMCM M 290 1kW system together with the validated EOS Copper CuCP process (based on commercially pure copper), we manufactured a highly dense copper heat sink with no joints, assemblies or gaskets that can withstand water pressures of 6 bar and above.

The AM process is also versatile enough to produce thin-walled internal structures, with walls as thin as 0.2 mm, while the patented oblique fins are printed with an optimized process that preserves their accuracy and high-resolution features.

This one-piece build eliminates the leakage risk that could otherwise cause catastrophic failure of the server boards. It also drastically reduces manufacturing complexity, since no assembly or brazing is required, which in turn increases the part's service life.

The results

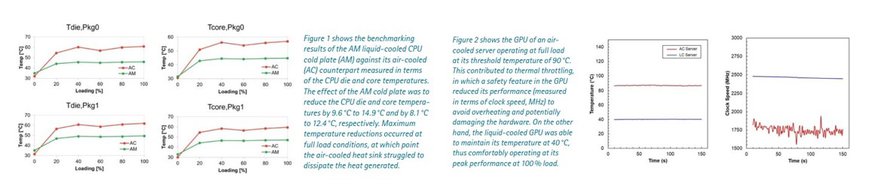

CoolestDC’s unibody AM cold plate was tested against industry-leading air-cooled heat sinks at a fully operational colocation facility belonging to Digital Realty. The findings were:

- Reduction of CPU die and core temperatures by 10 °C (Fig. 1)

- Reduction of GPU operating temperature by almost 50 percent (Fig. 2)

- Ability of the GPU to run at full load at 40 percent higher clock speeds, with no throttling (Fig. 2)

- PUE reduced to 1.2 (calculated)
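Power usage effectiveness (PUE) is the ratio of total facility power to the power delivered to IT equipment, so a PUE of 1.2 means roughly 0.2 W of cooling, power distribution and other overhead for every watt consumed by the servers. The sketch below only illustrates the ratio; the 100 kW IT load and 20 kW overhead are hypothetical values, not measurements from the Digital Realty test.

```python
def pue(total_facility_kw: float, it_equipment_kw: float) -> float:
    """Power usage effectiveness: total facility power divided by IT power."""
    if it_equipment_kw <= 0:
        raise ValueError("IT equipment power must be positive")
    return total_facility_kw / it_equipment_kw


# Hypothetical figures for illustration only (not test data).
it_load_kw = 100.0   # power drawn by servers, storage and network gear
overhead_kw = 20.0   # cooling, power conversion losses, lighting, etc.

ratio = pue(it_load_kw + overhead_kw, it_load_kw)
print(f"PUE: {ratio:.2f}")  # prints "PUE: 1.20"
```

A lower overhead for the same IT load pushes the ratio toward its ideal value of 1.0.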

Benefits to data center providers and stakeholders

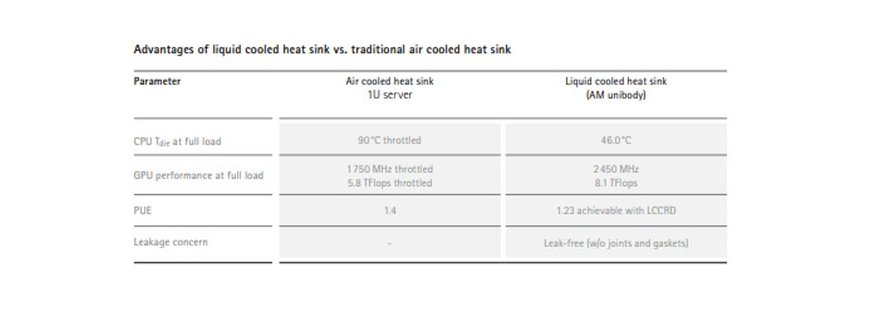

Based on the GPU performance shown in the table below, a fully populated liquid-cooled rack of 25 1U servers (HPC servers with four GPUs each) delivers 810 TFLOPS.

Achieving the same performance would require 30 air-cooled servers, which would have to be split across two racks of 15 servers each, since a single rack would otherwise exceed the 15 kW maximum capacity of an air-cooled rack.
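The rack count follows from simple arithmetic: a rack's power capacity caps how many servers it can hold, and the number of racks is the server count divided by that cap, rounded up. The per-server power draw is not given in the text, so the sketch assumes a hypothetical 1,000 W per air-cooled server, a value consistent with 15 servers fitting under a 15 kW rack limit. It also shows the per-server throughput implied by the 810 TFLOPS figure.

```python
import math


def servers_per_rack(rack_limit_w: int, server_w: int) -> int:
    """How many servers a rack's power budget can supply."""
    return rack_limit_w // server_w


def racks_needed(servers: int, rack_limit_w: int, server_w: int) -> int:
    """Racks required when each rack is capped by its power budget."""
    return math.ceil(servers / servers_per_rack(rack_limit_w, server_w))


TARGET_TFLOPS = 810.0
AIR_RACK_LIMIT_W = 15_000   # maximum air-cooled rack capacity (from the text)
AIR_SERVER_W = 1_000        # hypothetical per-server draw (assumption)

# Throughput per server implied by the server counts in the text.
liquid_per_server = TARGET_TFLOPS / 25   # 25 liquid-cooled servers in one rack
air_per_server = TARGET_TFLOPS / 30      # 30 air-cooled servers for the same total

air_racks = racks_needed(30, AIR_RACK_LIMIT_W, AIR_SERVER_W)
print(f"Liquid-cooled: {liquid_per_server:.1f} TFLOPS per server, 1 rack")
print(f"Air-cooled: {air_per_server:.1f} TFLOPS per server, {air_racks} racks")
```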

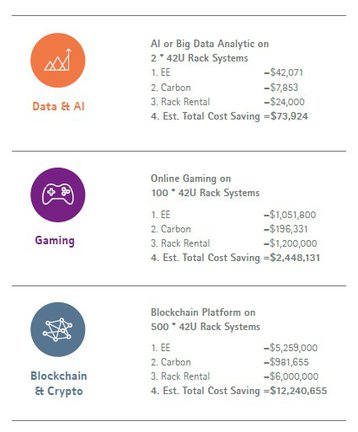

We therefore estimate the value propositions and total cost savings for end customers as follows:

- 40 percent improvement in IT performance

- 30 percent reduction in carbon footprint

- 20 percent savings in rack space cost

- 15 percent capex savings (elimination of chillers, raised floors, etc.)

- Savings of $9.5K/MW through reduced water consumption

In collaboration with the EOS Additive Minds team, CoolestDC has developed an AM-centric design to further optimize product performance and reduce manufacturing costs by over 50 percent. CoolestDC is now also exploring a digitized workflow that will better support and accelerate the mass customization of cold plates for different customer requirements.

With AM, no tooling costs are incurred for mass-customized cold plates, and customers face no minimum order quantity. With traditional manufacturing (machining, wire EDM, brazing, etc.), there is a minimum setup cost of around 3K for each new CPU and GPU model.
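The impact of a fixed setup cost on small custom batches becomes clear when it is spread over the order quantity. The sketch uses the figure of around 3K per new CPU or GPU model from the text (in the same unspecified currency) and models AM as having no tooling cost, as stated there; the order quantities are hypothetical, and per-unit material, machining and other preparation costs are deliberately left out.

```python
def setup_cost_per_unit(setup_cost: float, quantity: int) -> float:
    """Share of a one-off setup cost carried by each unit in an order."""
    if quantity < 1:
        raise ValueError("quantity must be at least 1")
    return setup_cost / quantity


TRADITIONAL_SETUP = 3_000.0  # approx. setup cost per new CPU/GPU model (from the text)
AM_SETUP = 0.0               # no tooling cost with AM (from the text)

for qty in (1, 10, 100):     # hypothetical order quantities
    traditional = setup_cost_per_unit(TRADITIONAL_SETUP, qty)
    additive = setup_cost_per_unit(AM_SETUP, qty)
    print(f"{qty:>4} units: traditional {traditional:8.2f}, AM {additive:.2f} per unit")
```

The traditional setup share falls only as order size grows, which is why a minimum order quantity is usually imposed; with no tooling cost, a single custom cold plate carries no setup premium.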

This AM solution is suitable for both greenfield and brownfield applications, including Edge computing. We envisage that this next generation of designs will be even more cost-effective and efficient, leading to greater savings and performance gains for the end user.
