It is no secret that High Performance Computing (HPC) is evolving towards data-intensive computing, which brings larger data volumes, higher reliability requirements, and more workload types. Customers in the HPC industry therefore need more specialized storage systems to cope with these challenges.

The IO500® list, one of the world's leading HPC storage performance rankings, has been released at the top HPC conferences (SC in the USA and ISC in Germany) since November 2017. Against this backdrop, I/O performance has become an important indicator for measuring the efficiency of supercomputer applications.

The IO500 list simulates different I/O models to measure storage performance using two key indicators: data bandwidth (GiB/s) and metadata performance (kIOP/s). An overall score is calculated from the geometric mean of the two indicators.

Meanwhile, the 10-node list evaluates the maximum performance of a storage system by limiting the benchmark to 10 compute nodes.

This restriction makes the 10-node list a better reference, as it reflects the real-world I/O performance of a storage system more accurately and helps users evaluate storage systems more easily.

With well-balanced, powerful performance, Huawei OceanStor Pacific Storage ranked second on both the IO500 list and the 10-node list.

How does the IO500 benchmark test a storage system?

The benchmark simulates different I/O models and workloads of HPC applications, from light to heavy. It covers both bandwidth and IOPS to evaluate a storage system's ability to support multiple workloads.

The total score is not an arithmetic mean, but a geometric mean of the performance values obtained from all scenario test cases. This ensures that the value calculated for each performance scenario is equally weighted, preventing outstanding performance in a single scenario from significantly increasing the overall score.

However, if a storage system performs poorly in a certain test case, its total score will also be held back significantly. Therefore, a high total score in IO500 requires a storage system to perform well in all test cases with both high bandwidth and IOPS.
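The effect of the geometric mean can be illustrated with a small sketch. The per-test-case numbers below are hypothetical values chosen for illustration, not real IO500 results, and the helper names are assumptions made for this example rather than part of the IO500 tooling.

```python
import math


def geometric_mean(values):
    """Geometric mean of positive values, computed via logarithms."""
    if not values or any(v <= 0 for v in values):
        raise ValueError("values must be a non-empty sequence of positive numbers")
    return math.exp(sum(math.log(v) for v in values) / len(values))


def arithmetic_mean(values):
    """Plain average, shown only for comparison."""
    return sum(values) / len(values)


# Hypothetical per-test-case results (illustrative numbers only).
balanced = [100.0, 100.0, 100.0, 100.0]
one_weak = [100.0, 100.0, 100.0, 1.0]        # one test case performs poorly
one_strong = [100.0, 100.0, 100.0, 10000.0]  # one test case is an outlier

for name, results in [("balanced", balanced),
                      ("one_weak", one_weak),
                      ("one_strong", one_strong)]:
    print(f"{name:10s} geometric={geometric_mean(results):8.2f} "
          f"arithmetic={arithmetic_mean(results):8.2f}")
```

In this toy data set, a single weak test case pulls the geometric mean down to roughly 31.6 while the arithmetic mean stays at 75.25, and a single 100x outlier lifts the geometric mean only to roughly 316 while the arithmetic mean jumps to 2575.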

The advanced architecture and enhanced parallel file system behind the performance

The OceanStor Pacific is a data center-standard, intelligent distributed storage system with superb scale-out and elastic scaling capabilities.

The system software organizes local resources of storage nodes into a distributed storage pool, which provides upper-layer applications with distributed file, object, HDFS, and block storage services as well as a vast array of service functions and value-added features.

The OceanStor Pacific adopts a fully symmetric distributed architecture that allows for easy expansion to thousands of nodes and EB-level capacity, meeting customers' workload growth needs.

The automatic load balancing policy enables data and metadata to be evenly distributed across all nodes, eliminating metadata access bottlenecks and ensuring system performance in large-scale expansion scenarios.

In addition, the storage system uses the FlashLink® technology (which includes intelligent stripe aggregation and I/O priority-based scheduling) and other key technologies such as multi-level cache acceleration and big data pass-through to deliver high bandwidth and low latency.

The key technologies designed for high performance include Distributed Parallel Client (DPC), an intelligent many-core technology provided by FlashLink®, and data flows that adapt to large and small I/Os.

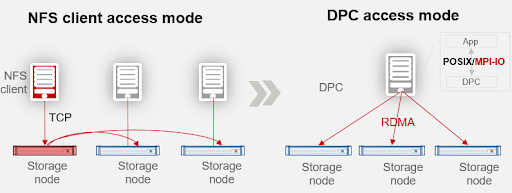

The graph below shows how DPC differs from standard NFS when processing multiple storage nodes.

Most traditional HPC applications use the NFS protocol to read and write data. However, only one connection can be established for an NFS file due to protocol restrictions. As a result, large-file reads and writes create a performance bottleneck for the entire system.

DPC is the parallel file system client provided by OceanFS, a file system running on OceanStor Pacific. In addition to traditional NAS services like NFS and SMB, OceanFS offers parallel file system services that support POSIX and MPI-IO interfaces.

Running on a compute node and exchanging data with back-end storage nodes over a network protocol, the DPC technology enables one compute client to connect to multiple storage nodes, eliminating the performance bottleneck caused by the storage node configuration and unleashing the performance of compute nodes.

NFS determines load balancing in the mounting phase. By contrast, DPC implements global load balancing to bring the performance of the entire storage cluster to a new level.
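The difference between balancing at mount time and balancing globally can be sketched with a toy model. This is not Huawei's DPC algorithm: the round-robin placement, the function names, and the client and node names are all assumptions made for illustration.

```python
def mount_time_balancing(clients, nodes, requests_per_client):
    """Each client is pinned to one node when it mounts; all its I/O goes there."""
    load = {node: 0 for node in nodes}
    for i, client in enumerate(clients):
        node = nodes[i % len(nodes)]  # decided once, at mount time
        load[node] += requests_per_client[client]
    return load


def per_request_balancing(clients, nodes, requests_per_client):
    """Each client spreads its requests across all nodes (round-robin stand-in)."""
    load = {node: 0 for node in nodes}
    k = 0
    for client in clients:
        for _ in range(requests_per_client[client]):
            load[nodes[k % len(nodes)]] += 1
            k += 1
    return load


# Hypothetical cluster: four storage nodes, four clients, one very busy client.
nodes = ["n0", "n1", "n2", "n3"]
clients = ["c0", "c1", "c2", "c3"]
requests = {"c0": 1000, "c1": 10, "c2": 10, "c3": 10}

pinned = mount_time_balancing(clients, nodes, requests)
spread = per_request_balancing(clients, nodes, requests)
print("mount-time :", pinned)
print("per-request:", spread)
```

In this model the busy client saturates the single node it was pinned to at mount time, whereas spreading requests keeps every node within one request of the others.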

Building on years of industry practice and R&D, Huawei launched the OceanStor Pacific next-generation storage for high-performance data analytics (HPDA) to help customers eliminate HPC bottlenecks by accelerating the evolution from traditional HPC to HPDA.

The storage is now widely used in research institutes, universities, and enterprises across Asia Pacific and Europe.

