In the ever-expanding landscape of digital infrastructure, hyperscale data centers stand as the titans of technological prowess.

These sprawling facilities, characterized by their massive scale and unparalleled efficiency, have become the bedrock of the modern digital economy. As we peer into the future, the trajectory of hyperscale data centers promises to be one defined by innovation, resilience, and transformative potential.

According to Synergy Research Group, the number of hyperscale data centers rose from 728 in 2021 to 992 by the end of 2023, and passed the one-thousand mark early in 2024.

As the volume of digital data continues to rise unabated, driven by trends such as the IoT, AI, and big data analytics, the demand for hyperscale infrastructure will only intensify.

To meet this burgeoning demand, hyperscale data centers must expand their footprint, both in terms of physical size and computational capacity, at an unprecedented pace.

The rise of hyperscale data centers

Hyperscale data centers represent a seismic shift in the way we conceptualize and manage data infrastructure. Unlike traditional data centers, which are characterized by a fixed capacity and infrastructure, hyperscale data centers are built to be flexible, scalable, and, most importantly, efficient.

Hyperscale facilities are generally recognized as data centers that occupy at least 10,000 square feet, house more than 5,000 servers, and provide at least 40MW of IT capacity. They typically serve enterprise customers.
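The three thresholds above can be expressed as a simple check. The sketch below is illustrative only: the `Facility` type, its field names, and the `is_hyperscale` function are hypothetical, and the cut-offs are taken directly from the definition quoted in this article rather than from any formal standard.

```python
from dataclasses import dataclass


@dataclass
class Facility:
    """Hypothetical record describing a data center (names are assumptions)."""
    floor_area_sq_ft: float
    server_count: int
    it_capacity_mw: float


def is_hyperscale(facility: Facility) -> bool:
    """Apply the article's thresholds: size, server count, and IT capacity."""
    return (
        facility.floor_area_sq_ft >= 10_000   # at least 10,000 square feet
        and facility.server_count > 5_000     # exceeds 5,000 servers
        and facility.it_capacity_mw >= 40     # at least 40MW of IT capacity
    )


# Example values are made up for illustration.
regional_site = Facility(floor_area_sq_ft=8_000, server_count=1_200, it_capacity_mw=3)
large_campus = Facility(floor_area_sq_ft=250_000, server_count=60_000, it_capacity_mw=80)

print(is_hyperscale(regional_site), is_hyperscale(large_campus))
```

All three conditions must hold at once, so a very large building with a modest power envelope would not qualify under this reading.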

Edge computing

One of the most significant trends shaping the future of hyperscale data centers is the rise of Edge computing. As latency-sensitive applications proliferate and the need for real-time data processing increases, there is a growing imperative to move compute and storage resources closer to the Edge of the network.

Edge computing introduces a distributed architecture that complements the centralized nature of hyperscale data centers. Rather than relying solely on a few large data centers located in centralized locations, Edge computing extends the reach of computing infrastructure to the network’s Edge, closer to where data is generated. This distributed architecture enables faster response times, reduces latency, and improves reliability by processing data locally, without the need to transmit it back to centralized data centers for analysis.
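A rough calculation shows why distance matters for latency. The sketch below assumes light travels through optical fiber at about 200 km per millisecond (roughly two thirds of its speed in a vacuum) and counts propagation delay only, ignoring routing, queuing, and processing time. The function name and the example distances are hypothetical.

```python
# Approximate speed of light in optical fiber, about two thirds of c.
FIBER_SPEED_KM_PER_MS = 200.0


def round_trip_propagation_ms(distance_km: float) -> float:
    """Return the round-trip propagation delay over fiber, in milliseconds."""
    return 2 * distance_km / FIBER_SPEED_KM_PER_MS


# Illustrative distances: a central facility far away versus a nearby Edge site.
central_ms = round_trip_propagation_ms(1_000)
edge_ms = round_trip_propagation_ms(50)

print(f"central: {central_ms} ms, edge: {edge_ms} ms")
```

Real-world latency is higher than these figures, but the propagation component cannot be engineered away, which is part of the case for placing compute near the data source.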

An Edge model also creates new opportunities for scalability and flexibility in hyperscale data center design. By deploying smaller, modular data center facilities at the Edge of the network, hyperscale operators can extend their reach and capacity to meet the growing demand for compute and storage resources in geographically diverse locations.

Sustainability

Today’s data centers require larger fans running at increasingly higher speeds to handle heat generation.

This not only creates deafening noise, it also consumes excessive energy. According to IDC, the worldwide energy consumption of data centers will reach 803 terawatt hours by the year 2027, up from 340 terawatt hours in 2022.
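To put the IDC projection in perspective, the implied annual growth rate can be worked out from the two figures, treating both as terawatt hours. The sketch below assumes smooth compound growth between 2022 and 2027, which is a simplification; the `cagr` helper is a hypothetical name.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values over a number of years."""
    return (end_value / start_value) ** (1 / years) - 1


# Figures quoted from IDC in the article: 340 TWh (2022) to 803 TWh (2027).
energy_growth = cagr(340, 803, 2027 - 2022)

print(f"Implied annual growth: {energy_growth:.1%}")
```

Under that assumption the figures imply growth of a little under 19 percent per year, meaning consumption would more than double over the five-year period.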

Advanced liquid cooling technology, originally developed to deal with the thermal challenges of supercomputers, is now being applied to the transport network architecture of next-generation data centers to significantly lower operating temperatures and cut acoustic noise in half.

A popular strategy among data center owners is direct-to-chip cooling. This method circulates a liquid coolant directly over a CPU or other heat-generating components, absorbing heat from the equipment, then uses a heat exchanger to dissipate heat to ambient air or water.
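The amount of coolant needed follows from a basic heat balance: heat removed equals mass flow times specific heat times the coolant's temperature rise. The sketch below assumes water as the coolant, with a specific heat of about 4,186 J/(kg·K); actual systems often use other fluids or water-glycol mixtures, and the function name and example numbers are hypothetical.

```python
# Approximate specific heat of liquid water, in joules per kilogram per kelvin.
WATER_SPECIFIC_HEAT_J_PER_KG_K = 4186.0


def required_flow_kg_per_s(heat_load_w: float, coolant_temp_rise_k: float) -> float:
    """Mass flow needed to carry away heat_load_w with the given temperature rise."""
    if coolant_temp_rise_k <= 0:
        raise ValueError("coolant temperature rise must be positive")
    return heat_load_w / (WATER_SPECIFIC_HEAT_J_PER_KG_K * coolant_temp_rise_k)


# Illustrative case: a 1 kW component with the coolant warming by 10 K.
flow = required_flow_kg_per_s(heat_load_w=1_000, coolant_temp_rise_k=10)

print(f"{flow:.4f} kg/s")
```

The relationship is linear, so doubling the heat load at the same temperature rise doubles the flow the loop must deliver.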

Similarly, immersion cooling involves submerging servers and other IT equipment in a non-conductive dielectric liquid. This process can be either single-phase, where the coolant stays in liquid form throughout, or two-phase, where the coolant boils into a gas as it absorbs heat and then condenses back to a liquid when it is cooled.

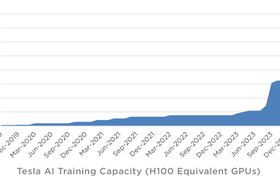

AI-driven operations

AI and machine learning (ML) will play an increasingly prominent role in the future of hyperscale data centers. By leveraging vast amounts of operational data and telemetry, AI-powered systems will enhance performance, reliability, and security while reducing operational costs and downtime.

For example, AI-driven predictive maintenance uses ML algorithms to analyze vast amounts of operational data, such as temperature, humidity, power usage, and equipment performance metrics. By identifying patterns and anomalies in this data, AI systems can predict potential equipment failures before they occur, allowing data center operators to proactively schedule maintenance activities and minimize unplanned downtime.
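The idea of spotting anomalies in telemetry can be illustrated with a far simpler technique than the ML models described here: a rolling z-score, which flags a reading that sits well outside the recent average. The sketch below is a stand-in for illustration, not a description of any operator's system; the function name, window size, threshold, and sample temperatures are all assumptions.

```python
from collections import deque
from statistics import mean, stdev


def flag_anomalies(readings, window=5, threshold=3.0):
    """Return indices of readings that deviate from the trailing-window mean
    by more than `threshold` sample standard deviations."""
    history = deque(maxlen=window)
    flagged = []
    for index, value in enumerate(readings):
        if len(history) == window:
            mu = mean(history)
            sigma = stdev(history)
            # Skip the check when the window is perfectly flat (sigma == 0).
            if sigma > 0 and abs(value - mu) > threshold * sigma:
                flagged.append(index)
        history.append(value)
    return flagged


# Made-up inlet temperatures in degrees Celsius with one obvious spike.
inlet_temps_c = [22.0, 22.1, 21.9, 22.0, 22.2, 22.1, 27.5, 22.0]

print(flag_anomalies(inlet_temps_c))
```

A production system would combine many signals, such as humidity, power draw, and fan speed, and would learn what normal looks like for each piece of equipment rather than relying on a fixed threshold.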

Similarly, AI-driven security monitoring and threat detection can be used to analyze network traffic, system logs, and user behavior patterns to identify potential security threats and anomalies. By continuously monitoring for suspicious activities and deviations from normal behavior, AI systems can quickly detect and mitigate security breaches, such as unauthorized access attempts, malware infections, or data exfiltration attempts. This proactive approach to cybersecurity enhances the overall resilience and integrity of hyperscale data center infrastructure.
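As a minimal illustration of flagging deviations from normal behavior, the sketch below counts failed login attempts per source address and reports any source that exceeds a fixed limit. This is a plain rule-based check, much simpler than the AI-driven detection discussed above; the event format, function name, limit, and addresses are hypothetical.

```python
from collections import Counter


def flag_suspicious_sources(events, max_failures=5):
    """Return source addresses with more than `max_failures` failed logins.

    `events` is an iterable of (source_address, succeeded) tuples.
    """
    failures = Counter(source for source, succeeded in events if not succeeded)
    return sorted(source for source, count in failures.items() if count > max_failures)


# Made-up log events: one source fails repeatedly, another fails twice then succeeds.
events = (
    [("10.0.0.7", False)] * 8
    + [("10.0.0.9", False)] * 2
    + [("10.0.0.9", True)]
)

print(flag_suspicious_sources(events))
```

An ML-based approach would replace the fixed limit with a learned baseline for each user or system, so that unusual behavior is detected even when it stays under any single hard-coded threshold.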

The future of hyperscale data centers holds immense promise and potential, fueled by innovation, scalability, and adaptability.

As digital transformation accelerates and the demand for compute and storage capacity continues to soar, hyperscale data centers will remain at the forefront of powering the digital economy.

By embracing emerging technologies, adopting sustainable practices, and automating processes, hyperscale data centers will continue to evolve and thrive in the dynamic landscape of the digital age.