Tesla's artificial intelligence infrastructure capex was $1 billion in the first quarter, the company said in its latest earnings report.

In a call during which the electric vehicle company reported falling profits and negative cash flow, Tesla pointed to its AI investment as an opportunity for future growth.

"Over the past few months, we've been actively working on expanding Tesla's core AI infrastructure," CEO Elon Musk said.

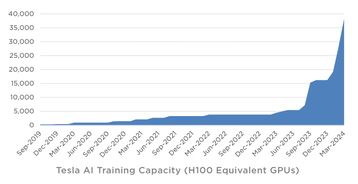

"For a while there, we were training-constrained in our progress. We are, at this point, no longer training-constrained, and so we're making rapid progress. We've installed and commissioned - meaning they're actually working - 35,000 H100 computers or GPUs... roughly 35,000 H100s are active, and we expect that to be probably 85,000 or thereabouts by the end of this year just for training."

In a presentation alongside the call, the compute is referred to as 'H100 equivalent GPUs,' so it may also include other GPUs. It is not clear if the number also takes into account Tesla's own D1 chip, which is not a GPU.

The same presentation said that Tesla had increased AI training compute by more than 130 percent in Q1.

Musk also suggested that, at some undefined point in the future, Tesla cars could operate as Edge compute systems when they are not being driven. "So kind of like AWS, but distributed inference," he said. "Like it takes a lot of computers to train an AI model, but many orders of magnitude less compute to run it.

"So if you can imagine a future, perhaps, where there's a fleet of 100 million Teslas, and on average, they've got like maybe a kilowatt of inference compute. That's 100 gigawatts of inference compute distributed all around the world. It's pretty hard to put together 100 gigawatts of AI compute. And even in an autonomous future where the car is, perhaps, used instead of being used 10 hours a week, it is used 50 hours a week.

"That still leaves over 100 hours a week where the car inference computer could be doing something else. And it seems like it will be a waste not to use it."
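Musk's back-of-the-envelope figures can be checked with a short calculation. The sketch below is illustrative only: it takes the quoted numbers at face value (100 million cars, one kilowatt each, 50 hours of driving a week) and treats "a kilowatt of inference compute" as a power figure rather than a measure of processing throughput. The function and variable names are our own, not Tesla's.

```python
# Sanity check of the fleet figures quoted on the call.
# All inputs are Musk's hypothetical numbers, not measured data.

HOURS_PER_WEEK = 7 * 24  # 168 hours in a week
KW_PER_GW = 1_000_000    # 1 gigawatt = 1,000,000 kilowatts


def fleet_power_gw(num_cars: int, kw_per_car: float) -> float:
    """Total power of the fleet's inference computers, in gigawatts."""
    return num_cars * kw_per_car / KW_PER_GW


def idle_hours_per_week(hours_in_use: float) -> float:
    """Hours per week a car is not being driven."""
    return HOURS_PER_WEEK - hours_in_use


fleet_gw = fleet_power_gw(num_cars=100_000_000, kw_per_car=1.0)
idle_hours = idle_hours_per_week(hours_in_use=50)
idle_share = idle_hours / HOURS_PER_WEEK

print(f"Fleet inference power: {fleet_gw:.0f} GW")
print(f"Idle hours per week:   {idle_hours:.0f}")
print(f"Share of week idle:    {idle_share:.0%}")
```

On those assumptions the arithmetic holds: the fleet totals 100 gigawatts, and a car driven 50 hours a week sits idle for 118 hours, or about 70 percent of the time. The calculation says nothing about whether that capacity could be used in practice, which would depend on factors such as power, connectivity, and cooling that were not addressed in the remarks quoted here.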

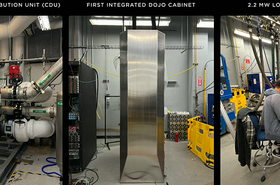

It is not clear how concrete the idea is: Musk in 2022 claimed that the company's Dojo supercomputer (which uses its D1 chip and GPUs) would likely be made available to other businesses "in like an Amazon Web Services manner." He has also repeatedly promised products that have yet to launch.

Earlier this month, the company announced that it planned to lay off 10 percent of its global workforce, or about 14,000 people.