For decades, the data center has been considered the nexus of the network. For enterprises, telco carriers, cable operators and, more recently, service providers like Google and Facebook, the data center was the heart and muscle of IT. The emergence of the cloud only reinforced the central importance of the modern data center.

But listen closely and you’ll hear the rumblings of change. As networks ramp up their support for 5G and IoT, IT managers are focusing on the edge and the increasing need to locate more capacity and processing power closer to end users. As they do, they are re-evaluating the role of their data centers.

In 2018, Gartner predicted that by 2025, 75 percent of enterprise-generated data would be created and processed at the edge (outside a traditional centralized data center or cloud), up from just 10 percent in 2018. At the same time, data volumes are about to shift into a higher gear: a single autonomous car is expected to generate an average of 4,000 GB of data per hour of driving.
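To put the autonomous-car figure in network terms, the short Python sketch that follows converts it into an average bit rate. It assumes decimal units (1 GB = 10^9 bytes) and a hypothetical two-hour drive; it is rough arithmetic, not a traffic model.

```python
# Back-of-envelope conversion of the per-car figure cited above
# (4,000 GB per hour of driving) into an average bit rate.
# Assumption: decimal units, 1 GB = 10**9 bytes and 1 Gb = 10**9 bits.

BITS_PER_BYTE = 8
SECONDS_PER_HOUR = 3_600


def sustained_rate_gbps(gb_per_hour: float) -> float:
    """Convert a data volume in GB per hour into an average rate in Gb/s."""
    return gb_per_hour * BITS_PER_BYTE / SECONDS_PER_HOUR


def volume_tb(gb_per_hour: float, hours: float) -> float:
    """Total data in TB produced over the given number of hours."""
    return gb_per_hour * hours / 1_000


if __name__ == "__main__":
    print(f"Average rate: {sustained_rate_gbps(4_000):.2f} Gb/s")  # 8.89 Gb/s
    print(f"Two-hour drive: {volume_tb(4_000, 2):.0f} TB")          # 8 TB (hypothetical trip length)
```

Averaged over an hour, that is close to 9 Gb/s per vehicle, which illustrates why hauling all raw data back to a central site is hard to justify.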

Network operators are now scrambling to work out how best to support huge increases in edge-based traffic, as well as the demand for single-digit millisecond latency, without torpedoing their investment in existing data centers. Heavy investment in east-west network links and redundant peer-to-peer nodes is part of the answer, as is building more processing power where the data is created. But what about the data centers? What role will they play?

The AI/ML feedback loop

The future business case for hyperscale and cloud-scale data centers lies in their massive processing and storage capacity. As activity heats up at the edge, that capacity will be needed to create the algorithms that process the data generated there. In an IoT-enabled world, the importance of artificial intelligence (AI) and machine learning (ML) cannot be overstated. Neither can the role of the data center in making it happen.

Producing the algorithms needed to drive AI and ML requires massive amounts of data processing. Core data centers have begun deploying beefier CPUs teamed with tensor processing units (TPUs) or other specialized hardware. In addition, AI and ML applications often require high-speed, high-capacity networks, with an advanced switch layer feeding banks of servers that are all working on the same problem. AI and ML models are the product of this intensive effort.
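One common way "banks of servers all working on the same problem" cooperate is synchronous data-parallel training: each server computes a gradient on its own slice of the data, the network averages those gradients, and every server applies the same update. The Python sketch that follows shows that pattern on a hypothetical one-parameter model; it is an illustration of the general technique, not a description of any particular provider's training stack, and the averaging function merely stands in for a real network all-reduce.

```python
# Hypothetical sketch: synchronous data-parallel training of a
# one-parameter linear model y = w * x on several "servers".
# The all-reduce step stands in for the high-speed switching layer.
from typing import List, Tuple

Sample = Tuple[float, float]  # (x, y)


def local_gradient(w: float, shard: List[Sample]) -> float:
    """Mean-squared-error gradient dL/dw computed on one server's data shard."""
    return sum(2 * (w * x - y) * x for x, y in shard) / len(shard)


def all_reduce_mean(values: List[float]) -> float:
    """Average the per-server gradients, as a network all-reduce would."""
    return sum(values) / len(values)


def train(shards: List[List[Sample]], steps: int = 200, lr: float = 0.01) -> float:
    """Every server starts from the same weight and applies the same averaged update."""
    w = 0.0
    for _ in range(steps):
        grads = [local_gradient(w, shard) for shard in shards]  # computed in parallel in a real cluster
        w -= lr * all_reduce_mean(grads)
    return w


if __name__ == "__main__":
    data = [(float(x), 3.0 * x) for x in range(1, 9)]  # ground truth: w = 3
    shards = [data[i::4] for i in range(4)]            # four servers, two samples each
    print(round(train(shards), 4))                     # 3.0
```

Because every server needs the averaged gradient before it can take the next step, the speed of the interconnect directly limits training throughput, which is why these clusters lean on high-capacity switching.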

At the other end of the process, AI and ML models need to be located where they can have the greatest business impact. For enterprise AI applications such as facial recognition, ultra-low-latency requirements dictate that the models be deployed locally, not at the core. But the models must also be adjusted periodically, so data collected at the edge is fed back to the data center to update and refine the algorithms.
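The feedback loop in the paragraph above can be sketched as a toy Python program: edge nodes run inference locally and buffer what they see, the core retrains on the uploaded data, and the new model version is pushed back out. Everything here is hypothetical (the `EdgeNode` and `CoreDataCenter` classes, the single-threshold "model"), and "retraining" is reduced to refitting that threshold to the mean of all readings collected so far.

```python
# Toy sketch of the edge/core AI-ML feedback loop.
# All classes are hypothetical; "training" is reduced to refitting a threshold.
from dataclasses import dataclass, field
from statistics import mean
from typing import List


@dataclass(frozen=True)
class Model:
    version: int
    threshold: float  # stand-in for real model parameters

    def predict(self, reading: float) -> bool:
        return reading > self.threshold


@dataclass
class EdgeNode:
    name: str
    model: Model
    buffer: List[float] = field(default_factory=list)

    def infer(self, reading: float) -> bool:
        """Run low-latency inference locally and keep the reading for retraining."""
        self.buffer.append(reading)
        return self.model.predict(reading)

    def upload(self) -> List[float]:
        """Send the collected readings back to the core and clear the local buffer."""
        data, self.buffer = self.buffer, []
        return data


@dataclass
class CoreDataCenter:
    model: Model
    history: List[float] = field(default_factory=list)

    def retrain(self, batches: List[List[float]]) -> Model:
        """Fold new edge data into the training set and produce the next model version."""
        for batch in batches:
            self.history.extend(batch)
        if self.history:
            self.model = Model(self.model.version + 1, mean(self.history))
        return self.model


def feedback_cycle(core: CoreDataCenter, edges: List[EdgeNode]) -> Model:
    """One turn of the loop: edge -> core (data), core -> edge (updated model)."""
    new_model = core.retrain([edge.upload() for edge in edges])
    for edge in edges:
        edge.model = new_model
    return new_model


if __name__ == "__main__":
    core = CoreDataCenter(Model(version=1, threshold=10.0))
    edges = [EdgeNode("site-a", core.model), EdgeNode("site-b", core.model)]
    for r in (12.0, 14.0):
        edges[0].infer(r)
    for r in (16.0, 18.0):
        edges[1].infer(r)
    updated = feedback_cycle(core, edges)
    print(updated.version, updated.threshold)  # 2 15.0
```

The split mirrors the division of labor described in the article: inference stays at the edge, where latency matters, while the compute-heavy retraining step runs at the core.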

Playing in the sandbox or owning it?

The AI/ML feedback loop is one example of how data centers will need to support a more expansive and diverse network ecosystem. For the largest players in the hyperscale data center space, this means adapting to a more distributed, collaborative environment. They want to enable their customers to deploy AI or ML at the edge, on their platform, but not necessarily in their owned facilities.

Providers like AWS, Microsoft and Google are now deploying their cloud hardware closer to customer locations, including central offices and on-premises private enterprise data centers. This enables customers to build and run cloud-based applications in any of these facilities, using the hyperscaler's platform across a mix of edge facilities. Because these platforms are also embedded in many carriers' systems, customers can run their applications anywhere the carrier has this presence. This model, still in its infancy, gives customers more flexibility while enabling providers to better support the edge.

Another ecosystem approach, implemented by Vapor IO, offers a business model built on hosted data centers with standardized compute, storage and networking resources. Smaller customers, such as a gaming company, can locate a virtual machine near their own customers and run their applications within the Vapor IO ecosystem. Services like this might operate on a revenue-sharing model, which could be attractive to a small business trying to build services in the edge ecosystem.
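Deciding where to "locate a virtual machine near their customers" usually comes down to measured latency. The article does not describe how Vapor IO or any other provider makes that choice, so the Python sketch that follows is a generic, hypothetical heuristic: pick the candidate site with the lowest 95th-percentile round-trip time that still fits a latency budget. The site names and RTT samples are invented for illustration.

```python
# Hypothetical sketch: choose which edge site should host a customer's VM,
# based on round-trip times (ms) measured from that customer's users.
import math
from typing import Dict, List, Optional


def p95(samples: List[float]) -> float:
    """95th percentile using the nearest-rank method."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(0.95 * len(ordered)))
    return ordered[rank - 1]


def pick_site(rtt_ms: Dict[str, List[float]], budget_ms: float) -> Optional[str]:
    """Return the site with the lowest p95 RTT within the latency budget, or None."""
    scored = {site: p95(samples) for site, samples in rtt_ms.items() if samples}
    eligible = {site: value for site, value in scored.items() if value <= budget_ms}
    if not eligible:
        return None
    return min(eligible, key=lambda site: eligible[site])


if __name__ == "__main__":
    measurements = {
        "metro-edge-1": [4.0, 5.0, 6.0, 7.0, 9.0],
        "metro-edge-2": [2.0, 3.0, 3.0, 4.0, 18.0],
        "regional-core": [18.0, 19.0, 20.0, 22.0, 25.0],
    }
    print(pick_site(measurements, budget_ms=10.0))  # metro-edge-1
    print(pick_site(measurements, budget_ms=5.0))   # None
```

Scoring on a percentile rather than the mean is a deliberate choice: a mean-based ranking would favor `metro-edge-2` (6.0 ms versus 6.2 ms) despite its 18 ms tail, which is exactly what a latency-sensitive application such as a game cannot tolerate.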

Foundational challenges

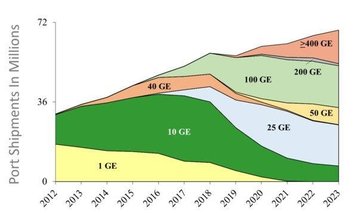

As the vision for next-generation networks comes into focus, the industry must meet the challenges of implementation. Within the data center, we know what that looks like: server connections will move from 50 Gb/s per lane to 100 Gb/s per lane, switching bandwidth will increase to 256 Tb/s, and the migration to 100 Gb/s lanes will take us to 800 Gb/s pluggable modules.
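The lane arithmetic behind the move to 800 Gb/s modules is simple division, sketched in Python below under the assumption that a module's aggregate rate is split evenly across identical lanes.

```python
# Lane arithmetic behind the 50 Gb/s -> 100 Gb/s per-lane migration.
# Assumption: a pluggable's rate is split evenly across identical lanes.

def lanes_needed(module_gbps: int, lane_gbps: int) -> int:
    """Number of lanes needed to build a module of the given aggregate rate."""
    if module_gbps % lane_gbps:
        raise ValueError("module rate must be a whole multiple of the lane rate")
    return module_gbps // lane_gbps


if __name__ == "__main__":
    for module in (400, 800):
        for lane in (50, 100):
            print(f"{module}G module at {lane}G/lane: {lanes_needed(module, lane)} lanes")
```

At 50 Gb/s per lane, an 800 Gb/s module would need 16 lanes; at 100 Gb/s it needs 8, which fits the eight-lane electrical interface of form factors such as QSFP-DD and OSFP. Doubling the lane rate is what makes 800G pluggables practical.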

What is less clear is how the industry will design the infrastructure from the core to the edge: specifically, how to execute data center interconnect (DCI) architectures and metro and long-haul links, and how to support highly redundant peer-to-peer edge nodes. The other challenge is developing the orchestration and automation capabilities needed to manage and route massive amounts of traffic. These issues are front and center as the industry moves toward a 5G/IoT-enabled network.

Getting there together

What we do know for certain is that building and implementing next-generation networks will require a coordinated effort. The data center, whose ability to deliver low-cost, high-volume compute and storage cannot be duplicated at the edge, will certainly have a major role to play. But as distributed facilities at the edge shoulder more of the load, the data center's role will continue to evolve as one part of this larger, distributed ecosystem.


Tying it all together will be a faster, more reliable fiber network, beginning at the core and extending to the furthest edges of the network. That cabling and connectivity platform will be powered by four-level pulse amplitude modulation (PAM4) and coherent processing technologies, will feature co-packaged and digital coherent optics, and will be packaged in compact, ultra-high-strand-count cables. It will provide the continuous thread of consistent performance throughout.
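PAM4 (four-level pulse amplitude modulation) lets each symbol carry two bits instead of one, so a lane can double its bit rate without doubling its symbol rate. The Python sketch that follows shows a Gray-coded PAM4 mapping of the kind used on Ethernet PAM4 links; the ±1/±3 amplitude levels are normalized, and noise, equalization and forward error correction (FEC) are ignored. Coherent optics, which also use phase and polarization, are outside the scope of this sketch.

```python
# Minimal sketch of Gray-coded PAM4 symbol mapping: two bits per symbol,
# four amplitude levels, adjacent levels differing in exactly one bit.
from typing import Dict, List, Tuple

GRAY_PAM4: Dict[Tuple[int, int], int] = {(0, 0): -3, (0, 1): -1, (1, 1): 1, (1, 0): 3}
LEVEL_TO_BITS: Dict[int, Tuple[int, int]] = {level: bits for bits, level in GRAY_PAM4.items()}


def pam4_encode(bits: List[int]) -> List[int]:
    """Map pairs of bits to PAM4 amplitude levels."""
    if len(bits) % 2:
        raise ValueError("PAM4 carries 2 bits per symbol; pad to an even length")
    return [GRAY_PAM4[(bits[i], bits[i + 1])] for i in range(0, len(bits), 2)]


def pam4_decode(symbols: List[int]) -> List[int]:
    """Recover the bit stream from ideal (noise-free) PAM4 levels."""
    bits: List[int] = []
    for level in symbols:
        bits.extend(LEVEL_TO_BITS[level])
    return bits


def symbol_rate_gbd(bit_rate_gbps: float, bits_per_symbol: int) -> float:
    """Symbol (baud) rate needed for a given bit rate, ignoring FEC/coding overhead."""
    return bit_rate_gbps / bits_per_symbol


if __name__ == "__main__":
    tx = [0, 0, 0, 1, 1, 1, 1, 0]
    symbols = pam4_encode(tx)
    print(symbols)                   # [-3, -1, 1, 3]
    assert pam4_decode(symbols) == tx
    print(symbol_rate_gbd(100, 2))   # 50.0, versus 100.0 for NRZ at 1 bit/symbol
```

Gray coding is the usual design choice because the most likely receiver error, mistaking a level for its neighbor, then corrupts only one bit. Real 100 Gb/s lanes run at a somewhat higher symbol rate than this idealized 50 GBd once FEC overhead is included.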

Whether you’re a hyperscale data center, a player focused on the edge, or an infrastructure provider, in the next-generation network, there will be plenty of room—and work—for everybody. The slices aren’t getting smaller; the pie is only becoming larger.