In the data center industry, water has long been a primary source of the cooling needed to keep our high-power servers running. The business case for evaporative cooling is simple – water is cheap and readily available, and using water for cooling saves electricity, which reduces carbon emissions. Given the industry’s focus in recent years on reducing or eliminating climate impact, water cooling seems like an excellent choice.

When concerns about water use are raised, the response from the industry is to point out that electricity generation also consumes large amounts of water, so any water consumed on-site for cooling prevents consumption in the electrical supply chain, and that it all “comes out in the wash,” so to speak.

We can't take water for granted

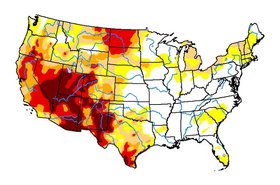

However, as our global understanding of the impacts and risks of climate change evolves, it is becoming increasingly clear that we can no longer take water for granted. As the saying goes, “climate change is water change.” Traditional weather and rainfall patterns no longer apply. While greenhouse gas emissions are a global issue, water is inherently local. Some regions have plentiful water, while others are already experiencing water scarcity and expect increased water stress in the next decade. In addition to differences in local water availability, the water consumed in electricity generation also varies from grid to grid. Data centers that rely on water for cooling are vulnerable to water shortages, risking conflict with the community or a sudden need for expensive retrofits to continue operation. Therefore, it is important for data center operators to evaluate the risks and benefits of water-consuming cooling at each facility, based on local conditions.

Fortunately, the World Resources Institute (WRI) provides useful resources to evaluate both regional water stress (the Aqueduct Water Risk Atlas) and the water consumption intensity of regional electrical grids (a recent paper titled Guidance for Calculating Water Use Embedded in Purchased Electricity). At CyrusOne, we use these tools to understand how our facilities consume water, both directly and through the electricity supply chain.

Over the last three years, we’ve had the opportunity to conduct a case study in the tradeoffs between onsite water consumption for cooling and the “embodied water” of our electricity supply chain.

Doing the math in Carrollton

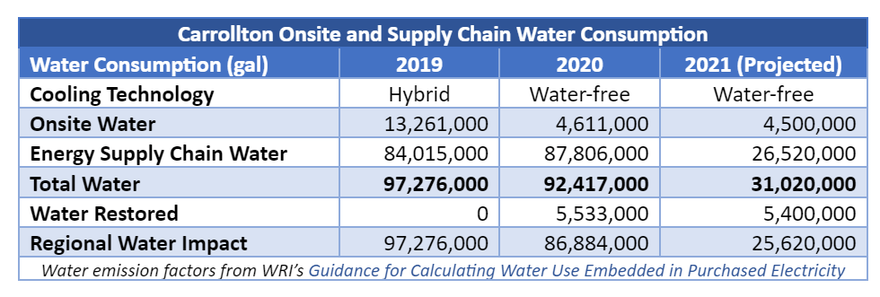

In 2019, our largest data center in Carrollton, Texas consumed 13.3 million gallons of water onsite through its hybrid air- and water-cooled system. Our Water Risk Assessment indicated that North Texas is a high water-stress region, so in 2020 we upgraded the facility to a 100 percent water-free cooling design. This raised the average power usage effectiveness (PUE) slightly, from 1.37 to 1.39, while reducing onsite water use by 65 percent to 4.6 million gallons. Only a portion of this 4.6 million gallons is actually consumed, through irrigation of our onsite landscaping; the rest is discharged to the water treatment works after use in fire systems maintenance, breakrooms, and restrooms. To be conservative, we counted all of it as water consumption for our case study.

This 65 percent decrease looks great in theory, but we wondered whether the supply chain water consumed to generate the extra electricity used by the new cooling system would mean that the facility’s total water consumption stayed the same or even increased. To answer that question, we turned to the World Resources Institute’s 2020 guidance, linked above, which allowed us to estimate the water consumed during electricity generation for Carrollton in 2019 and 2020, based on its source: the ERCOT grid. We discovered that supply chain water greatly outstripped the water used onsite even before our upgrade to water-free cooling. Even so, Carrollton’s overall water use decreased by more than 5 million gallons between 2019 and 2020 after the switch. This result challenges the conventional wisdom that consuming water for cooling saves total water, at least in today’s supply chain.
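The accounting behind this comparison can be sketched in a few lines of Python. This is only an illustration of the general approach (onsite water plus electricity use multiplied by a grid water consumption factor), not our actual calculation. The onsite volumes and PUE values come from the figures above. The IT load and the grid water factor are hypothetical placeholders, so the totals it produces will not match our reported results.

```python
# Sketch of total water accounting: onsite water + water embedded in electricity.
# Onsite volumes and PUE values are from the article. IT_LOAD_KWH and
# GRID_FACTOR_GAL_PER_KWH are HYPOTHETICAL placeholders, not real facility data.

def supply_chain_water_gal(electricity_kwh, grid_factor_gal_per_kwh):
    """Water consumed upstream to generate the electricity the facility used."""
    return electricity_kwh * grid_factor_gal_per_kwh

def total_water_gal(onsite_gal, electricity_kwh, grid_factor_gal_per_kwh):
    """Onsite water plus supply chain (embedded) water."""
    return onsite_gal + supply_chain_water_gal(electricity_kwh, grid_factor_gal_per_kwh)

# Figures stated in the article
ONSITE_2019_GAL = 13.3e6
ONSITE_2020_GAL = 4.6e6
PUE_2019, PUE_2020 = 1.37, 1.39

# Hypothetical inputs for illustration only
IT_LOAD_KWH = 100e6             # assumed annual IT energy, held constant
GRID_FACTOR_GAL_PER_KWH = 0.4   # assumed grid water consumption factor

# Share of onsite water eliminated by the upgrade (about 65 percent)
onsite_reduction = (ONSITE_2019_GAL - ONSITE_2020_GAL) / ONSITE_2019_GAL

# Facility electricity = IT load x PUE
total_2019 = total_water_gal(ONSITE_2019_GAL, IT_LOAD_KWH * PUE_2019, GRID_FACTOR_GAL_PER_KWH)
total_2020 = total_water_gal(ONSITE_2020_GAL, IT_LOAD_KWH * PUE_2020, GRID_FACTOR_GAL_PER_KWH)

# Grid factor at which the extra electricity would cancel the onsite savings
extra_kwh = IT_LOAD_KWH * (PUE_2020 - PUE_2019)
break_even_factor = (ONSITE_2019_GAL - ONSITE_2020_GAL) / extra_kwh

print(f"Onsite reduction: {onsite_reduction:.1%}")
print(f"Change in total water: {total_2020 - total_2019:,.0f} gal")
print(f"Break-even grid factor: {break_even_factor:.2f} gal/kWh")
```

The break-even factor is the useful number in a sketch like this: as long as the local grid consumes less water per kilowatt-hour than that threshold, removing onsite evaporative cooling lowers total water consumption despite the higher PUE.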

Moreover, a new renewable electricity source that we invested in during 2020 came online this year. We expect this project to supply approximately 70 percent of Carrollton’s annual power consumption with renewable solar electricity. Based on the WRI’s tool, solar electricity has a water consumption factor of zero, so we expect our estimated energy supply chain water consumption to fall by 70 percent. As you can see in the table below, the total water consumed at Carrollton in 2021 will be less than a third of the consumption in 2019, demonstrating how onsite water-free cooling can enable a truly water-free cooling future for this facility.
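The 70 percent figure follows from treating the facility's electricity as a weighted mix of sources, each with its own water consumption factor. A minimal sketch of that blending is shown here. The solar factor of zero and the 70 percent share are from the text above, while the grid factor is a hypothetical placeholder and the function name is ours for illustration.

```python
# Sketch of a blended water consumption factor for a mix of electricity sources.
# GRID_FACTOR_GAL_PER_KWH is a HYPOTHETICAL placeholder; the solar factor (zero)
# and the 70 percent solar share are the values cited in the article.

def blended_water_factor(mix):
    """Weighted average water factor for (share, gal_per_kwh) pairs.

    Shares are fractions of annual electricity and must sum to 1.
    """
    total_share = sum(share for share, _ in mix)
    if abs(total_share - 1.0) > 1e-9:
        raise ValueError("source shares must sum to 1")
    return sum(share * factor for share, factor in mix)

GRID_FACTOR_GAL_PER_KWH = 0.4   # assumed, for illustration only
SOLAR_FACTOR_GAL_PER_KWH = 0.0  # per the WRI tool, as cited in the article

blended = blended_water_factor([
    (0.70, SOLAR_FACTOR_GAL_PER_KWH),  # expected solar share
    (0.30, GRID_FACTOR_GAL_PER_KWH),   # remainder from the grid
])

# Fractional drop in supply chain water per kWh relative to grid-only power
reduction = 1 - blended / GRID_FACTOR_GAL_PER_KWH
print(f"Supply chain water reduction: {reduction:.0%}")
```

Because the solar factor is zero, the reduction equals the solar share regardless of which grid factor is assumed.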

Also in 2020, we began purchasing Water Restoration Credits to offset our onsite water use at Carrollton, restoring 20 percent more water than we consumed in order to achieve our net positive water designation. From here, it’s easy to imagine a future when the facility uses 100 percent renewable electricity for the full promise of net zero carbon with net positive water.

To summarize, water is a regional issue that should be evaluated separately at each facility. The choices we made at Carrollton were based on our understanding of the regional water risk and the water intensity of the local grid. At other facilities, it may be more water-efficient to consume water onsite due to a more water-intensive supply chain. However, as both electrical grids and industrial consumers transition to solar and wind power, the water intensity of electricity moves toward zero. Therefore, in the long run, we will no longer be able to justify consuming water onsite in terms of saving water at the power plant. Instead, water use should be evaluated based on regional supply and the risk of local scarcity. It is important that we stop thinking of water as a globally inexpensive, abundant resource that is always a reasonable trade-off for electricity, and instead treat it with greater nuance, taking into account local environmental conditions and future projections of water availability.

The data center industry has some of the most aggressive carbon targets of any industry. We are clearly committed to reducing our climate impact. It is time to turn a critical eye on our use of water as well, to prevent damage to the habitats and communities where we operate due to the scarcity of this critical resource.