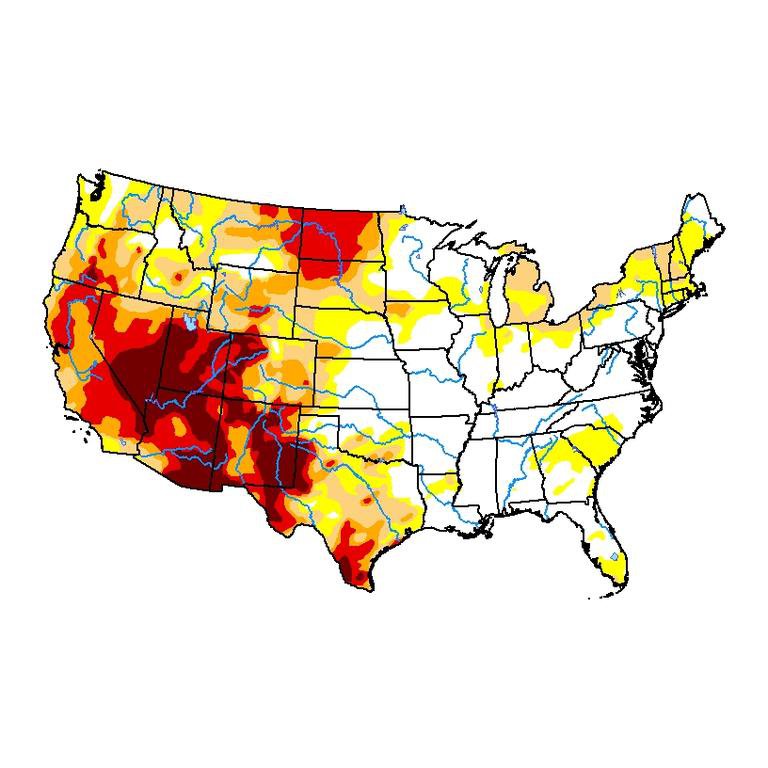

This September, as a brutal drought dragged on in the Southwestern United States, the National Oceanic and Atmospheric Administration issued a stark warning: This is only going to get worse.

The region is suffering the worst water shortage on record. Reservoirs are at all-time lows. Drinking supplies, irrigation systems, hydropower generation, fishing stocks, and more are at risk of collapse.

NOAA linked this drought, and others across the US, to climate change - a man-made problem that we appear unlikely to adequately combat any time soon.

Every sector will have to face this reality - that they will need to reduce water usage as supplies dwindle, and they will need to become better citizens in struggling communities.

The booming data center industry is no different. As facilities have cropped up across the country, they have added extra pressure to regions already challenged with meeting public, agricultural, and industrial needs.

It raises the question - how much water do US data centers use?

"We don't really know," Lawrence Berkeley National Laboratory research scientist Dr. Arman Shehabi explained.

This article appeared in Issue 42 of the DCD>Magazine.

Best known in the industry for his landmark work on quantifying how much power US data centers consume (around 205TWh in 2018), Shehabi is now trying to do the same for data center water.

"I never thought it could be worse transparency than on the energy side, but we actually know less," he said.

In a study recently published in Environmental Research Letters, researchers Shehabi, Landon Marston, and Md Abu Bakar Siddik tried to estimate the data center industry’s water use in the US, with what data is currently available.

When calculating water use, it's important to not only look at the water used directly to cool data centers, but also at the water used by power plants to generate that 205TWh.

The researchers also tracked the water used by wastewater treatment plants due to data centers, as well as the water used by power plants to power that portion of the wastewater treatment site's workload.

Server manufacturing and wider data center lifecycle aspects were not included, as their footprints are comparatively small.

"The total annual operational water footprint of US data centers in 2018 is estimated at 5.13 × 10⁸ m³," the paper states, with the industry relying on water from 90 percent of US watersheds.

"Roughly three-fourths of US data centers’ operational water footprint is from indirect water dependencies. The indirect water footprint of data centers in 2018 due to their electricity demands is 3.83 × 10⁸ m³, while the indirect water footprint attributed to water and wastewater utilities serving data centers is several orders of magnitude smaller (4.50 × 10⁵ m³)."

Overall, the researchers estimated that 1 MWh of data center energy consumption required 7.1 m³ of water, but that there is a huge variation at the local level based on the power plants used.

For example, while there are not that many data centers in the Southwest subbasin, the disproportionate amount of electricity from water-intensive hydroelectric facilities and the high evaporative potential in this arid region mean that the data centers there are responsible for much more water usage.

Direct water consumption of US data centers in 2018 is estimated at 1.30 × 10⁸ m³.
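The three components quoted from the paper can be cross-checked against its headline total and the "roughly three-fourths" indirect share. The short sketch below does only that arithmetic. The variable and function names are illustrative, and it assumes all three figures describe the same year.

```python
# Operational water footprint components quoted from the paper, in cubic metres.
DIRECT_M3 = 1.30e8                # water used on site, mainly for cooling
INDIRECT_ELECTRICITY_M3 = 3.83e8  # water consumed generating the electricity
INDIRECT_UTILITIES_M3 = 4.50e5    # water and wastewater utilities serving data centers


def footprint_summary(direct, electricity, utilities):
    """Return the total footprint and the indirect share of that total."""
    indirect = electricity + utilities
    total = direct + indirect
    return total, indirect / total


total_m3, indirect_share = footprint_summary(
    DIRECT_M3, INDIRECT_ELECTRICITY_M3, INDIRECT_UTILITIES_M3
)
print(f"Total: {total_m3:.2e} m3")
print(f"Indirect share: {indirect_share:.1%}")
```

The components sum to about 5.13 × 10⁸ m³, with just under 75 percent of it indirect, which is consistent with the figures the paper reports.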

"Collectively, data centers are among the top-ten water-consuming industrial or commercial industries in the US," the paper states.

A lot of that water is potable (drinking) water coming straight from the utility. "That's pretty unusual for an industry," Shehabi said.

“If you put data centers in the same category as other sorts of manufacturing sectors, well you don’t have a steel plant or a textile plant using water from the local utility. It means that there's more embodied energy associated with that water, because it has gone through the whole treatment process.”

Unfortunately, that large water use disproportionately impacts drier areas. A water scarcity footprint (WSF) measures the pressure that consumptive water use exerts on available freshwater within a river basin, and indicates the potential to deprive other societal and environmental water users of the water they need.

"The WSF of data centers in 2018 is 1.29 × 10⁹ m³ of US equivalent water consumption," the researchers found.

Data centers are more likely to "utilize water resources from watersheds experiencing greater water scarcity than average," particularly in the Western US.
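To make the idea of "US equivalent" water concrete, here is a minimal sketch of how a scarcity-weighted footprint is commonly built: each basin's consumption is multiplied by a factor expressing how scarce water is there relative to the national average. The basin names, volumes, and factors below are hypothetical, and the weighting form is an assumption for illustration rather than the paper's exact method.

```python
# Hypothetical basins: (water consumed in m3, scarcity factor vs. the US average).
# These values are illustrative assumptions, not figures from the paper.
basins = {
    "wet_basin": (4.0e6, 0.5),
    "average_basin": (3.0e6, 1.0),
    "arid_basin": (1.0e6, 8.0),
}


def water_scarcity_footprint(basin_data):
    """Sum each basin's consumption weighted by its scarcity factor."""
    return sum(volume * factor for volume, factor in basin_data.values())


consumed = sum(volume for volume, _ in basins.values())
wsf = water_scarcity_footprint(basins)
print(f"Consumed: {consumed:.2e} m3, scarcity-weighted: {wsf:.2e} m3")

# Ratio of the national WSF to the total operational footprint quoted in the
# article, assuming both cover the same water use.
implied_weight = 1.29e9 / 5.13e8
print(f"Implied average weight: {implied_weight:.2f}")
```

In the toy example, the arid basin supplies an eighth of the water but most of the weighted footprint. On the same reading, the paper's national figures imply an average weight of about 2.5, which fits the finding that data centers lean on scarcer-than-average watersheds.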

Changing the location of a data center can help reduce water use and impact, but may require tradeoffs with efforts to reduce carbon footprint. Equally, siting a facility in some areas could lower its water usage but draw that water from subbasins facing higher levels of water scarcity.

"In general, locating a data center within the Northeast, Northwest, and Southwest will reduce the facility's carbon footprint, while locating a data center in the Midwest and portions of the Southeast, Northeast, and Northwest will reduce its WSF."

Strategically placing hyperscale and cloud data centers in the right regions could reduce future WSF by 90 percent and CO2 emissions by 55 percent, the researchers said.

"Data centers' heavy reliance on water-scarce basins to supply their direct and indirect water requirements not only highlight the industry’s role in local water scarcity, but also exposes potential risk since water stress is expected to increase in many watersheds due to increases in water demands and more intense, prolonged droughts due to climate change."

Unfortunately, there is no accurate metric for balancing water usage, versus the water stress of a region, versus the carbon intensity of the power. It gets even more complicated when you consider the differences in water used (treated or untreated). “It’s something we need to work on, we need to come up with that metric, and start taking into account how water is being used,” Shehabi said.

The estimates were based on publicly available data on water usage, cooling requirements, and extrapolations based on data center power usage. But for the problem to be tackled, the industry needs to be more forthcoming with data, Shehabi implored.

“I consider it now a major sector like textiles and chemical industry, and for these other industries a lot of data is collected by the federal government,” he said. “And there’s a lot that's even put out by trade groups globally. We know how much electricity steel manufacturing uses in the world.

“In the US, we have a good sense of textile industry power usage, and we have that because it's reported. We don't need a bunch of PhDs coming up with sophisticated models to estimate it.”

Data center companies are often cautious about sharing any information they don’t have to. Many are not even collecting data at all.

This summer, a survey by industry body the Uptime Institute found that only around half of data center managers track water usage at any level. “Most say it’s because there is no business justification, which suggests a low priority for management — be it cost, risk, or environmental considerations," Uptime found. "Yet even some of those who do not track say they want to reduce their water consumption.”

"We can't even get a baseline of what 'good' water consumption is," Shehabi said. "Google or Facebook may claim they are very efficient in terms of water usage, but there's no average.

"That's one of those things that I'm hoping we can shed more light on. Because without us being able to know how they're doing, there's really no push for them to improve in that way."

Google often makes cities sign non-disclosure agreements that prohibit them from disclosing how much water its data centers use. Sometimes glimpses slip out: Google's Berkeley County data center campus in South Carolina is permitted to use up to 549 million gallons of groundwater each year for cooling, a fact that was only revealed after local conservation groups fought a two-year battle to stop the company taking water from a shrinking aquifer.

Facebook is a little more open about how much it consumes.

Planning documents disclosed the scale of its demands at a planned Mesa, Arizona, data center: Initially, the data center will use 550 acre-feet of water per year increasing to 1,100 acre-feet per year for Phase 2 and 1,400 acre-feet per year for Phase 3. An acre-foot is 325,851 gallons of water, so the Phase 1 facility would consume 180 million gallons of water a year, while Phase 3 would require 500 million gallons of water.
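The conversion is simple enough to check directly. The sketch below uses only the acre-foot figures and the conversion factor given above. The names are illustrative.

```python
GALLONS_PER_ACRE_FOOT = 325_851  # conversion factor quoted in the article

# Annual water demand per phase, in acre-feet, as given in the planning documents.
phases_acre_feet = {"Phase 1": 550, "Phase 2": 1_100, "Phase 3": 1_400}


def acre_feet_to_gallons(acre_feet):
    """Convert a volume in acre-feet to US gallons."""
    return acre_feet * GALLONS_PER_ACRE_FOOT


phases_gallons = {
    name: acre_feet_to_gallons(volume) for name, volume in phases_acre_feet.items()
}
for name, gallons in phases_gallons.items():
    print(f"{name}: {gallons / 1e6:.0f} million gallons per year")
```

This gives roughly 179 million gallons for Phase 1, 358 million for Phase 2, and 456 million for Phase 3, so the 500 million figure for Phase 3 is a generous rounding of the planning numbers.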

This year, Arizona's Department of Water Resources said that water use "is over-allocated and the groundwater is overcommitted. The amount of groundwater rights issued and the amount we are pumping far exceeds our capacity, and Arizona will not reach its 2025 safe yield goal of preserving groundwater."

One of the states worst hit by the ongoing droughts, Arizona faces a stark future. In August, the US declared the first-ever water shortage from the Colorado River, triggering mandatory cuts to its supply. The agricultural industry, already suffering, is preparing for a difficult few years as more cuts are planned.

"As we form Mesa's climate action plan and embark upon the first phase of the seven-state drought contingency plan, making cutbacks to agriculture, I cannot in good conscience approve this mega data center using 1.7 million gallons per day at total build-out, up to 3 million square feet on 396 acres," Mesa Vice Mayor Jenn Duff said, in a vote over the Facebook project. She was the only one to vote against the facility.

That's partially because, for all their flaws and lack of transparency, hyperscalers like Facebook, Google, and Microsoft are at least promising to tackle their water usage - even as they target desert regions for their largest facilities.

Facebook claims that it will restore more water than the new data center will consume. It has invested in three water restoration projects that will together restore over 200 million gallons of water per year in the Colorado River and Salt River basins.

However, such efforts are not without caveats. Some of these projects technically do not replenish lost water - instead, they minimize water loss elsewhere, by planting different crops or fixing leaky pipes. While beneficial, they are ideally things that should be done in and of themselves, and not to offset new water usage.

There is also a finite number of such fixes, which reduces the space for other companies (including smaller data center operators) to do the same.

Facebook hopes to be water positive by 2030, while Microsoft said it would replenish more water than it uses by that date, and Google said it would replenish 120 percent of the water it consumes by the end of the decade. In each case, the goal appears to target direct water usage.

Again, there is little transparency into these efforts. "Not all water is the same," Shehabi said. "The water that's being used for irrigation - is that the same water that's actually being used at the data center? Is it coming from the same source? Maybe, maybe not."

None of the companies have to disclose how they are replenishing water, and there is no independent analysis of whether any projects are successful in returning water to the affected regions.

Shehabi hopes some of the companies will be more open about their usage and mitigation efforts. Those worried about disclosing proprietary data, he said, could share it with him in an anonymized fashion.

If not, he warned, spreading droughts and growing public awareness of data centers could lead to regulation that forces disclosure.

"Water might be the resource that pushes there to be more transparency, because it's more critical than energy," he said.

"Things feel more severe when water starts going down, and there's a drought, and people need to start rationing and there are concerns about how that's affecting the entire economy and people's own livelihoods.”