The steady rise of server power levels is one of the major data center trends over the past 10 years, and one which Uptime Intelligence has discussed regularly.

The interplay of underlying forces driving this escalation is complex, but the net effect is clear: with every new semiconductor technology generation, server silicon is pushing air cooling equipment close to its operational limits.

Standard-setting industry body ASHRAE’s 2021 thermal guidelines warned of temperature restrictions for some future servers. More recently, another catalyst for this rising power consumption has been the industry’s ongoing effort to train large machine learning models, spearheaded by generative AI applications.

These developments have, once again, brought the issue of high-powered racks to the fore and with it a renewed interest in the benefits of direct liquid cooling (DLC) in data centers. There is a significant opportunity to maximize compute power using DLC, both in terms of IT capacity and outright server performance.

But there are also drawbacks to consider when deploying liquid cooling in mainstream data center facilities. One major concern is the common requirement to connect DLC systems to a facility water supply. This can make installation and maintenance more costly and complex, which makes DLC a non-starter for many operators that already have an effective air cooling system.

The choice between existing air cooling and facility-water DLC is, however, a false dichotomy. A growing number of DLC designs have evolved to offer the option of using air, rather than facility water, to remove heat. These air-assisted DLC systems offer a trade-off between performance and ease of deployment/operation that many operators may find attractive.

Hyperscalers upgrade AI infrastructure with air-assisted DLC

For a recent validation of the air-assisted DLC approach, look to Microsoft’s announcement in November 2023 about its new Azure AI hardware. The technology giant has deployed its bespoke AI-accelerator (Maia) and main processor (Cobalt) in non-standard, large liquid-cooled racks to better manage its Azure AI workloads.

While AI hardware can be bulkier and more power-hungry, similar hardware from other manufacturers (such as the air-cooled version of Nvidia’s Grace Hopper Superchip) can be integrated into standard racks.

Why, then, are hyperscalers choosing these customizations? It boils down to thermal management for compute performance: liquid cooling eliminates the need for large heat sinks and fans to cool high-powered silicon. This means a more compact chassis and less energy wasted by server fans. The result is more performance for the same data center footprint — and the same power capacity.
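The fan-power argument above can be made concrete with a little arithmetic. The following is a minimal sketch, not a measurement: the IT power budget and both fan fractions are hypothetical values chosen for illustration, and the function name is an assumption. The sketch also ignores the power drawn by coolant pumps and by the fans in an air-assisted heat rejection unit.

```python
def compute_power_kw(it_power_budget_kw: float, fan_fraction: float) -> float:
    """Return the power left for compute once server fan power is subtracted.

    fan_fraction is the share of the IT load consumed by server fans.
    """
    if not 0.0 <= fan_fraction < 1.0:
        raise ValueError("fan_fraction must be in the range [0, 1)")
    return it_power_budget_kw * (1.0 - fan_fraction)


# Hypothetical inputs, for illustration only (not taken from the article).
IT_BUDGET_KW = 1000.0            # fixed IT power envelope of the data hall
AIR_COOLED_FAN_FRACTION = 0.10   # assumed fan share in air-cooled servers
DLC_FAN_FRACTION = 0.03          # assumed residual fan share with cold plates

air_cooled_kw = compute_power_kw(IT_BUDGET_KW, AIR_COOLED_FAN_FRACTION)
dlc_kw = compute_power_kw(IT_BUDGET_KW, DLC_FAN_FRACTION)
gain_pct = (dlc_kw - air_cooled_kw) / air_cooled_kw * 100.0

print(f"Compute power, air-cooled: {air_cooled_kw:.0f} kW")
print(f"Compute power, DLC:        {dlc_kw:.0f} kW")
print(f"Extra compute power in the same envelope: {gain_pct:.1f}%")
```

Under these assumed fractions, the same power envelope supports several percent more compute. The actual gain depends on the measured fan share of the servers in question.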

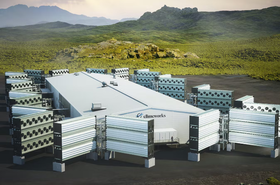

Microsoft’s custom rack incorporates DLC via a closed loop between cold plates directly attached to the hottest server components and the adjacent heat rejection unit, which it calls a “sidekick.” Importantly, the rack-level DLC system is a standalone structure. There are no plumbing connections external to the rack; instead, the hot fluid passes through a heat exchanger in the sidekick, where fans reject the heat into the white space. The Open Compute Project (OCP) refers to this category of liquid cooling as “air-assisted.”

There are various factors to consider when selecting this implementation of DLC over water-cooled options:

- Many facilities do not have chilled-water systems but rely on large air economizers. The infrastructure modifications to retrofit data centers to support water connections could be costly and disruptive.

- Even if facility water is present, additional equipment and an extensive plumbing network would be required to connect many racks or multi-rack coolant distribution units (CDUs), and to distribute the coolant between racks. This also creates additional maintenance needs and adds to the structural weight.

- A standalone, air-assisted design is easier to deploy in colocation facilities. This is a potentially important consideration for any organization that wants to deploy a standardized IT infrastructure across its footprint, whether it is on-premises or colocated.

Air-assisted DLC comes with some compromises, chiefly performance and overall heat rejection efficiency. Liquid-to-air heat exchangers require a larger surface area and, as a result, rack footprint to provide the same cooling capacity. Coolant loop temperatures, however, can be elevated for more effective heat transfer by modulating the flow rate. In addition, because the system uses existing facility air-cooling infrastructure, there is neither an option to elevate temperatures to optimize for free cooling, nor the possibility to run at very low coolant temperatures for peak silicon performance.
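The relationship between flow rate and coolant loop temperature follows from the sensible heat balance Q = ṁ · c_p · ΔT: for a fixed heat load, a lower flow rate produces a larger temperature rise across the cold plates, and so a hotter return to the liquid-to-air heat exchanger. The sketch below illustrates this under stated assumptions: the coolant is treated as plain water (real systems often use water-glycol blends with different properties), and the 40 kW rack load and the flow rates are hypothetical.

```python
# Approximate properties of water near room temperature (assumed coolant).
WATER_DENSITY_KG_PER_L = 0.997
WATER_CP_J_PER_KG_K = 4180.0


def coolant_delta_t_k(heat_load_w: float, flow_l_per_min: float,
                      density_kg_per_l: float = WATER_DENSITY_KG_PER_L,
                      cp_j_per_kg_k: float = WATER_CP_J_PER_KG_K) -> float:
    """Temperature rise of the coolant across the load, from Q = m_dot * cp * dT."""
    if flow_l_per_min <= 0:
        raise ValueError("flow_l_per_min must be positive")
    mass_flow_kg_per_s = flow_l_per_min / 60.0 * density_kg_per_l
    return heat_load_w / (mass_flow_kg_per_s * cp_j_per_kg_k)


# Hypothetical rack heat load captured by the cold plates.
RACK_HEAT_LOAD_W = 40_000.0

for flow in (30.0, 60.0, 120.0):  # hypothetical loop flow rates, L/min
    rise = coolant_delta_t_k(RACK_HEAT_LOAD_W, flow)
    print(f"{flow:5.0f} L/min -> coolant temperature rise of {rise:.1f} K")
```

Halving the flow rate doubles the temperature rise. The hotter return fluid widens the temperature difference to the room air at the heat exchanger, but the supply temperature to the silicon is still bounded by the data hall air temperature.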

Despite these compromises, air-assisted DLC addresses the key issue in the data hall: removing large concentrations of heat from the surface of high-performance silicon. Air-assisted DLC allows data center operators to take advantage of most of the cooling performance and IT efficiency benefits of DLC without the need for facility water connections.

A DLC option to consider for most data center operators

Microsoft’s engineering decisions both draw attention to and validate air-assisted DLC. The approach has already been gaining in popularity as a short- to medium-term method of adopting liquid cooling, while not necessarily requiring customized hardware.

There are multiple opportunities associated with adopting air-assisted DLC:

- Installation flexibility: Established equipment vendors (such as Motivair, Schneider Electric, Stulz, and Vertiv, among others) offer air-assisted DLC systems that can be fitted to standard racks, providing flexibility in installation. These systems can be located inside or outside the rack, transferred between racks, and disconnected for maintenance or upgrades, and they are generally rack-vendor agnostic. Because these systems have been developed commercially, the associated coolant plumbing typically integrates easily with standardized manifolds and quick-disconnect couplings. This installation flexibility means that enterprise and colocation operators can test liquid cooling integration on a small scale (as little as one server in one rack) before considering wider deployment.

- Densification and more capacity in legacy facilities: As deployments increase, compute power is densified into fewer racks, freeing up white space real estate. Air-assisted DLC makes it easier to roll out a densification program at scale in legacy data centers — even those without facility water. This is because the system can help to manage high-density racks by taking the pressure off computer room air handlers that need to deliver cold air. An air-cooled CDU, for example, removes heat from high-powered server electronics, such as processors, accelerators, and memory banks, and exchanges heat over a larger surface area compared with server heat sinks. This makes heat transfer more effective, and easier to handle for air-cooling systems.

Facilities still need to operate within the constraints of total power supply and total cooling capacity, no matter what equipment they run in their white space. Early adopters of DLC are finding that they cannot take advantage of reclaimed square footage without increased power supply from the local grid (or, in the future, on-site power generation if the grid is the limiting factor) and upgraded power distribution. Densification with air-assisted DLC systems, together with power equipment upgrades, creates an opportunity for data center operators to expand compute capacity within the same building footprint. Even without these upgrades, the reduction of server fan power, a parasitic component in the IT load, will allow for larger compute capacity within the same power envelope.

- Building experience: Understanding rack-level liquid cooling is a prerequisite for embarking on a heat reuse system. Operators facing new regulatory pressures should gain experience with operating liquid-cooled racks, before investing in larger fluid distribution systems. Although both Google and Microsoft continue to operate legacy air-cooled facilities, their newer data centers in Europe (such as Microsoft’s Kirkkonummi site in Finland) employ heat reuse and use renewable energy.

There are, of course, challenges with air-assisted DLC deployments:

- Temperature fluctuations: For liquid-to-air heat exchangers, one challenge is achieving optimal approach temperatures because both the speed and temperature of air moving through the heat exchanger are critical. Under certain conditions, additional fan power may be required to cool the hot liquid adequately. A significant increase in air temperature can have cascading impacts on heat transfer in the air-cooled DLC heat exchanger, requiring more compressor power or water usage to cool the air in the data hall further.

- Resiliency: The common lack of redundancy in close-coupled coolant loops means that if a liquid loop component fails, there is an immediate loss of cooling to the associated IT component. If a leak occurs, the impact can be worse in the form of damage to the IT hardware. Liquid cooling adds several components to the rack that must be maintained to guarantee uptime. Operators need to ensure staff are trained to manage these new responsibilities. Resiliency considerations are a work in progress for all types of DLC, but air-assisted systems are less likely to be designed with concurrent maintainability or fault tolerance in mind.

- Rack space and footprint: Liquid-to-air heat exchangers need to be larger than their liquid-to-liquid counterparts for the same capacity, and therefore they take up relatively more floor space. Smaller, rack-integrated, air-assisted CDUs will take up valuable rack space.

In addition to their limitations in fluid distribution, air-assisted DLC systems arguably strain power delivery more than water-cooled systems due to their use of fans. Data halls can be crammed with ever-increasing rack densities, but there needs to be electrical infrastructure to support the loads. Significant cabling and busbar (metal bars used to carry current) upgrades can be required depending on the rack power draws. This may involve using higher distribution voltages (three-phase 400V to 480V) and higher-rated breakers (e.g. 60A), as well as additional circuits to the rack. OCP and Open19 recommend a shift from 12V to 48V in-rack power distribution to improve efficiency. As operators deploy new server technologies, they need to ensure that these electrical requirements can be met.
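The electrical sizing considerations above can be sketched with two standard formulas: three-phase power, P = √3 · V · I · PF, and current for a given power, I = P / V. This is an illustration rather than a design method. The 80 kW rack, the 15 kW power shelf, the unity power factor, and the 80% continuous-load derating of the breaker are all assumptions, and the function names are hypothetical.

```python
import math


def three_phase_kw(line_voltage_v: float, breaker_a: float,
                   power_factor: float = 1.0,
                   continuous_derate: float = 0.8) -> float:
    """Usable power of one three-phase circuit, in kW.

    continuous_derate is an assumed 80% continuous-load limit on the breaker.
    """
    watts = (math.sqrt(3) * line_voltage_v * breaker_a
             * continuous_derate * power_factor)
    return watts / 1000.0


def circuits_needed(rack_kw: float, circuit_kw: float) -> int:
    """Number of circuits required to feed a rack (no redundancy assumed)."""
    return math.ceil(rack_kw / circuit_kw)


def busbar_current_a(power_w: float, voltage_v: float) -> float:
    """DC current drawn at a given in-rack distribution voltage."""
    return power_w / voltage_v


RACK_KW = 80.0  # hypothetical high-density rack
for volts in (400.0, 480.0):
    circuit = three_phase_kw(volts, breaker_a=60.0)
    count = circuits_needed(RACK_KW, circuit)
    print(f"{volts:.0f} V / 60 A: {circuit:.1f} kW per circuit, "
          f"{count} circuits for {RACK_KW:.0f} kW")

SHELF_W = 15_000.0  # hypothetical in-rack power shelf load
i_12 = busbar_current_a(SHELF_W, 12.0)
i_48 = busbar_current_a(SHELF_W, 48.0)
# Resistive loss scales with current squared for the same conductor.
loss_ratio = (i_12 / i_48) ** 2
print(f"12 V: {i_12:.1f} A, 48 V: {i_48:.1f} A, "
      f"resistive loss ratio {loss_ratio:.0f}x")
```

The second calculation shows why a 48V busbar is attractive. For the same power, current falls to a quarter of the 12V value, so resistive losses in a given conductor fall by a factor of 16.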

Outlook

Microsoft's decision to adopt a bespoke, closed-loop liquid cooling system, while eschewing standard hardware ecosystems, reflects a strategic move to balance thermal efficiency with the constraints of existing infrastructure. This approach not only addresses the immediate thermal challenges posed by high-performance AI/machine learning workloads, but also sets a precedent for the industry. It illustrates the feasibility and benefits of adopting liquid cooling solutions at scale and speed, even within the limitations of retrofitted data centers.

For other data center operators, Microsoft’s move offers examples of both the opportunities available and the hurdles to overcome. Sidekick solutions, which are growing in popularity, offer a practical pathway for data centers to transition towards more efficient cooling methods (particularly when IT fan power is taken into account) without extensive infrastructure overhauls. These solutions can be adopted incrementally, which allows for scalability and flexibility.

However, the challenges of optimal heat exchange, the need for enhanced power distribution, and the risks associated with liquid cooling systems should not be underestimated. Thorough planning for flexibility in expectation of uncertain IT power and cooling trends, investment in exploring additional facility techniques, and the requisite staff training are essential to successfully navigate these complexities.