HPC company Penguin Computing has announced the release of Open Compute-based Tundra Relion X1904GT and Tundra Relion 1930g servers.

The X1904GT and 1930g servers pack in the newly released Nvidia Tesla P40 and P4 GPU accelerators, respectively, primarily for deep learning and artificial intelligence applications.

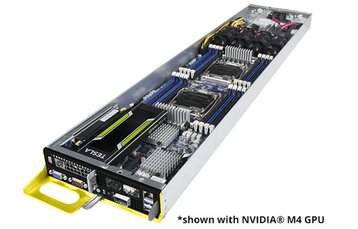

A deep learning server

“Pairing Tundra Relion X1904GT with our Tundra Relion 1930g, we now have a complete deep learning solution in Open Compute form factor that covers both training and inference requirements,” William Wu, director of product management at Penguin Computing, said.

“With the ever-evolving deep learning market, the X1904GT with its flexible PCI-E topologies eclipses the cookie-cutter approach, providing a solution optimized for customers’ respective applications. Our collaboration with Nvidia is combating the perennial need to overcome scaling challenges for deep learning and HPC.”

Roy Kim, director of accelerated computing at Nvidia, added: “Penguin Computing’s Open Compute servers, powered by NVIDIA Pascal architecture Tesla accelerators, are leading-edge deep learning solutions for the data center.

“Tesla P40 and P4 GPU accelerators deliver 40x faster throughput for training and 40x responsiveness for inferencing compared to what was previously possible.”

The Tundra Relion X1904GT already supported the Nvidia Tesla P100 and M40, and incorporates Nvidia's NVLink high-speed interconnect technology for faster peer-to-peer performance.

As deep learning becomes increasingly important in the data center, so do GPUs, which owe much of their existence to video games.