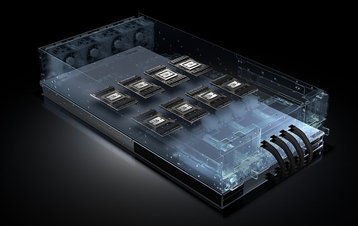

Nvidia has joined the Open Compute Project, revealing a new hyperscale GPU accelerator, the HGX-1.

The appliance consists of eight Tesla P100 GPUs connected via NVLink, Nvidia's high-bandwidth interconnect that links the GPUs directly to one another (and, on some platforms, to the CPU).

HGX-1 was announced in partnership with Microsoft, which will use the accelerator as part of its Project Olympus open server design.

Accelerating AI

“The deep learning and AI revolution, even though it is huge, is also fairly young. A few years ago people were still asking the question ‘What is deep learning?’,” Roy Kim, director of Tesla Product Management, said at the OCP Summit.

“Now every cloud vendor is asking ‘How can it be AI-ready?’”

Kim said that the answer for cloud companies is the HGX-1, which was designed “to meet the exploding demand for AI computing in the cloud — in fields such as autonomous driving, personalized healthcare, superhuman voice recognition, data and video analytics, and molecular simulations.”

The Project Olympus design allows up to four HGX-1 units to be connected together, for a total of 32 GPUs.

“HGX-1 is the first instance of what we hope to be a roadmap,” Kim said.

“Standards evolve and workloads evolve, and we’ll continue to work with Microsoft. Our goal was to provide a standard that we believe [is] the best design for hyperscalers today.”

While the accelerator is not exclusive to Project Olympus, it was built to that server framework’s design guidelines. Olympus, first introduced in October at the DCD Zettastructure event in London, gained several additional partners at the OCP Summit.

The server will support AMD’s new Naples CPUs, as well as ARM-based chips from Qualcomm and Cavium.