Facebook has announced a complete refresh of all of its servers, and shared details of the new range with the Open Compute Project, the open source hardware group which it established in 2011.

The four models comprise a storage server, two compute servers and a specialist appliance designed to train neural networks. They were launched at the OCP Summit in Santa Clara by Vijay Rao, Facebook’s director of technology strategy, and shared with the OCP community so other data center builders can adopt and develop them further.

Unlocking software from hardware

“Open hardware increases the pace of automation,” said Rao. “It makes it possible for everyone to work at the speed of software.” The impact of open hardware on software was a major theme in the OCP keynotes, and Rao illustrated the purpose of the new servers with examples of software in use by Facebook.

Facebook currently consumes around 7.5 quadrillion instructions per second: “That’s one million instructions every second for every single person alive. That’s crazy,” he said.
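As a rough check of that comparison (a back-of-the-envelope sketch, not something shown in the keynote), dividing the total by one million gives the population the claim implies. The variable names are illustrative; only the two figures come from Rao's remarks.

```python
# Back-of-the-envelope check of the figures quoted above.
# Both inputs come from Rao's remarks; the names are illustrative.
INSTRUCTIONS_PER_SECOND = 7_500_000_000_000_000  # "around 7.5 quadrillion"
INSTRUCTIONS_PER_PERSON = 1_000_000              # "one million ... for every single person"

implied_population = INSTRUCTIONS_PER_SECOND // INSTRUCTIONS_PER_PERSON
print(f"Implied population: {implied_population:,}")
```

The result is 7.5 billion people, which is in the range of commonly cited world population estimates.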

The Bryce Canyon storage server is intended for high-density storage, including photos and videos. It has more powerful processors and increased memory, with 20 percent higher HDD density and a fourfold increase in compute capability over its predecessor, Honey Badger.

“The Bryce Canyon storage system supports 72 3.5-inch hard drives (12 Gb SAS/6 Gb SATA),” Jason Adrian, storage hardware systems engineer at Facebook, said in a blog post.

“The system can be configured as a single 72-drive storage server, as dual 36-drive storage servers with fully independent power paths, or as a 36/72 drive JBOD. This flexibility further simplifies and streamlines our data center operations, as it reduces the number of storage platform configurations we will need to support going forward.”

The Yosemite v2 compute server is intended for scale-out data centers. It’s designed so that the sled can be pulled out of the chassis for components to be serviced, without having to power the servers down. The servers continue to operate thanks to this ‘hot service’ feature.

“Yosemite v1 has become a workhorse within Facebook,” said Rao, who listed new features of v2 and promised some “hidden gems”. He added that the HHVM software which runs on it compiles PHP efficiently and is available as open source.

“With the previous design, repairing a single server prevents access to the other three servers since all four servers lose power,” Arlene Murillo, Facebook technical program manager, said on a Facebook blog.

Tioga Pass is a new compute server with dual-socket motherboards and more I/O bandwidth for its flash, network cards, and GPUs than its predecessor, Leopard.

Rao described the RocksDB software, which runs on Leopard and has now been combined with MySQL to create the MyRocks database. He said MyRocks would halve the amount of storage and the number of server instances required for Facebook’s databases.

Tioga Pass also allows disaggregated flash, so a server can be configured with varying amounts of flash in a sled called Lightning, getting away from the limited SKUs of previous server modules.

Murillo said: “The addition of a 100G network interface controller (NIC) also enables higher-bandwidth access to flash storage when used as a head node for Lightning. This is also Facebook’s first dual-CPU server to use OpenBMC after it was introduced with our Mono Lake server last year.”

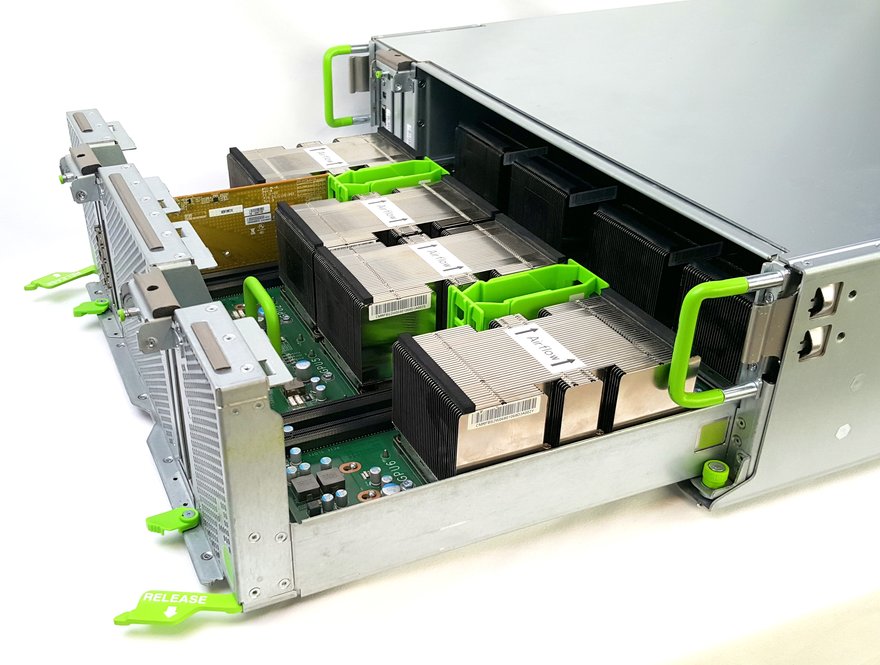

Big Basin is the server that trains neural networks, machine learning systems that mimic brain structures. Neural nets handle tasks such as identifying images by examining huge amounts of data. Big Basin replaces the earlier Big Sur, offering more memory and processor power.

“We are committed to advancing AI,” said Rao, showing a video in which three blind women were able to like and respond to images on Facebook, because a machine learning algorithm had identified features of the pictures and described them in words.

“Compared with Big Sur, Big Basin will bring us much better gain on performance per watt, benefiting from single-precision floating-point arithmetic per GPU increasing from 7 teraflops to 10.6 teraflops,” Kevin Lee, a Facebook technical program manager, said.

“Half-precision will also be introduced with this new architecture to further improve throughput.”
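For a sense of scale, the two per-GPU single-precision figures Lee quotes can be compared directly. This is a minimal sketch using only those two numbers; it says nothing about performance per watt, since no power figures are given here.

```python
# Peak single-precision throughput per GPU, in teraflops, as quoted by Lee.
BIG_SUR_TFLOPS = 7.0
BIG_BASIN_TFLOPS = 10.6

ratio = BIG_BASIN_TFLOPS / BIG_SUR_TFLOPS
increase_percent = (ratio - 1) * 100
print(f"Big Basin / Big Sur: {ratio:.2f}x ({increase_percent:.0f}% higher)")
```

On these figures, each Big Basin GPU offers roughly one and a half times the single-precision throughput of its Big Sur counterpart.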