Nvidia has developed a version of its H100 GPU specifically for large language model and generative AI development.

The dual-GPU H100 NVL has more memory than the H100 SXM or PCIe, as well as more memory bandwith, key features for large AI models.

The existing H100 comes with 80GB of memory (HBM3 for the SXM, HBM2e for PCIe). With the NVL, both GPUs pack in 94GB, for a total of 188GB HBM3.

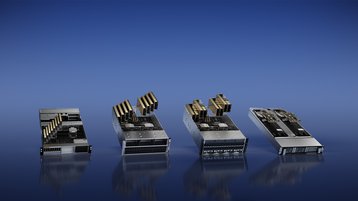

It also has a memory bandwidth of 3.9TBps per GPU, for a combined 7.8TBps. For comparison, H100 PCIe has 2TBps, while the H100 SXM has 3.35TBps. The new product has three NVLink connectors on the top.

Each GPU also consumes about 50W more than the H100 PCIe, and is expected to use 350-400W per GPU.

Compared to the A100 GPU that was used by OpenAI to develop GPT-3, Nvidia claims that it delivers up to 12× faster inference performance.

“This amazing GPU will considerably lower the total cost of ownership (TCO) for running and performing inference on GPT-based and large language models," Nvidia's VP of hyperscale and HPC Ian Buck said. "It will play a crucial role in democratizing GPT and large language models for widespread use."