Facebook has shared some more on the tools it uses to manage the huge networks in its data centers. This time, it has open-sourced the code for its Katran load balancer, and described the principles behind a network provisioning system which works like a vending machine.

The social network has previously shared many design principles, and has open-sourced network switch hardware and software through the Open Compute Project, which it founded in 2011. The latest item to be released as open source code is the Katran forwarding plane software library, which powers Facebook’s network load balancer and is described in a blog post published this week. Meanwhile, the social giant has published another blog post describing the principles behind a “zero touch” provisioning system.

Serving the masses

Katran is deployed in Facebook’s points of presence (PoPs) and has helped make Facebook’s network load balancer more scalable and efficient, say Nikita Shirokov and Ranjeeth Dasineni in the blog post. By sharing it, they hope Facebook can help others “improve the performance of their load balancers and also use Katran as a foundation for future work.”

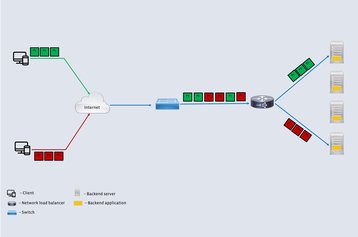

Facebook runs applications that serve a large number of users, but to the outside world each one appears to run on a single server. The load balancers take traffic addressed to a single virtual IP address (VIP) and share it out amongst a large fleet of back-end servers, so that each user’s requests go consistently to the same server (the red and green packets in the diagram).
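To make the idea of sending each user’s requests “consistently” to one back-end concrete, here is a minimal sketch using rendezvous (highest-random-weight) hashing. This is a swapped-in, textbook technique chosen for brevity, not Katran’s own algorithm; the flow-key format and the back-end addresses are hypothetical.

```python
import hashlib
from typing import Sequence


def _score(flow_key: str, backend: str) -> int:
    """Deterministic 64-bit score for a (flow, backend) pair."""
    digest = hashlib.sha256(f"{flow_key}|{backend}".encode()).digest()
    return int.from_bytes(digest[:8], "big")


def pick_backend(flow_key: str, backends: Sequence[str]) -> str:
    """Rendezvous hashing: the backend with the highest score for this flow
    wins, so a given flow always lands on the same backend while the
    backend list is unchanged."""
    if not backends:
        raise ValueError("no backends available")
    return max(backends, key=lambda b: _score(flow_key, b))


# Hypothetical flow key (client address/port -> VIP address/port) and fleet.
backends = ["10.0.0.1", "10.0.0.2", "10.0.0.3"]
flow = "203.0.113.7:51234->198.51.100.10:443/tcp"
chosen = pick_backend(flow, backends)
```

A useful property of this scheme is that removing a back-end the flow was not using leaves that flow’s choice untouched, so only connections on a failed server need to move.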

These load balancers operate at Layer 4 of the network, so they have to handle individual packets, which means they have to work fast. “Traditionally, engineers have preferred hardware-based solutions for this task because they typically use accelerators such as application-specific integrated circuits (ASICs) or field-programmable gate arrays (FPGAs) to reduce the burden on the main CPU,” say the authors.
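Working at Layer 4 means the balancer decides from packet headers alone, and the key it decides on is the flow’s 5-tuple. The sketch below pulls that tuple out of a raw IPv4 packet carrying TCP or UDP; it is an illustrative Python stand-in (Katran does this work in eBPF), and it deliberately skips IPv6, fragments and checksum verification.

```python
import socket
import struct
from typing import NamedTuple


class FiveTuple(NamedTuple):
    src_ip: str
    dst_ip: str
    src_port: int
    dst_port: int
    proto: int


def parse_five_tuple(packet: bytes) -> FiveTuple:
    """Extract the Layer 4 flow key from a raw IPv4 packet (TCP or UDP)."""
    if len(packet) < 20:
        raise ValueError("truncated IPv4 header")
    if packet[0] >> 4 != 4:
        raise ValueError("not an IPv4 packet")
    ihl = (packet[0] & 0x0F) * 4  # IP header length in bytes, incl. options
    if ihl < 20:
        raise ValueError("invalid IHL")
    proto = packet[9]
    if proto not in (6, 17):  # 6 = TCP, 17 = UDP
        raise ValueError("not TCP or UDP")
    if len(packet) < ihl + 4:
        raise ValueError("truncated L4 header")
    src_ip = socket.inet_ntoa(packet[12:16])
    dst_ip = socket.inet_ntoa(packet[16:20])
    # TCP and UDP both start with 16-bit source and destination ports.
    src_port, dst_port = struct.unpack("!HH", packet[ihl:ihl + 4])
    return FiveTuple(src_ip, dst_ip, src_port, dst_port, proto)
```

For example, a TCP packet from 203.0.113.7:51234 to 198.51.100.10:443 parses to `FiveTuple('203.0.113.7', '198.51.100.10', 51234, 443, 6)`.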

However, hardware-based accelerators are less flexible and scalable than software running on general-purpose hardware, which can sit alongside other applications in the data center.

Facebook previously built a high-performance load balancer using the IPVS kernel module in Linux, which worked well for over four years. It has now improved on this by using newer Linux kernel features, the eXpress Data Path (XDP) framework and the BPF virtual machine (eBPF), which allowed the engineers to run the software load balancer and the backends on a large number of machines.
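An XDP program is invoked for every incoming packet and returns a verdict telling the kernel what to do with it. The Python model below mimics that decision for a load balancer: traffic for a known VIP is assigned a back-end and transmitted back out, while everything else is passed to the normal network stack, which is what lets other services share the machine. The verdict values mirror `enum xdp_action` in the Linux header `linux/bpf.h`; the VIP table, the addresses and the simple modulo hash are hypothetical simplifications, not Katran’s code.

```python
import hashlib
from enum import IntEnum


class XdpAction(IntEnum):
    # Same numeric values as enum xdp_action in linux/bpf.h
    ABORTED = 0
    DROP = 1
    PASS = 2
    TX = 3
    REDIRECT = 4


# Hypothetical VIP table: (vip, port, proto) -> back-end addresses.
VIPS = {
    ("198.51.100.10", 443, 6): ["10.0.0.1", "10.0.0.2", "10.0.0.3"],
}


def handle_packet(src_ip, src_port, dst_ip, dst_port, proto):
    """Return (verdict, backend) for one packet, modelling an XDP hook."""
    backends = VIPS.get((dst_ip, dst_port, proto))
    if backends is None:
        # Not load-balanced traffic: hand it to the regular kernel stack,
        # so other applications on the same host keep working.
        return XdpAction.PASS, None
    if not backends:
        # VIP configured but no healthy back-ends.
        return XdpAction.DROP, None
    key = f"{src_ip}:{src_port}->{dst_ip}:{dst_port}/{proto}".encode()
    # Simple modulo hash for brevity; it is stable for a fixed back-end list.
    idx = int.from_bytes(hashlib.sha256(key).digest()[:8], "big") % len(backends)
    # A real XDP load balancer would rewrite the packet here before sending
    # it back out of the NIC; this model only reports the decision.
    return XdpAction.TX, backends[idx]
```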

“We believe that Katran offers an excellent forwarding plane to users and organizations who intend to leverage the exciting combination of XDP and eBPF to build efficient load balancers,” say the authors.

The network slot-machine

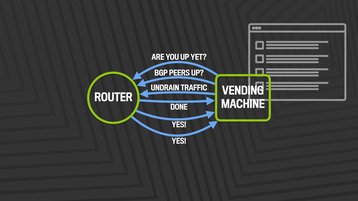

Facebook also described a zero-touch network provisioning scheme, designed to help it keep up with the rapid growth of its networks, but did not share code for it. Facebook’s network needs ever more capacity, and provisioning new network devices by hand became too difficult; hence the “vending machine” approach described in a blog post by Joe Hrbek, Brandon Bennett, David Swafford and James Quinn.

“Facebook’s rapidly growing backbone traffic demands strained our ability to keep up, and the manual approaches were no longer enough. Pushing to build network more quickly led to increased errors and escalating impacts to our services,” says the blog.

“Ultimately, these challenges drove Facebook’s network engineers to develop a completely new approach for network deployment workflows. We called it Vending Machine, a name inspired by the machines that dispense candy and soft drinks. In the case of Facebook’s Vending Machine, the input is a device role, location, and platform, and out pops a freshly provisioned network device, ready to deliver production traffic.”
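Since Facebook did not publish Vending Machine’s code, the sketch below only illustrates the input-to-output contract the quote describes: a device role, location and platform go in, and an ordered series of steps turns them into a device marked ready for traffic. Every step name, the naming scheme and the configuration format here are hypothetical placeholders, not Facebook’s design.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass(frozen=True)
class Request:
    role: str      # hypothetical, e.g. "bb" for a backbone router
    location: str  # hypothetical site identifier
    platform: str  # hypothetical hardware platform name


@dataclass
class Device:
    request: Request
    name: str = ""
    config: str = ""
    steps_done: List[str] = field(default_factory=list)
    ready: bool = False


# Hypothetical workflow steps; each one mutates the device record.
def assign_name(dev: Device) -> None:
    dev.name = f"{dev.request.role}.{dev.request.location}"


def render_config(dev: Device) -> None:
    dev.config = f"hostname {dev.name}\n! platform {dev.request.platform}\n"


def validate(dev: Device) -> None:
    if not dev.config.startswith(f"hostname {dev.name}\n"):
        raise RuntimeError("rendered config failed validation")


def mark_ready(dev: Device) -> None:
    dev.ready = True


STEPS: List[Callable[[Device], None]] = [assign_name, render_config, validate, mark_ready]


def vend(request: Request) -> Device:
    """Run every step in order; any exception stops the workflow."""
    dev = Device(request)
    for step in STEPS:
        step(dev)
        dev.steps_done.append(step.__name__)
    return dev
```

Modelling the workflow as a list of small, independent steps is one way to get the repeatability the authors were after: each step can be tested on its own, and a failure halts provisioning before a half-configured device reaches production.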