After decades of planning, construction has finally begun on one of the largest scientific endeavors in human history.

The SKA Observatory, better known as the Square Kilometer Array, will be the largest and most sensitive radio telescope in the world, collecting more data on our universe than ever before.

And all that data will need to be processed.

This article appeared in Issue 42 of the DCD>Magazine.

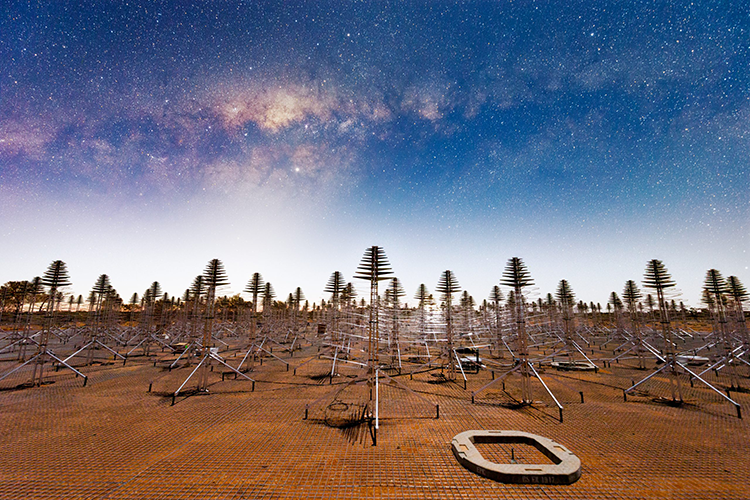

Across a huge tract of land in Australia, more than 130,000 Christmas tree-shaped antennas are set to be installed to capture signals from the dawn of time in the low-frequency portion of the radio spectrum. Over in South Africa, nearly 200 large dish antennas will observe the mid-frequency range, also helping to unlock the mysteries of the universe.

SKA Low and SKA Mid will operate in tandem on different continents, thousands of kilometers apart, each spread across a vast remote site, requiring careful and complex collaboration.

"In the Low case, once we do the digitization of the signals, we're producing raw data at the rate of about two petabytes per second," SKAO's computing infrastructure lead Miles Deegan said.

"We then go through several stages of reducing that data - we've got some FPGAs, and what have you - we do a little processing, and then from the deserts we go to Perth and Cape Town respectively.”

In those cities, large supercomputers will crunch what comes in - with the two ‘Science Data Processor’ HPC systems expected to total at least 250 petaflops, although that number could still rise.

“They will do further reduction on this data, and hopefully turn it into something which is scientifically useful,” Deegan said. That useful data will accumulate at a rate of about 300 petabytes per telescope per year once the system reaches full operations, expected sometime between 2027 and 2030.

"And then that's not the end of the story,” he said. The data has to be sent to the scientific community around the globe. “So we will need dedicated links to distribute this 300 petabytes per annum to a network of what we call SKA regional centers.

"It's a journey of tens of thousands of kilometers for a piece of data, to go from the analog radio wave, to being turned into something digital, to being turned into something scientifically useful, and then to be sent off to the scientists."
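To give a feel for the scale of those figures, here is a rough back-of-envelope sketch. It assumes decimal units (1 PB = 10^15 bytes), a 365.25-day year, that the Low telescope's roughly 2 PB/s raw stream runs around the clock, and that distribution traffic is perfectly even with no protocol overhead. None of these assumptions come from SKAO, and the function names are hypothetical, so treat the results as order-of-magnitude illustrations rather than design figures.

```python
# Back-of-envelope arithmetic on the SKA data volumes quoted in the article.
# Assumptions (illustrative, not SKAO figures): 1 PB = 10**15 bytes,
# a 365.25-day year, a raw stream that never pauses, perfectly even
# network traffic, and no protocol overhead or retransmission.

SECONDS_PER_YEAR = 31_557_600  # 365.25 days x 86,400 s
BITS_PER_PB = 8 * 10**15


def reduction_factor(raw_pb_per_s: float, science_pb_per_year: float) -> float:
    """How many times smaller a year of science output is than a year of raw data."""
    raw_pb_per_year = raw_pb_per_s * SECONDS_PER_YEAR
    return raw_pb_per_year / science_pb_per_year


def sustained_gbit_per_s(pb_per_year: float) -> float:
    """Average throughput (Gbit/s) needed to move pb_per_year within one year."""
    return pb_per_year * BITS_PER_PB / SECONDS_PER_YEAR / 1e9


if __name__ == "__main__":
    # SKA-Low: ~2 PB/s after digitization, ~300 PB/year of science data
    print(f"overall reduction (Low): ~{reduction_factor(2, 300):,.0f}x")
    # Distribution to regional centers: one telescope, then Low and Mid together
    print(f"link rate, one telescope: ~{sustained_gbit_per_s(300):.0f} Gbit/s")
    print(f"link rate, both telescopes: ~{sustained_gbit_per_s(600):.0f} Gbit/s")
```

Under these assumptions the pipeline keeps roughly one byte in every 200,000, and shipping a single telescope's output needs about 76 Gbit/s of sustained capacity (about 152 Gbit/s for both) before any allowance for bursts, outages, or sending copies to several regional centers. Because the telescopes won't observe continuously, the real reduction ratio depends on how much of the year is actually spent observing.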

That journey starts at the dish or antenna, where the data must be heavily processed and compressed before it travels to the data center.

That's handled by the Central Signal Processor (CSP), which is actually a number of different semiconductors, algorithms, and processes, rather than a single chip.

Some of this is done on site, in a desert - radio astronomers have to build in remote regions because even the smallest man-made radio emissions can drown everything out. That means dealing with extreme heat, all while making sure the equipment itself emits as little as possible.

"We've got the RFI [radio-frequency interference] challenges; that gives us a bit of a problem," SKAO network architect Peter Lewis said of the equally difficult data transfer efforts.

"If you look at the SKA Mid, within the dishes there's a kind of a Faraday cage, a shielded cabinet which has got no air-con in there. We're gonna put a load of kit in there in the middle of nowhere where temperatures can get to 40°C (104°F).

"We're looking at putting industrial Ethernet switches in where we can. Where we can't, we just have to use standard technology… it will just be running fairly hot.”

With SKA Mid, the group has decided to move part of the CSP out of the desert and closer to the Cape Town data center.

"We are in the process of actually trying to move some stuff around," Deegan said. "In the case of the Low telescope, we're just about getting around to finishing off the analysis as to whether we should make the same move. My guess is it will happen, but the jury's still out.”

It is perhaps fitting that a project aiming to study the beginnings of the universe has required a level of long-term thinking and planning humans generally aren't that well suited to.

First conceived in the early 1990s and designed in the late 2010s, the SKAO has gone through numerous iterations, driven by new ideas, political infighting, and technological advances.

This has made designing the compute and networking infrastructure for a shifting project set years in the future quite challenging.

"We did some designs back in 2017 and, clearly, we're not going to deploy the things that we designed then, because technology's just moved on so quickly,” Lewis said. "Even the designs that we're looking at today will be different by the time it goes live."

It’s a constant challenge, he said, of dealing with “people asking us what does the rack layout look like, what's the power draw, what cooling do you need? And all we can do is give what we can do today, with some kind of view as to how the power curve will drop over time.”

But, in other ways, time has been the friend of the networking and computing team at SKAO, which in 2013 thought it would have to have a fiber network with greater capacity than global Internet traffic.

"To be honest, when we first started, the data rates were quite enormous," Lewis said. "With a project that's taking this long, obviously, technologies have kind of caught up."

In those early days, it seemed like there would be simply no way to transfer the data far from the dishes. SKAO helped fund projects like the IBM Dome in 2014, a hot-water-cooled microserver that they hoped could process 14 exabytes of data on site and reduce it to one petabyte of useful information.

Dome was ultimately a failure, although parts of the project found their way into IBM's (now waning) HPC business, including the SuperMUC supercomputer near Munich. But the amount of data has changed, as has the ability to send it over greater distances, meaning that SKAO no longer needs something like Dome to succeed.

That could change once again. "The ambition is to ultimately build a second phase of these SKAO telescopes, and maybe even further telescopes,” Deegan said. “The observatory is supposed to have a lifetime of at least 50 years and things aren't going to be static.

“If we can successfully deliver these two telescopes, we will then look to expand them further. We’re looking at a factor of 10 increase in the number of antennas and that really increases the data and the amount of computing for algorithmic scaling reasons, especially if you start spreading these things out over large distances,” he said.

“It’s not like we will deliver whatever is needed on day one, and then just feed and water those systems and plod along for forevermore. It's just gonna be an ongoing problem for 50 years."
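Deegan doesn't spell out which algorithms he has in mind, but one standard reason in radio interferometry is that the correlator combines signals from every pair of receiving elements (dishes, or stations of antennas), and each pair, called a baseline, adds work. The number of pairs grows roughly with the square of the element count. The minimal sketch below uses hypothetical element counts, not SKAO's actual configuration, to show why a tenfold increase implies roughly a hundredfold increase in baselines; it ignores other factors, such as the finer time and frequency resolution that longer baselines typically require.

```python
# Why more antennas means disproportionately more correlation work.
# An interferometer correlates every unique pair of elements (a baseline),
# so N elements give N * (N - 1) / 2 baselines.
# The element counts below are hypothetical, chosen only for illustration.


def baselines(n_elements: int) -> int:
    """Number of unique element pairs (baselines) for n_elements."""
    return n_elements * (n_elements - 1) // 2


if __name__ == "__main__":
    before, after = 200, 2_000  # hypothetical: a tenfold increase
    b0, b1 = baselines(before), baselines(after)
    print(f"{before} elements -> {b0:,} baselines")
    print(f"{after} elements -> {b1:,} baselines")
    print(f"growth in baselines: ~{b1 / b0:.0f}x")
```

Correlation is only one stage of the pipeline, so this illustrates the trend rather than predicting SKAO's actual computing needs.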