Over the past few years, Google has been quietly overhauling its data centers, replacing its networking infrastructure with a radical in-house approach that has long been the dream of those in the networking community.

It’s called Mission Apollo, and it’s all about using light instead of electrons, and replacing traditional network switches with optical circuit switches (OCS). Amin Vahdat, Google’s systems and services infrastructure team lead, told us why that is such a big deal.

This feature appeared in the latest issue of DCD Magazine.

Keeping things light

There’s a fundamental challenge with data center communication, an inefficiency baked into the fact that it straddles two worlds. Processing is done on electronics, so information at the server level is kept in the electrical domain. But moving information around is faster and easier in the world of light, with optics.

In traditional network topologies, signals jump back and forth between electrical and optical. “It's all been hop by hop, you convert back to electronics, you push it back out to optics, and so on, leaving most of the work in the electronic domain,” Vahdat said. “This is expensive, both in terms of cost and energy.”

With OCS, the company “leaves data in the optical domain as long as possible,” using tiny mirrors to redirect beams of light from a source point and send them directly to the destination port as an optical cross-connect.

Ripping out the spine

“Making this work reduces the latency of the communication, because you now don't have to bounce around the data center nearly as much,” Vahdat said. “It eliminates stages of electrical switching - this would be the spine of most people's data centers, including ours previously.”

The traditional 'Clos' architecture found in other data centers relies on a spine made with electronic packet switches (EPS), built around silicon from companies like Broadcom and Marvell, that is connected to 'leaves,' or top-of-rack switches.

EPS systems are expensive and power-hungry, and they must perform latency-heavy per-packet processing while the signals are in electronic form, before converting them back to light for onward transmission.

OCS needs less power, Vahdat said: “With these systems, essentially the only power consumed by these devices is the power required to hold the mirrors in place. Which is a tiny amount, as these are tiny mirrors.”

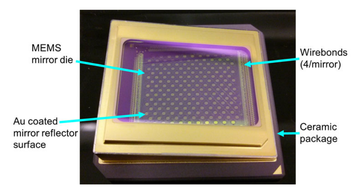

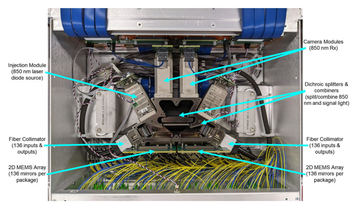

Light enters the Mission Apollo switch through a bundle of fibers, and is reflected by multiple silicon wafers, each of which contains an array of tiny mirrors. These mirrors are 3D Micro-Electro-Mechanical Systems (MEMS) which can be individually re-aligned quickly so that each light signal can be immediately redirected to a different fiber in the output bundle.

Each array contains 176 minuscule mirrors, although only 136 are used for yield reasons. “These mirrors, they're all custom, they're all a little bit different. And so what this means is across all possible in-outs, the combination is 136 squared,” he said.

That means 18,496 possible combinations between two mirror packages.

The maximum power consumption of the entire system is 108W (and, usually, it uses a lot less), which is well below the roughly 3,000W that a comparable EPS draws.
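The figures quoted above can be checked with a few lines of arithmetic. The sketch below only restates the article's numbers; the function and constant names are illustrative, not anything Google has published.

```python
# Figures quoted in the article; names are illustrative.
TOTAL_MIRRORS_PER_ARRAY = 176
USABLE_MIRRORS_PER_ARRAY = 136   # the rest are held back for yield reasons
OCS_MAX_POWER_W = 108
EPS_POWER_W = 3000               # "around 3,000 watts"


def cross_connect_pairs(ports: int) -> int:
    """Number of (input, output) pairings in a ports x ports switch."""
    return ports * ports


def power_ratio(eps_watts: float, ocs_watts: float) -> float:
    """How many times more power the EPS draws than the OCS."""
    return eps_watts / ocs_watts


pairs = cross_connect_pairs(USABLE_MIRRORS_PER_ARRAY)
spare = TOTAL_MIRRORS_PER_ARRAY - USABLE_MIRRORS_PER_ARRAY
ratio = power_ratio(EPS_POWER_W, OCS_MAX_POWER_W)

print(pairs, spare, round(ratio, 1))  # 18496 40 27.8
```

On these numbers, an EPS draws roughly 28 times the OCS's worst-case power, and 40 mirrors per array are left unused.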

Over the past few years, Google has deployed thousands of these OCS systems. The current generation, Palomar, “is widely deployed across all of our infrastructures,” Vahdat said.

Google believes this is the largest use of OCS in the world, by a comfortable margin. “We've been at this for a while,” Vahdat said.

Build it yourself

Developing the overall system required a number of custom components, as well as custom manufacturing equipment.

Producing the Palomar OCS meant developing custom testers, alignment, and assembly stations for the MEMS mirrors, fiber collimators, optical core and its constituent components, and the full OCS product. A custom, automated alignment tool was developed to place each 2D lens array down with sub-micron accuracy.

“We also built the transceivers and the circulators,” Vahdat said, the latter of which helps light travel in one direction through different ports. “Did we invent circulators? No, but is it a custom component that we designed and built, and deployed at scale? Yes.”

He added: “There's some really cool technology around these optical circulators that allows us to cut our fiber count by a factor of two relative to any previous techniques.”

As for the transceivers, used for transmitting and receiving the optical signals in the data center, Google co-designed low-cost wavelength-division multiplexing transceivers over four generations of optical interconnect speeds (40, 100, 200, 400GbE) with a combination of high-speed optics, electronics, and signal processing technology development.

“We invented the transceivers with the right power and loss characteristics, because one of the challenges with this technology is that we now introduce insertion loss on the path between two electrical switches.”

Instead of a fiber pathway, there are now optical circuit switches that cause the light to lose some of its intensity as it bounces through the facility. "We had to design transceivers that could balance the costs, the power, and the format requirements to make sure that they could handle modest insertion loss," Vahdat said.

"We believe that we have some of the most power-efficient transceivers out there. And it really pushed us to make sure that we could engineer things end-to-end to take advantage of this technology."
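The insertion-loss trade-off Vahdat describes is commonly reasoned about as a link budget: transmit power minus every loss on the path must stay above the receiver's sensitivity. The sketch below is a generic illustration of that calculation. All dB and dBm values are hypothetical placeholders, not Google's transceiver specifications.

```python
def link_margin_db(tx_power_dbm: float, rx_sensitivity_dbm: float,
                   losses_db: list[float]) -> float:
    """Margin left after subtracting every loss on the optical path.

    A positive margin means the receiver still sees enough light.
    """
    return tx_power_dbm - sum(losses_db) - rx_sensitivity_dbm


# Hypothetical values, chosen only to illustrate the calculation.
TX_POWER_DBM = 0.0
RX_SENSITIVITY_DBM = -8.0
FIBER_AND_CONNECTORS_DB = [1.0, 1.0]
OCS_INSERTION_LOSS_DB = 2.0  # assumed extra loss from the mirror path

plain_fiber = link_margin_db(TX_POWER_DBM, RX_SENSITIVITY_DBM,
                             FIBER_AND_CONNECTORS_DB)
through_ocs = link_margin_db(TX_POWER_DBM, RX_SENSITIVITY_DBM,
                             FIBER_AND_CONNECTORS_DB + [OCS_INSERTION_LOSS_DB])

print(plain_fiber, through_ocs)  # 6.0 4.0
```

In this toy example the OCS eats a third of the available margin, which is why the transceivers had to be designed with the switch's loss in mind.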

Part of that cohesive vision is a software-defined networking (SDN) layer, called Orion. It predates Mission Apollo, "so we had already moved into a logically centralized control plane," Vahdat said.

"The delta going from logically centralized routing on a spine-based topology to one that manages this direct connect topology with some amount of traffic engineering - I'm not saying it was easy, it took a long time and a lot of engineers, but it wasn't as giant a leap, as it would have been if we didn't have the SDN traffic engineering before."

The company "essentially extended Orion and its routing control plane to manage these direct connect topologies and perform traffic engineering and reconfiguration of the mirrors in the end, but logical topology in real time based on traffic signals.

"And so this was a substantial undertaking, but it was an imaginable one, rather than an unimaginable one."

Spotting patterns

One of the challenges of Apollo is reconfiguration time. While Clos networks connect all ports to each other through EPS systems, OCS is not as flexible. If you want to change your direct connect architecture to connect two different points, the mirrors take a few seconds to reconfigure, which is significantly slower than if you had stayed with EPS.

The trick to overcoming this, Google believes, is to reconfigure less often. The company deployed its data center infrastructure along with the OCS, building it with the system in mind.

"If you aggregate around enough data, you can leverage long-lived communication patterns," Vahdat said. "I'll use the Google terminology 'Superblock', which is an aggregation of 1-2000 servers. There is a stable amount of data that goes to another Superblock.

"If I have 20, 30, 40 Superblocks in a data center - it could be more - the amount of data that goes from Superblock X to Superblock Y relative to the others is not perfectly fixed, but there is some stability there.

"And so we can leave things in the optical domain, and switch that data to the destination Superblock, leaving it all optical. If there are shifts in the communication patterns, certainly radical ones, we can then reconfigure the topology."
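One way to picture this is as a small allocation problem: give each pair of Superblocks a number of optical circuits in proportion to the traffic between them, and only touch the mirrors when the traffic mix shifts substantially. The sketch below is a simplified illustration under that assumption. It is not Orion's algorithm, and the function names, port budget, and threshold are all hypothetical.

```python
from itertools import combinations


def allocate_circuits(demand, ports_per_block):
    """Split each block's optical ports across peers in proportion to demand.

    demand maps frozenset({a, b}) -> traffic between Superblocks a and b.
    Each pair gets the smaller of the two endpoints' proportional shares,
    rounded down, so no block exceeds its port budget.
    """
    blocks = sorted({b for pair in demand for b in pair})
    total = {b: sum(d for pair, d in demand.items() if b in pair)
             for b in blocks}
    circuits = {}
    for a, b in combinations(blocks, 2):
        d = demand.get(frozenset((a, b)), 0)
        share_a = ports_per_block * d / total[a] if total[a] else 0
        share_b = ports_per_block * d / total[b] if total[b] else 0
        circuits[(a, b)] = int(min(share_a, share_b))
    return circuits


def should_reconfigure(old, new, threshold=0.25):
    """True if any pair's share of total traffic moved by more than threshold."""
    old_total = sum(old.values()) or 1
    new_total = sum(new.values()) or 1
    return any(
        abs(new.get(p, 0) / new_total - old.get(p, 0) / old_total) > threshold
        for p in set(old) | set(new)
    )


# Hypothetical traffic between three Superblocks, in arbitrary units.
steady = {frozenset("XY"): 60, frozenset("XZ"): 20, frozenset("YZ"): 20}
drift = {frozenset("XY"): 62, frozenset("XZ"): 19, frozenset("YZ"): 19}
radical = {frozenset("XY"): 10, frozenset("XZ"): 70, frozenset("YZ"): 20}

plan = allocate_circuits(steady, ports_per_block=16)
print(plan)  # {('X', 'Y'): 12, ('X', 'Z'): 4, ('Y', 'Z'): 4}
print(should_reconfigure(steady, drift), should_reconfigure(steady, radical))
```

Small drifts leave the topology alone, so the seconds-long mirror reconfiguration is paid only when the pattern changes radically.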

That also creates opportunities for reconfiguring networks within a data center. “If we need more electrical packet switches, we can essentially dynamically recruit a Superblock to act as a spine,” Vahdat said.

“Imagine that we have a Superblock with no servers attached, you can now recruit that Superblock to essentially act as a dedicated spine,” he said, with the system taking over a block that either doesn’t have servers yet, or isn’t in use.

“It doesn't need to sink any data, it can transit data onward. A Superblock that's not a source of traffic can essentially become a mini-spine. If you love graph theory, and you love routing, it's just a really cool result. And I happen to love graph theory.”
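The graph-theory idea can be shown with a toy topology: when two Superblocks have no direct optical circuit, traffic can hop through a third block that hosts no servers and therefore only forwards. The sketch below uses a plain breadth-first search over a hypothetical four-block graph. The block names and links are invented for illustration and say nothing about how Google actually routes.

```python
from collections import deque


def shortest_path(links, src, dst):
    """Breadth-first search over an undirected adjacency mapping."""
    queue = deque([[src]])
    seen = {src}
    while queue:
        path = queue.popleft()
        node = path[-1]
        if node == dst:
            return path
        for nxt in sorted(links.get(node, ())):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(path + [nxt])
    return None  # no route between src and dst


# Hypothetical direct-connect topology. A, B and C host servers;
# T has none, so it neither sources nor sinks traffic and only transits it.
links = {
    "A": {"B", "T"},
    "B": {"A", "T"},
    "C": {"T"},
    "T": {"A", "B", "C"},
}

print(shortest_path(links, "A", "B"))  # ['A', 'B']
print(shortest_path(links, "A", "C"))  # ['A', 'T', 'C']
```

Here A and C have no direct circuit, so their traffic crosses T, which plays the role of the "mini-spine" in the quote.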

Always online

Another thing that Vahdat, and Google as a whole, loves is what that means for operation time.

“Optical circuit switches now can become part of the building infrastructure,” he said. “Photons don't care about how the data is encoded, so they can move from 10 gigabits per second to 40, to 200, to 400 to 800 and beyond, without necessarily needing to be upgraded.”

Different generations of transceiver can operate in the same network, while Google upgrades at its own pace, “rather than the external state of the art, which basically said that once you move from one generation of speeds to another, you have to take down your whole data center and start over,” Vahdat said.

“The most painful part from our customers' perspective is you're out for six months, and they have to migrate their service out for an extended period of time,” he said.

“At our scale, this would mean that we were pushing people in and out always, because we're having to upgrade something somewhere at all times, and our services are deployed across the planet, with multiple instances, that means that again, our services would be subject to these moves all the time.”

Equally, it has reduced capital costs, as the same OCS can be used across each generation, whereas EPS systems have to be replaced along with transceivers. The company believes that costs have dropped by as much as 70 percent. “The power savings were also substantial,” Vahdat said.

Keeping that communication in light form is set to save Google billions, reduce its power use, and reduce latency.

What’s next

“We're doing it at the Superblock level,” Vahdat said. “Can we figure out how we will do more frequent optical reconfiguration so that we could push it down even further to the top-of-rack level, because that would also have some substantial benefits? That's a hard problem that we haven't fully cracked.”

The company is now looking to develop OCS systems with higher port counts, lower insertion loss, and faster reconfiguration times. "I think the opportunities for efficiency and reliability go up from there," Vahdat said.

The impact can be vast, he noted. “The bisection bandwidth of modern data centers today is comparable to the Internet as a whole,” he said.

“So in other words, if you take a data center - I'm not just talking about ours, this would be the same at your favorite [hyperscale] data center - and you cut it in half and measure the amount of bandwidth going across the two halves, it’s as much bandwidth as you would see if you cut the Internet in half. So it’s just a tremendous amount of communication.”
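Bisection bandwidth, as Vahdat defines it, is straightforward to compute for an idealized network. The sketch below assumes a uniform full mesh of Superblocks with the same number of circuits between every pair. The block count, circuits per pair, and link speed are hypothetical round numbers, not figures from Google.

```python
def bisection_bandwidth_gbps(num_blocks: int, circuits_per_pair: int,
                             gbps_per_circuit: float) -> float:
    """Bandwidth crossing a cut that splits a uniform full mesh in half.

    Every block on one side has circuits to every block on the other,
    so the number of crossing pairs is half * (num_blocks - half).
    """
    half = num_blocks // 2
    crossing_pairs = half * (num_blocks - half)
    return crossing_pairs * circuits_per_pair * gbps_per_circuit


# Hypothetical example: 32 Superblocks, 8 circuits per pair, 400Gbps each.
total_gbps = bisection_bandwidth_gbps(32, 8, 400)
print(total_gbps / 1000, "Tbps")  # 819.2 Tbps
```

Even with these made-up inputs the result lands in the hundreds of terabits per second, which gives a feel for the scale being described.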