It’s the largest public-private computing partnership ever created, a grand collaboration against the challenge of our times. It hopes to minimize the impact of this crisis, help understand how Covid-19 spreads, and work out how to stop it.

We’ve never had anything quite like it, and yet we still have so far to go.

The Covid-19 High-Performance Computing Consortium began with a phone call. In the early days of the virus, IBM Research director Dario Gil used his White House connections to call the Office of Science and Technology Policy and ask how his company could help.

Soon, the Department of Energy, home to most of the world's most powerful supercomputers, was brought in, along with NASA, all looking to work together.

“The principles were outlined pretty quickly,” Dave Turek, IBM’s exascale head at the time, told DCD. “We need to amalgamate our resources. The projects have to have scientific merit, there has to be urgency behind it, it all has to be free, and it has to be really, really fast.”

The group created a website and sent out a press release. "Once we made known we're doing this, the phone lit up," Turek said.

Soon, all the major cloud providers and supercomputing manufacturers were on board, along with chip designers, and more than a dozen research institutes.

Immediately, researchers could gain access to 30 supercomputers with over 400 petaflops of performance, all for free. It has since expanded to 600 petaflops, and is expected to continue to grow - even adding exascale systems in 2021, if need be.

This feature appeared in the August issue of the DCD Magazine.

Setting up the consortium

The next challenge was speed. There's little point in bringing all this power together if people can't access it, and with the virus spreading further every day, any wasted time would be a tragedy.

The group had to quickly form a bureaucratic apparatus that could organize and track all that was happening, without bogging it down with, well, too much bureaucracy.

“There’s three portions to it,” Under Secretary of Energy for Science Paul Dabbar explained. “There’s the executive committee chaired by myself and Dario Gil, which deals with a lot of the governance structure.”

Then comes the Science Committee, which “reviews the proposals that come in, in general in about three days,” Dabbar told DCD. “A typical grant follow-up process, with submittals, peer review, and selection takes about three months. We can’t take that long.”

After that, accepted research proposals are sent to the Computing Allocation Committee, which works out which systems the proposals should be run on - be it the 200 petaflops Summit supercomputer or a cloud service provider’s data center.

“Since the beginning of February, when we had the first Covid project get on Summit, we’ve had hundreds of thousands of node hours on the world's most powerful machine dedicated to specifically this problem,” Oak Ridge Leadership Computing Facility’s director of science Bronson Messer said.

While Japan’s 415 petaflops Fugaku system this summer overtook Summit in the Top500 rankings, it isn’t actually fully operational - ‘only’ 89 petaflops are currently available, all of which are also being dedicated to the consortium.

“My role is to facilitate getting researchers up to speed on Summit and moving towards what they actually want to do as quickly as possible,” Messer told DCD.

Using such a powerful system isn’t a simple task, Oak Ridge’s molecular biophysics director Dr. Jeremy Smith explained: “It's not easy to use the full capability of a supercomputer like Summit, use GPUs and CPUs and get them all talking to each other so they're not idle for very long. So we have a supercomputing group that concentrates on optimizing code to run on the supercomputer fast.” That group works in collaboration with IBM and Nvidia on the code.

Research project principal investigators (PIs) can suggest which systems they would like to work on, based on specific requirements or on past experience with individual machines or with cloud providers.

Summit, with its incredible power, is generally reserved for certain projects, Messer said. “A good Summit project, apart from anything else, is one that can really effectively make use of hybrid node architectures, can really make use of GPUs to do the computation. And then the other part is scalability. It really needs to be a problem that has to be scaled over many, many nodes.

“Those are the kinds of problems that I'm most excited about seeing us tackle because nobody else can do things that need a large number of compute nodes all computing in an orchestrated way.”

Smith, meanwhile, has been using Summit against Covid-19 since before the consortium was formed. "By the end of January, it became apparent that we could use supercomputers to try and calculate which chemicals would be useful as drugs against the disease," he told DCD.

He teamed up with postdoctoral researcher Micholas Smith (no relation) to identify small-molecule drug compounds of value for experimental testing. "Micholas promptly fell ill with the flu," Smith said. "But you can still run your calculations when you've got the flu and are in bed with a fever, right?"

The researchers performed calculations on over 8,000 compounds to find ones that were most likely to bind to the primary spike protein of Covid-19 and stop the viral lifecycle from working, posting the research in mid-February.

The media, hungry for positive news amid the crisis, jumped all over the story - although many mischaracterized the work as for a vaccine, rather than a step towards therapeutic treatment. But the attention, and the clear progress the research showed, helped lay the groundwork for the consortium.

We're not yet at the stage where supercomputers could simply find a cure or treatment on their own. They are just part of a wider effort - with much of the current research focused on reducing the time and cost of lab work.

Put simply, if researchers in 'wet labs' working with physical components are searching for needles in an enormous haystack, Smith's work reduces the amount of hay that they have to sift through "so you have a higher concentration of needles," he said.

"You have a database of chemicals, and from that you make a new database that's enriched in hits, that's to say chemicals.”

In Smith’s team, “we have a subgroup that makes computer models of drug targets from experimental results. Another one that does these simulations on the Summit supercomputer, and a docking group which docks these chemicals to the target - we can dock one billion chemicals in a day.”

While computing power and simulation methods have improved remarkably over the past decade, “they still leave a lot to be desired,” Smith said. “On average, we are wrong nine times out of 10. If I say ‘this chemical will bind to the target,’ nine times out of 10 it won't. So experimentalists still have to test a good number of chemicals before they find one that actually does bind to the target.”

Sure, there’s a large margin of error, but it’s about bringing the number of candidates down from an astronomically larger pool. “It reduces the cost significantly,” Dr. Jerome Baudry said.

“It costs $10-20 dollars to test a molecule, so if you've got a million molecules to screen, it's gonna cost you $20 million, and take a lot of time - just trying to find the potential needle in the haystack,” he said. “Nobody does that at that scale, even big pharmas. It's very complicated to find this kind of pocket money to start a fishing expedition.”
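The arithmetic behind these figures can be sketched in a few lines of Python. This is only a back-of-the-envelope illustration, not anything the researchers described running: the function names are hypothetical, the $10-20 per-test cost and million-molecule library are Baudry's figures, and the 10 percent hit rate is an assumption taken from Smith's "nine times out of 10" remark, applied uniformly to every shortlisted compound.

```python
def brute_force_screen_cost(n_molecules: int, cost_per_test_usd: float) -> float:
    """Cost of physically testing every molecule in a library (hypothetical helper)."""
    return n_molecules * cost_per_test_usd


def lab_tests_per_confirmed_binder(hit_rate: float) -> float:
    """Average number of lab tests per confirmed binder.

    hit_rate is the assumed fraction of computational predictions
    that actually bind when tested in the wet lab.
    """
    if not 0 < hit_rate <= 1:
        raise ValueError("hit_rate must be in (0, 1]")
    return 1 / hit_rate


def lab_cost_per_confirmed_binder(cost_per_test_usd: float, hit_rate: float) -> float:
    """Average wet-lab spend per confirmed binder on a computed shortlist."""
    return cost_per_test_usd * lab_tests_per_confirmed_binder(hit_rate)


if __name__ == "__main__":
    library_size = 1_000_000        # "a million molecules to screen"
    low_cost, high_cost = 10, 20    # "$10-20" per tested molecule
    assumed_hit_rate = 0.1          # assumption: right one time in 10

    print("Brute-force screen, low estimate (USD):",
          brute_force_screen_cost(library_size, low_cost))
    print("Brute-force screen, high estimate (USD):",
          brute_force_screen_cost(library_size, high_cost))
    print("Lab tests per confirmed binder on a shortlist:",
          lab_tests_per_confirmed_binder(assumed_hit_rate))
    print("Lab cost per confirmed binder, high estimate (USD):",
          lab_cost_per_confirmed_binder(high_cost, assumed_hit_rate))
```

The first two figures reproduce the $10-20 million range implied by the quote. The last two show why a shortlist that is wrong nine times out of 10 can still be worth having: under these assumptions, each confirmed binder costs on the order of ten lab tests rather than a sweep of the whole library.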

Instead, the fishing can happen at a faster, albeit less accurate, rate on a supercomputer. In normal times, such an effort is cheaper than in a lab, but right now “it’s entirely free.” For Baudry, this has proved astounding: “When, like me, you are given such a computer and the amazing expertise around it and no one is asking you to send a check, well, I don't want to sound too dramatic, but the value is in months saved.”

At the University of Alabama in Huntsville, Baudry is working on his own search - as well as collaborating with Smith’s team.

“We're trying to model the physical processes that actually happen in the lab, in the test tube, during drug discovery,” Baudry said.

“Discovery starts with trying to find small molecules that will dock on the surface of a protein. A lot of diseases that we are able to treat pharmaceutically are [caused by] a protein that is either not working at all, or is working too well, and it puts a cell into hyperdrive, which could be the cause of cancer.”

Usually, treatment involves using “small molecules that will dock on the surface of the protein and restore its function if it's not working anymore, or block it from functioning too quickly if it's working too fast,” Baudry said.

Essentially, researchers have to find a key that will go into the lock of the protein that “not only opens the door, but opens the door at the right rate in the right way, instead of not opening the door at all, or opening the door all the time.”

The analogy, of course, has its limits: “It's more complicated than that because you have millions of locks, and millions of keys, and the lock itself changes its shape all the time,” Baudry said. “The proteins move at the picosecond - so thousandths of billionths of a second later in the life of a protein, and the shape of the lock will be different already.”

All this needs to be simulated, with countless different molecules matching against countless iterations of the protein. “So it's a lot of calculations indeed - there's no way on Earth we could do it without a supercomputer at a good level.”

The natural approach

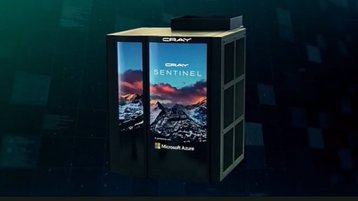

Using HPE’s Sentinel supercomputer, available through Microsoft Azure, Baudry's team has published a list of 125 naturally occurring products that interact with coronavirus proteins and can be considered for drug development against Covid-19. Access to the system, and help on optimizing its use, was provided for free by HPE and subsidiary Cray.

“Natural products are molecules made by living things - plants, fungi, bacteria; sometimes we study animals, but it's rare.”

Baudry is looking at this area, in collaboration with the National Center for Natural Products, as they are “molecules that already have survived the test of billions of years of evolution.”

Coming up with an entirely new drug is always a bit of a gamble because “it may work wonderfully well in the test tube, but when you put it in the body, sometimes you realize that you mess with other parts of the cell,” he said. “That’s the reason why most drugs fail clinical trials actually.”

Natural products have their own drawbacks, and can be poisonous in their own way of course, but the molecules “are working somewhere in some organism without killing the bug or the plant - so we’ve already crossed a lot of boxes for potential use.”

Baudry’s team is the “only group using natural products for Covid-19 on this scale, as far as I know,” he said. “Because this machine is so powerful, we can afford to screen a lot of them.”

All around the world, researchers are able to screen molecules, predict Covid’s spread, and simulate social distancing economic models with more resources than ever.

The cloud approach

“I remember in the first few weeks [of the virus], we did not really think about what was happening to price-performance and all of that - we kept getting requests, and we kept offering GPUs and CPUs,” Google Cloud’s networking product management head Lakshmi Sharma said.

The consortium member has given huge amounts of compute to researchers, including more than 80,000 compute nodes to a Harvard Medical School research effort. “We did not think about where the request was coming from, as long as it helped with the discovery of the drug, as long as it helped contact tracing, or with any of that.”

She added: “We just went into this mode of serving first and business later.”

It’s an attitude shared by everyone in the consortium - and off-the-record conversations with researchers hammered home that such comments were not just exercises in PR, but that rivals were truly working together for the common good.

“Government agencies working together, that's pretty standard, right?” Under Secretary Dabbar said. “Given how much we fund academia, us working with the MITs and the University of Illinois of the world is kind of everyday stuff, too.”

But getting the private sector on board in a way that doesn’t involve contractual agreements and financial incentives “has not been the normal interaction,” he said.

“Google, AWS, HPE, IBM, Nvidia, Intel - they're all now working together, contributing for free to help solve this. It's really positive as a member of humanity that people who are normally competitors now contribute for free and work together. I think it's amazing.”

Such solidarity goes beyond the corporate level. While the consortium was started as a US affair, it has since officially brought in South Korean and Japanese state research institutes, has a partnership with Europe’s PRACE, and is teaming up with academic institutions in India, Nepal, and elsewhere.

“Some of this was proactive diplomacy,” Dabbar said. “We used the G7 just to process a lot of that.”

Global cooperation, with a caveat

But there remains a major supercomputing power not involved in the consortium: China. Here, it turns out that there are limits to the camaraderie of the collaboration.

“I think we are very cautious about research with Communist Party-run China,” Dabbar said. “We certainly have security issues,” he added, referencing reports that the country was behind hacks on Covid-19 research labs.

“But I think there's a broader point, much broader than the narrow topic of Covid and the consortium - the US has a culture of open science, and that kind of careful balance between collaboration and competition, that came from Europe in the mid to late 1800s,” he said.

“It turns out that a certain government is running a certain way looking at science and technology very differently than the model we follow, including non-transparency and illicit taking of technology. It's hard [for us] to work with an entity like that.”

Chinese officials have denied that their nation is behind such attacks. Earlier this year, the country introduced regulations on Covid-19 research, requiring researchers to obtain government approval before they could publish results. Some claim the move was to improve the quality of reports; others say it's a bid to control information on the start of the pandemic.

China’s national supercomputers are being used for the country's own Covid research efforts, while some Chinese universities are using the Russian 'Good Hope Net,' which provides access to 1.3 petaflops of compute.

But as much as this story is about international cooperation and competition, about football-field-sized supercomputers, and billion-dollar corporations - it is also about people.

The men and women working in the labs and on supercomputers face the same daily toll felt by us all. They see the news, they have relatives with the virus; some may succumb themselves.

Many of the researchers we spoke to, on and off the record, were clearly tired. None were paid to take part in the consortium (where members meet three days a week), and many had to continue their other lab and national security work, trying to balance the sudden crisis with existing obligations.

All those DCD spoke to were keen to note that, while the collaboration and access to resources were professionally astounding, it was nothing when compared to the tragic personal impact of Covid-19 being felt around the world.

But they hope that their work could build the framework to make sure this never happens again.

“There's been an ongoing crisis in drug development for years,” Jim Brase, the deputy associate director for computation at Lawrence Livermore National Laboratory, said. “We're not developing new medicines.”

Brase, who heads his lab’s vaccine and antibody design for Covid, is also the lead for an older consortium, the Accelerating Therapeutics for Opportunities in Medicine group. ATOM was originally set up to focus on cancer, and while that remains a significant aspect of its work, the partnership has expanded to “building a general platform for the computational design of molecules.”

In pharmaceutical research, “there are a lot of neglected areas, whether it's rare cancers, or infectious disease in the third world,” he said. “And if we build this sort of platform for open data, open research, and [give academia] drug discovery tools to do a lot more than they can do today, tools that have been traditionally sort of buried inside big pharma companies… they could work on molecular design projects for medicines in the public good.”

Right now, much of the Covid drug research work is focused on short term gains, for an obvious reason - we’re dealing with a disaster, and are trying to find a way out as soon as possible. “ATOM will become the repository for some of the rapid gains we've made, because of the focus we've had on this for the last few months,” Brase said. “I think that will greatly boost ATOM, which is longer-term.”

The aim is to turn ATOM into a “sustained effort where we're working on creating molecule sets for broad classes of coronaviruses,” Brase said. “Where we understand their efficacy, how they engage the various viral targets, what their safety properties are, what their pharmacokinetic properties are, and so on. Then we would be primed to be able to put those rapidly into trials and monitor those.”

To fulfill this vision requires computation-led design only possible on powerful supercomputers. “That's what we're trying to do with this platform,” he said. “We have demonstrated that we can do molecular design and validate results of this - on timescales of weeks, not years. We are confident that this will work at some level.”

Big pharma is pushing into computationally-led design, too. “But they have a really hard time sustaining efforts in the infectious disease target classes, the business model doesn't work very well.”

The longer plan

Instead, ATOM - or a project like it - needs to be run in the public interest, as a public-private partnership “that actually remains focused on this and is working on broad antiviral classes of antibacterial agents, new antibiotics, and so on,” Brase believes.

“I think in two or three years, we can be in a much, much better shape at being able to handle a rapid response to something like this,” he said. “It's not going to be for this crisis, unfortunately, although we hope this will point out the urgent necessity of continuing work on this.”

IBM’s Turek also envisions “the beginning of this kind of focused digital investigation of theoretical biological threats: If I can operate on the virus digitally, and understand how it works and how to defeat it, then I don't have to worry about it escaping the laboratory - it makes perfect sense if you think about it.”

But in a year where little has made sense, where governments and individuals have often failed to do what seems logical, it’s hard to know what will happen next.

The question remains whether, when we finally beat Covid-19, there will be a sustained undertaking to prevent a similar event happening again - or if old rivalries, financial concerns, and other distractions will cause efforts to crumble.

Turek remains hopeful: “If somebody came up with a vaccine for Covid-19 tomorrow, I don't think people would sit back and say, ‘well, that's done, let's go back to whatever we were doing before’... Right?”