For the past two decades, there has been plenty of cross-pollination between the worlds of open source, higher education and science. So it will come as no surprise that CERN – one of Europe’s most important scientific institutions – has been using OpenStack as its cloud platform of choice.

With nearly 300,000 cores in operation, CERN’s digital infrastructure represents one of the most ambitious open cloud deployments in the world. It relies on application containers orchestrated by Kubernetes and employs a hybrid architecture that can reach into public clouds for additional capacity. Its current storage requirements are estimated at 70 petabytes per year, and the storage platform is based on another open source project, Ceph.

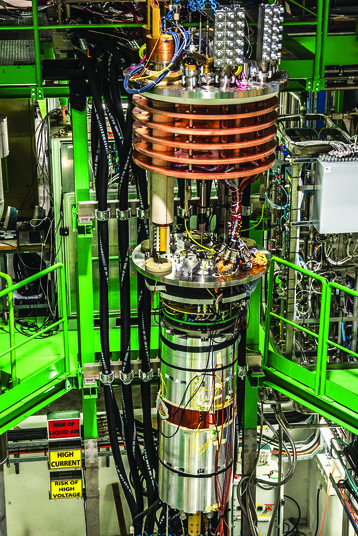

The OpenStack cloud at CERN forms part of the Worldwide LHC Computing Grid, a distributed scientific computing network that comprises 170 data centers across 42 countries and can harness the power of up to 800,000 cores to tackle the computing challenges of the Large Hadron Collider, helping to answer some of the most fundamental questions in physics.

Forty-two

Today, CERN itself operates just two data centers: a facility on its campus in Geneva, Switzerland, and another one in Budapest, Hungary, linked by a network with 23ms of latency. According to a presentation by Arne Wiebalck, computing engineer at CERN, these facilities contain more than 40 different types of hardware.

“The data center in Geneva was built in the 1970s,” Tim Bell, compute infrastructure manager at CERN and a board member of the OpenStack Foundation, told DCD. “It has a raised floor you can walk under. It used to have a mainframe and a Cray – we’ve done a lot of work to retrofit and improve the cooling, and that gets it to a 3.5MW facility. We would like to upgrade that further, but we are actually limited on the site for the amount of electricity we can get – since the accelerator needs 200MW.

“With that, we decided to expand out by taking a second data center that’s in Budapest, the Wigner data center. And that allows us to bring on an additional 2.7MW in that environment.

“The servers themselves are industry-standard, classic Intel and AMD-based systems. We just rack them up. In the Geneva data center we can’t put the full density on, simply because we can’t do the cooling necessary, so we are limited to something between six and ten kilowatts per rack.”

When CERN requires a new batch of servers, it puts out a call for tenders with exact specifications of the hardware it needs; however, bidding on these contracts is limited to companies located in CERN’s member states, which help fund the organization. Today there are 22 member states, including most EU states and Israel.

“We choose the cheapest compliant [hardware],” Bell said.

CERN initially selected OpenStack for its low cost. “In 2011, we could see how much additional computer processing the LHC was going to need, and equally, we could see that we are in a situation where both staff [numbers] and budget are going to be flat. This meant that open source was an attractive solution. Also, the culture of the organization, since we released the World Wide Web into the public domain in the nineties, has been very much on the basis of giving back to mankind.

“It was very natural to be looking at an open source cloud solution – but at the same time, we used online configuration management systems based on Puppet, monitoring solutions based on Grafana. We built a new environment benefiting from the work of the Web 1.0 and Web 2.0 companies, rather than doing everything ourselves, which was how we did it in the previous decade.

“It is also interesting that the implementation of high-performance processes [in OpenStack], CPU pinning, huge page sizes – all of that actually came from the features needed in a telecoms environment,” he added. “We have situations when we see improvements that others need, that science can benefit from.”

While it investigates the origins of the universe, the research organization is not insulated from the concerns of the wider IT industry: at the beginning of the year, for example, CERN had to patch and reboot its entire cloud infrastructure – 9,000 hosts and 35,000 virtual machines – to protect against the infamous Spectre and Meltdown vulnerabilities.

Like any other IT organization, the team at CERN has to struggle with the mysteries of dying instances, missing database entries and logs that grow out of control. “Everyone has mixed up dev and prod once,” Wiebalck joked during his presentation. “Some have even mixed up dev and prod twice.”

Everything learned in the field is fed back to the open source community: to date, the team at CERN has made a total of 745 commits to the code of various OpenStack projects, discovered 339 bugs and resolved another 155.

“Everything that CERN does that’s of any interest to anyone, we contribute back upstream,” Bell said. “It’s certainly nice, as a government-funded organization, that we are able to freely share what we do. We can say ‘we are trying this out’ and people give us advice – and equally, they can look at what we’re doing and hope that if it works at CERN, chances are it is going to work in their environment.”

To find out more about the upcoming CERN infrastructure upgrade, read our feature: Probing the universe

This article appeared in the August/September issue of DCD magazine.