In January, Victor Peng was appointed CEO of Xilinx, the US company best known for creating field-programmable gate arrays (FPGAs), integrated circuits that can be reconfigured using software. Now, he’s ready to talk about his plans for the decades-old semiconductor firm.

“This is my first press event,” Peng told DCD as he laid out the company’s new strategy: Data Center First.

A growth market

Xilinx has achieved success in its core markets, like wireless infrastructure, defense, audio, video and broadcast equipment. But Peng believes that “the data center will be our largest area for growth, and we will make it our highest priority.”

The company’s push into the data center comes on three fronts: compute acceleration, storage, and networking. “We can play in all three,” Peng said.

“One of the challenges is that we’re still primarily known as the FPGA company, and it’s the classic issue of ‘what got you here won’t necessarily get you where you want to go.’ The fact that we invented the FPGA and lead in this field is a good thing, but then there are some very outdated views on what an FPGA can do.

“Some of our competitors want to perpetuate that old mentality because they want you to think that it’s too expensive, low performance and only good for prototyping. That’s not true.”

The other challenge is developer support, the lack of which can kill a product: “We need to make it significantly easier to develop for. We need to build out the ecosystem, libraries and tools and more applications. But that’s exactly what we are doing.

“We’ve increased our hiring on the software side, but we can’t do it all organically, so that’s why we do have to work with partners, the ecosystem, and make investments. We’re doing more things in open source, so collectively the R&D is significant.”

Ultra ambitions

Peng claims FPGAs are ideal for the growing number of machine learning workloads (inference, but not training), as well as video transcoding. He points to Xilinx’s deal with AWS to provide the cloud company with UltraScale Plus FPGAs for EC2 F1 instances. According to AWS, these instances can accelerate applications by up to 30x compared with servers that use CPUs alone.

Peng rejected suggestions that the instances have not been as successful as some had expected: “What tends to happen is people feel like ‘it’s been around a while, how come it hasn’t taken off in a broader way?’ But they announced in November 2016, they went to general availability in April 2017 - and at that time there were zero applications, they were just opening the doors for developers.”

Today, according to Peng, there are around 20 applications using FPGAs in production for cloud customers, and more than 50 application developers targeting this field. AWS is also expanding the availability of the instances, while Alibaba, Huawei, Baidu and Tencent all plan to launch their own Xilinx FPGA-based services.

“A lot of that is part of a goal to be a software targeted platform, and FPGA-as-a-service is a fantastic vehicle for it. It gives a platform for the application developers to use this without having to design a board.”

Another example of a successful, large-scale FPGA deployment that Peng likes to reference is Microsoft’s Project Catapult, in which the software giant inserted FPGAs into servers to create an ‘acceleration fabric’ throughout the data center. Used for Microsoft Azure, Bing and AI services, this setup accelerates both processing and networking, and is now a standard component of the company’s cloud infrastructure.

“I think that model of homogeneous, distributed, but adaptable acceleration is very powerful,” Peng said. “Because that gives you the best TCO overall, when you have a dynamic environment; the best TCO is not to have fixed appliances for everything you might run.”

There’s one problem, however - the FPGAs Microsoft uses are from Altera, the company Intel bought for $16.7 billion in 2015. “When they were an independent company, it was a duopoly, we’d be selling to the same customer, sometimes we’d win, sometimes we wouldn’t. We’ve been talking to Microsoft, and we continue to talk to Microsoft.”

While Peng expects Xilinx to continue offering classic FPGAs “for decades,” the other announcement the new CEO was ready to share was the development of a brand-new product called ACAP.

The next step

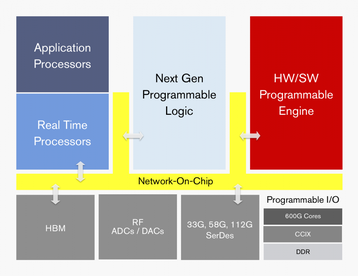

The ‘Adaptive Compute Acceleration Platform’ is made up of a new generation of FPGA fabric with distributed memory and hardware-programmable DSP blocks, a multi-core SoC, and one or more software-programmable and hardware-adaptable compute engines, connected through a network on a chip.

“This message of ‘don’t think of it as an FPGA’ has got to be something we do over and over again, because after being the FPGA company, we have to say ‘no we’re not the FPGA company.’ With ACAP, at the moment nobody even knows what that is - but they will understand over time.”

The first ACAP product family, codenamed Everest, will be built on TSMC’s 7nm process technology and is expected to tape out later this year, with shipments to customers following next year.

Creating Everest has taken $1bn, 1,500 engineers and four years of research, and the company expects it to achieve a 20x performance improvement on deep neural networks compared with the 16nm Virtex VU9P FPGA.

Peng was coy on specifics, but confirmed that Xilinx is in advanced talks with cloud companies about ACAP.

Given the renewed focus and the new product, we asked Peng how the success of this strategy will be judged. “It’s hard for me to know how it will ramp,” Peng said. “There’s no roadmap, this is a whole new area, and not just for us, but for the industry. I mean who would have predicted Nvidia’s ramp up? Even Nvidia didn’t predict Nvidia’s ramp up.”

This article appeared in the April/May issue of DCD Magazine.