The similarity between Yahoo’s data centers and traditional chicken coops is uncanny. And like the “chicken or the egg” debate, it begs the question: which came first?

Yahoo has built data centers in various locations, including Lockport NY, using a design referred to as the “chicken coop”. It closely resembles the CapeCodder chicken coop from FarmNyards in Beverly, MA. After some digging, I located Larry Kudlik, owner of FarmNyards, and the person who designed the CapeCodder.

Kudlik was not surprised when I sent him a picture of Yahoo’s Lockport data center (shown here). “The CapeCodder was designed specifically to provide free-flow ventilation,” explained Kudlik. “It’s impossible for hot air to get trapped in the coop. The warmer the roof gets, the more draft created.”

“In my coop design, there are openings in the elevated floor,” added Kudlik. “The air from below the floor is drawn up through the coop, keeping the chickens cool. The air movement also removes excess moisture.”

Yahoo’s data-center evolution

Which came first? Apparently, it’s no contest. “I’ve been designing and building CapeCodder Chicken Coops for over thirty years now,” explained Kudlik. “I’ve shipped thousands of them, some even have solar power and self-cleaning floors.”

If that’s true, has Yahoo tapped into a free-cooling concept used by farmers for generations? I contacted Yahoo, and Brett Illers, senior project manager of sustainability and energy efficiency, was kind enough to field my questions.

To begin, Illers affirmed that ventilating a data center using a full-roof cupola system is a great way to cool computing equipment. Illers then provided some historical background. The company started building its own data centers in 2007. The first design was standard fare, with a raised-floor white space and forced-air cooling.

The company’s second iteration, Yahoo Thermal Cooling (YTC), uses a different approach. The white space in a YTC data center is considered the cool zone. Hot air exiting the server racks is captured in an enclosed space and forced up through an inter-cooler. What makes the YTC concept unique is that the server fans move the air.

With the success of YTC, Yahoo decided to take the next step and attempt a ground-up redesign. “The purpose of the project code-named Yahoo Compute Coop (YCC) - solidifying the chicken-coop connection - is to research, design, build, and implement a greenfield efficient data factory,” Illers said. “And to demonstrate that the YCC concept is feasible for large facilities housing tens of thousands of heat-producing computing servers.”

The project’s work is documented in a research paper: YCC: A Next-Generation Passive Cooling Design for Data Centers.

The YCC project had the following wish list:

- Decrease energy consumption and waste output

- Implement naturally-occurring environmental effects to maximize “free-cooling”

- Simplify building methods and materials

- Shorten build-to-operate schedules and reduce costs

The design

Yahoo engineers and architects felt it important to consider the entire building as the air handler. “The building shape was specifically designed to allow heat to rise via natural convection,” explained the research paper. “And the length of the building relative to its width (120 feet by 60 feet) provided easier access to outside air by increasing the area-to-volume ratio.”

The design team then went to work creating pre-engineered building components along with skid-based electrical systems in an attempt to lower front-end expenses and operating costs. The design team tackled cooling next, well aware that project requirements and the wish list eliminated most traditional forms of cooling as a consideration.

To make use of the simplified air-flow path, Yahoo engineers designed louver systems to:

- Regulate air entering the data center

- Control exhaust air exiting the cupola

- Adjust temperature of recirculated air

Lastly, the engineering team designed fan modules consisting of five-horsepower, variable-speed fans; filter assemblies; and evaporative (water) Inter-Cooling Modules (ICM) for those hot, muggy summer days when free-cooling is not enough.

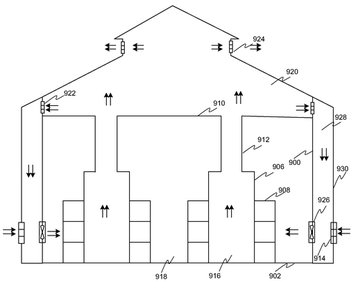

Now let’s look at how melding the building shape, adjustable louver systems, fan modules, filter assemblies, and ICMs offers Yahoo data center engineers a great deal of flexibility when it comes to cooling, as shown in the illustration from the YCC patent.

The system can operate in various modes:

1. Unconditioned outside air cooling

When the outside air temperature is between 70F and 85F (21C to 29C), outside air enters through the adjustable louvers (914) on the data-center side walls. The air is pulled through the mixing space (928) into the data hall (918) by air-handling units (926), with filtering as the only conditioning.

Fans housed in the rack-mounted computing and networking devices (908) draw cooling air from the inside space (918) through the rack-mounted equipment and eject the now-hot exhaust air to the interior space (916). Through natural convection, the exhaust air moves up into the attic (920). The exhaust air continues out of the building through the adjustable louvers (924) in the roof-length cupola.

2. Outside air tempered by evaporative cooling

When temperatures exceed 85 degrees F, outside air is drawn through the Inter-Cooling Modules located between the adjustable side-wall louvers (914) and the air-handling units (926). After cooling the servers, the exhaust air exits the building in the same manner as in the unconditioned outside-air mode.

3. Mixed outside air cooling

When the outside air temperature drops below 70 degrees F, heated exhaust air is mixed with incoming outside air to maintain an air temperature of 70 degrees F. This can be accomplished several ways, depending on how automated the cooling system is. One example would be where the adjustable louvers (914, 922, and 924) are connected to a control system that can selectively activate each set of louvers. If the outside air is too cold, the control system will close louvers (914 and 922). Heated exhaust air in the attic space (920) is redirected to the mixing space (928) and recirculated through the inside space (918).
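The three modes above amount to a simple decision on outside air temperature. The following is a minimal sketch of that logic, not Yahoo's control system: the function and class names are hypothetical, the handling of the exact 70F and 85F boundaries is an assumption, and the mixing calculation assumes ideal blending of two air streams with an exhaust temperature supplied by the caller.

```python
from enum import Enum


class CoolingMode(Enum):
    UNCONDITIONED = "unconditioned outside air"
    EVAPORATIVE = "outside air tempered by evaporative cooling"
    MIXED = "outside air mixed with recirculated exhaust"


# Thresholds quoted in the article (degrees Fahrenheit).
LOW_F = 70.0
HIGH_F = 85.0


def select_mode(outside_f: float) -> CoolingMode:
    """Pick a cooling mode from the outside air temperature.

    Assumption: exactly 70F and exactly 85F count as the
    unconditioned range; the article does not say.
    """
    if outside_f > HIGH_F:
        return CoolingMode.EVAPORATIVE
    if outside_f < LOW_F:
        return CoolingMode.MIXED
    return CoolingMode.UNCONDITIONED


def exhaust_fraction(outside_f: float, exhaust_f: float,
                     target_f: float = LOW_F) -> float:
    """Share of supply air that must be recirculated exhaust.

    Assumes ideal mixing: target = f * exhaust + (1 - f) * outside.
    Returns 0.0 when the outside air is already at or above target.
    """
    if outside_f >= target_f:
        return 0.0
    if exhaust_f <= target_f:
        raise ValueError("exhaust air must be warmer than the target")
    return (target_f - outside_f) / (exhaust_f - outside_f)


if __name__ == "__main__":
    for temp in (40.0, 75.0, 90.0):
        print(temp, select_mode(temp).value)
    # Illustrative numbers only: 40F outside, 100F exhaust.
    print(exhaust_fraction(40.0, 100.0))
```

In a real building the fraction would translate into louver positions for 914, 922, and 924, which this sketch does not attempt to model.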

The verdict is in

Mr. Kudlik was right. The free-cooling design is efficient. After a few years of operation, Yahoo calculated the following statistics:

- Yahoo engineers determined that YCC yielded a roughly two percent annualized “cost to cool” with evaporative cooling, meaning that free-cooling alone would have an even lower percentage. (Cost to cool is the energy (kW) expended to remove the heat generated by the data center load, expressed as a percentage of the data center load itself.)

- Approximately 36 million gallons of water were saved per year with YCC compared to conventional water-cooled chiller plant designs having comparable IT loads.

- The YCC design realized an almost 40 percent cut in the amount of electricity used relative to industry-typical legacy data centers.

- Illers mentioned the YTC data center had a Power Usage Effectiveness (PUE) of 1.3, whereas the Lockport YCC data center’s PUE is a very respectable 1.08.
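To put the two PUE figures in perspective, recall that PUE is total facility energy divided by IT equipment energy, so a PUE of 1.08 means 0.08 units of overhead for every unit delivered to the IT load. The sketch below works through that arithmetic; the function names are hypothetical, and it assumes both facilities carry the same IT load. The 40 percent figure above is measured against industry-typical legacy data centers, a different baseline, so it is not expected to match this YTC-to-YCC comparison.

```python
def overhead_fraction(pue: float) -> float:
    """Non-IT energy per unit of IT energy (PUE = total / IT)."""
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return pue - 1.0


def total_energy_saving(old_pue: float, new_pue: float) -> float:
    """Fractional cut in total facility energy for the same IT load."""
    return (old_pue - new_pue) / old_pue


if __name__ == "__main__":
    ytc, ycc = 1.3, 1.08  # PUE values quoted by Illers
    print(f"YTC overhead: {overhead_fraction(ytc):.0%} of IT load")
    print(f"YCC overhead: {overhead_fraction(ycc):.0%} of IT load")
    print(f"Total energy saving: {total_energy_saving(ytc, ycc):.1%}")
```

Under these assumptions, moving from YTC to YCC trims total facility energy by about 17 percent for an identical IT load, with overhead falling from 30 percent to 8 percent of the IT load.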

In 2010, the Department of Energy recognized YCC as a best-in-class, energy-efficient design by awarding Yahoo a $9.9 million sustainability grant from the DoE’s Green IT program. On Jan 13, 2011, the U.S. Patent Office awarded Yahoo patent number 20110009047 A1, “Integrated Building Based Air Handler for Server Farm Cooling System.”

Illers said Yahoo engineers are not satisfied yet and are still tweaking YCC’s design. I wonder if the engineers are working on self-cleaning floors?