LinkedIn has developed a network topology that will enable the company to deploy hundreds of thousands of servers in a single data center, a considerable step up from the tens of thousands of machines per facility it manages today.

The approach involves migrating from the current 10/40G connectivity to a faster and more flexible 10/25/50/100G architecture, using 100G Parallel Single Mode 4-lane (PSM4) interconnect technology deployed in a split 50G configuration, with each 100G module serving two 50G ports.
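To make the split 50G idea concrete, here is a minimal Python sketch of how one 4-lane PSM4 module could be broken out into logical ports. It assumes 25G per lane (four lanes making up 100G); the `breakout` helper, the `LogicalPort` type and the `port1/1` naming are hypothetical illustrations, not LinkedIn's configuration or any switch vendor's API.

```python
from __future__ import annotations

from dataclasses import dataclass

# Assumption: a 100G PSM4 module carries four parallel 25G lanes.
LANE_GBPS = 25
LANES_PER_MODULE = 4


@dataclass(frozen=True)
class LogicalPort:
    name: str               # hypothetical interface name, for illustration only
    lanes: tuple[int, ...]  # indices of the optical lanes this port uses

    @property
    def speed_gbps(self) -> int:
        return LANE_GBPS * len(self.lanes)


def breakout(physical_port: int, lanes_per_port: int) -> list[LogicalPort]:
    """Split one 4-lane module into equal logical ports (hypothetical helper)."""
    if LANES_PER_MODULE % lanes_per_port != 0:
        raise ValueError("lanes must divide evenly between ports")
    ports = []
    for i, first_lane in enumerate(range(0, LANES_PER_MODULE, lanes_per_port)):
        lanes = tuple(range(first_lane, first_lane + lanes_per_port))
        ports.append(LogicalPort(f"port{physical_port}/{i + 1}", lanes))
    return ports


# Split 50G configuration: two lanes per logical port.
for p in breakout(1, lanes_per_port=2):
    print(p.name, p.lanes, f"{p.speed_gbps}G")
```

Passing `lanes_per_port=1` or `lanes_per_port=4` instead yields four 25G ports or a single 100G port, which is one way to picture the flexibility of a 25/50/100G architecture.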

“That enables us all the benefit of the latest switching technology at the price for optics that is half the price of 40G optical interconnect,” explained Yuval Bachar, principal engineer for Global Infrastructure Architecture and Strategy at LinkedIn.

The technology will be deployed at scale at the company’s LOR1 data center in Hillsboro, Oregon, which is currently under construction and is due to open in late 2017.

Now that’s what I call hyperscale

LinkedIn expects that its data centers are only going to grow in size. To support this growth, the company launched Project Altair and tasked its engineers with developing a new type of data center fabric.

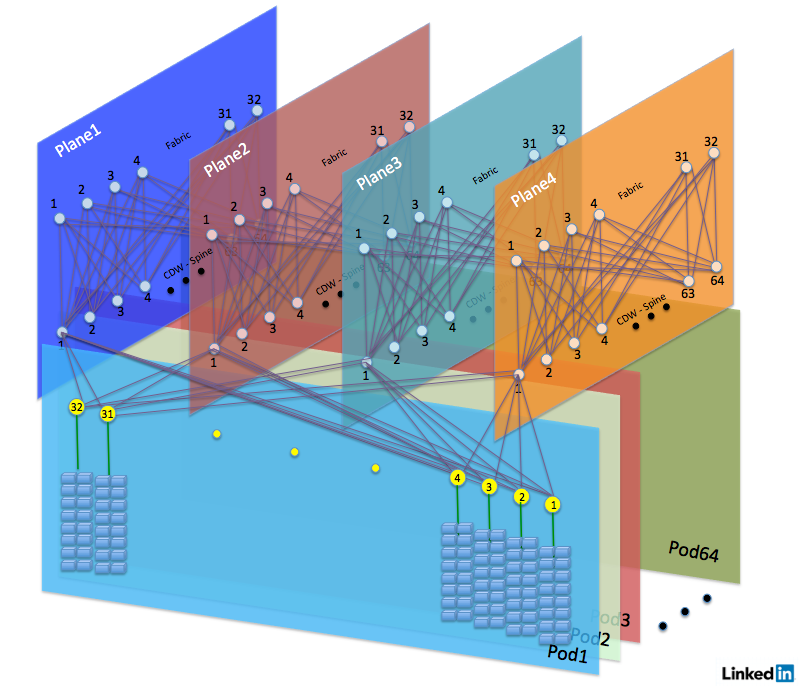

Last week, a series of blog posts revealed just what this new fabric entails. It turns out that the LOR1 campus in Oregon consists of four data centers, all based on a pod configuration, with a total of 64 pods, each supporting thousands of servers.

According to Bachar, the network relies on a single type of switch across the entire facility. The switch, a product of Project Falco, comes in a 1U form factor and features 32 ports, each supporting the full 100Gbps of throughput.
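As a back-of-the-envelope check, the figures above imply 3.2Tbps of capacity per switch; in the split 50G configuration the same hardware would present 64 logical ports instead of 32. The sketch below only does that arithmetic; `switch_profile` is a hypothetical helper, and it assumes every port is split the same way.

```python
# Figures from the article: one 1U switch with 32 ports at 100G each.
SWITCH_PORTS = 32
PORT_GBPS = 100


def switch_profile(split: int = 1) -> dict:
    """Logical port count, per-port speed and total capacity when every
    100G port is broken out into `split` equal logical ports (hypothetical)."""
    if PORT_GBPS % split != 0:
        raise ValueError("split must divide the port speed evenly")
    return {
        "logical_ports": SWITCH_PORTS * split,
        "port_gbps": PORT_GBPS // split,
        # Splitting ports changes how capacity is divided, not its total.
        "total_tbps": SWITCH_PORTS * PORT_GBPS / 1000,
    }


print(switch_profile())         # every port running at 100G
print(switch_profile(split=2))  # split 50G configuration
```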

Back in February, LinkedIn announced that it had developed a switch codenamed ‘Pigeon’, whose technical specifications fit the bill.

“Looking at the per-port cost, the price of a 40G optical module (Single mode) like LR4-Light is comparable to the price of PSM4 module. However the PSM4 module delivers two ports per module, and additional 25 percent bandwidth compared to the LR4-Light,” said Bachar.

“On a large-scale data center – and even a smaller scale one – savings can reach millions of dollars in CapEx investment for a better and faster solution.”
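Bachar's per-port argument can be worked through numerically. This sketch assumes, as his quote suggests, that a 40G LR4-Light module and a 100G PSM4 module cost the same (normalized to 1.0), and that the PSM4 module runs as two 50G ports; the article gives no actual prices, and `OpticalModule` is a hypothetical type for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OpticalModule:
    name: str
    price: float    # normalized price; assumed equal for both modules
    ports: int      # logical switch ports served by one module
    port_gbps: int  # speed of each logical port

    @property
    def price_per_port(self) -> float:
        return self.price / self.ports

    @property
    def price_per_gbps(self) -> float:
        return self.price / (self.ports * self.port_gbps)


# Assumption: "comparable" prices modeled as identical prices.
lr4_light = OpticalModule("40G LR4-Light", price=1.0, ports=1, port_gbps=40)
psm4_split = OpticalModule("100G PSM4 (2 x 50G)", price=1.0, ports=2, port_gbps=50)

port_cost_ratio = psm4_split.price_per_port / lr4_light.price_per_port
port_bw_gain = psm4_split.port_gbps / lr4_light.port_gbps - 1
gbps_cost_ratio = psm4_split.price_per_gbps / lr4_light.price_per_gbps

print(f"cost per port: {port_cost_ratio:.0%} of LR4-Light")
print(f"bandwidth per port: +{port_bw_gain:.0%}")
print(f"cost per Gbps: {gbps_cost_ratio:.0%} of LR4-Light")
```

Under those assumptions the PSM4 option costs half as much per port, in line with the "half the price" claim, while carrying 25 percent more bandwidth per port and costing about 40 percent as much per gigabit.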

The engineer added that LinkedIn needed help from the optical module suppliers to deliver a sufficient quantity of 50G hardware.

He also mentioned that the company is already investigating 200G connectivity and is looking forward to an eight-channel version of QSFP, which will enable the next technology jump: this time to 400G.
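The jump to 400G comes down to lane arithmetic: a module's aggregate bandwidth is its number of lanes times the per-lane rate. The lane rates below (25G lanes for today's 100G QSFP, 50G lanes for 200G and for an eight-channel QSFP) are assumptions not stated in the article, and `module_gbps` is a hypothetical helper.

```python
# Assumed lane rates (not stated in the article): 25G lanes in today's
# 100G QSFP optics, 50G lanes for 200G and for an eight-channel QSFP.
def module_gbps(lanes: int, lane_gbps: int) -> int:
    """Aggregate bandwidth of a pluggable module with parallel lanes."""
    return lanes * lane_gbps


generations = {
    "100G QSFP (4 x 25G)": module_gbps(4, 25),
    "200G QSFP (4 x 50G)": module_gbps(4, 50),
    "400G eight-channel QSFP (8 x 50G)": module_gbps(8, 50),
}
for name, gbps in generations.items():
    print(f"{name}: {gbps}G")
```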