Social networking giant Facebook has built a new backbone network to link its data centers, in order to keep up with the growth of internal traffic.

The new Express Backbone (EBB) carries traffic between Facebook's data centers, which should ease congestion on the links that connect them and improve service for end users.

All user traffic remains on the existing network, a.k.a. the ‘Classic Backbone’.

A different challenge

For the past decade, Facebook’s data centers in the US and Europe had been interlinked by a single wide area network (WAN) backbone, which carried both user traffic and internal server-to-server traffic.

Internal traffic is generated by operations that are not directly related to user interaction, such as moving pictures and videos into cold storage or replicating data offsite for disaster recovery.

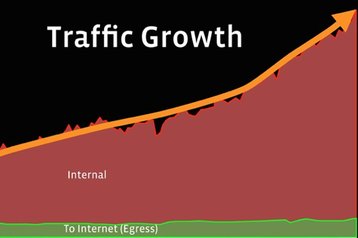

According to a blog post by network engineers Mikel Jimenez and Henry Kwok, the amount of internal data center traffic at Facebook has been growing much faster than user traffic, to the point where it began to interfere with front-end functionality.

“As new data centers were being built, we realized the need to split the cross-data center vs Internet-facing traffic into different networks and optimize them individually. In less than a year, we built the first version of our new cross-data center backbone network, called the Express Backbone (EBB), and we’ve been growing it ever since,” the engineers explained.

To build EBB, Facebook divided the network's physical topology into four parallel planes, as it did when designing its data center fabric in 2014. It also developed a proprietary modular routing platform to provide interior gateway protocol (IGP) and messaging functionality.
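The article does not say how traffic is spread across EBB's four planes. ECMP-style hashing is a common way to spread flows over parallel paths, so the minimal sketch below assumes that approach; `plane_for_flow` and `distribute` are hypothetical helpers and the data center names are made up. It also illustrates why planes act as separate fault domains: if one plane fails, flows are simply placed on the remaining three.

```python
from __future__ import annotations

import hashlib
from collections import Counter

NUM_PLANES = 4  # EBB's physical topology is split into four parallel planes


def plane_for_flow(src: str, dst: str, flow_id: int, healthy_planes: list[int]) -> int:
    """Hypothetical helper: map a flow to one healthy plane with a stable hash.

    Assumption: the article does not say how EBB assigns flows to planes;
    ECMP-style hashing is used here purely for illustration.
    """
    if not healthy_planes:
        raise RuntimeError("no healthy planes available")
    key = f"{src}|{dst}|{flow_id}".encode()
    # Take the first 8 bytes of a SHA-256 digest as an unsigned integer.
    digest = int.from_bytes(hashlib.sha256(key).digest()[:8], "big")
    return healthy_planes[digest % len(healthy_planes)]


def distribute(flows: list[tuple[str, str, int]], healthy_planes: list[int]) -> Counter:
    """Hypothetical helper: count how many flows land on each plane."""
    return Counter(plane_for_flow(s, d, f, healthy_planes) for s, d, f in flows)


if __name__ == "__main__":
    # Made-up data center names and flow IDs.
    flows = [("dc-a", "dc-b", i) for i in range(1000)]
    all_planes = list(range(NUM_PLANES))
    print("all planes up:", distribute(flows, all_planes))

    # A failure confined to plane 2: it carries nothing, and flows spread over 0, 1 and 3.
    degraded = [p for p in all_planes if p != 2]
    print("plane 2 down:", distribute(flows, degraded))
```

Because the modulus changes when a plane is removed, this naive scheme also reshuffles some flows that were on healthy planes; a production design might prefer consistent hashing to keep those flows in place.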

“Our very first iteration of the new network was built using a well-understood combination of an IGP and a full mesh iBGP topology to implement basic packet routing,” Jimenez and Kwok wrote. “The next step was adding a traffic-matrix estimator and central controller to perform traffic engineering functions assuming ‘static’ topology. The final iteration replaced the ‘classical’ IGP with an in-house distributed networking platform called Open/R, fully integrating the distributed piece with the central controller.”
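The engineers do not describe how their controller computes paths. As a toy illustration of a central controller doing traffic engineering on a "static" topology, the sketch below takes an already-estimated traffic matrix (made-up numbers) and greedily places the largest demands first on the fewest-hop path that still has spare capacity. It also counts the sessions a full iBGP mesh requires, n(n-1)/2, which grows quadratically with the number of routers. All function names are hypothetical; this is not Facebook's algorithm.

```python
from __future__ import annotations

from collections import deque


def ibgp_full_mesh_sessions(n_routers: int) -> int:
    """A full iBGP mesh needs one session per pair of routers: n(n-1)/2."""
    return n_routers * (n_routers - 1) // 2


def shortest_feasible_path(topo, residual, src, dst, demand):
    """Hypothetical helper: fewest-hop path (BFS) using only links with
    at least `demand` residual capacity. Returns None if no such path exists."""
    prev = {src: None}
    queue = deque([src])
    while queue:
        node = queue.popleft()
        if node == dst:
            path = []
            while node is not None:  # walk predecessors back to src
                path.append(node)
                node = prev[node]
            return path[::-1]
        for nbr in topo.get(node, {}):
            if nbr not in prev and residual[(node, nbr)] >= demand:
                prev[nbr] = node
                queue.append(nbr)
    return None


def allocate(topo, traffic_matrix):
    """Hypothetical greedy TE step over a static topology.

    topo: {node: {neighbor: capacity_gbps}} for directed links.
    traffic_matrix: {(src, dst): estimated_demand_gbps}, standing in for the
    output of a traffic-matrix estimator (the numbers here are made up).
    Largest demands are placed first; each consumes capacity on its path.
    """
    residual = {(u, v): cap for u, nbrs in topo.items() for v, cap in nbrs.items()}
    placements = {}
    for (src, dst), demand in sorted(traffic_matrix.items(), key=lambda kv: -kv[1]):
        path = shortest_feasible_path(topo, residual, src, dst, demand)
        placements[(src, dst)] = path
        if path is None:
            continue  # no single path has room; a real controller might split the demand
        for u, v in zip(path, path[1:]):
            residual[(u, v)] -= demand
    return placements


if __name__ == "__main__":
    topo = {
        "A": {"B": 100, "C": 100},
        "B": {"D": 100, "C": 100},
        "C": {"D": 100},
        "D": {},
    }
    demands = {("A", "D"): 70, ("B", "D"): 40}
    print(allocate(topo, demands))
    # {('A', 'D'): ['A', 'B', 'D'], ('B', 'D'): ['B', 'C', 'D']}
    print(ibgp_full_mesh_sessions(4))  # 6
```

In the example, the 70 Gbps demand takes A-B-D and leaves only 30 Gbps on B-D, so the controller detours the 40 Gbps B-to-D demand through C instead of congesting the direct link.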

The resulting network splits cleanly into separate fault domains and can react to traffic spikes in real time. Next, Facebook plans to extend the network controller's functionality to better manage traffic congestion.