Facebook is a company that likes to make things. I saw signs of this everywhere while walking around the Menlo Park campus on a Friday. The site has its own wood shop and an analog research laboratory where employees can learn woodworking and be creative with physical materials. They like to know how everything works. And they like to be open about the knowledge they accumulate.

About six years ago, Facebook decided to design its own data center hardware from the ground up. The Open Compute Project (OCP) was established in 2011 by Facebook, along with Rackspace and Intel, to help drive open source hardware innovation in, near and around the data center ecosystem.

Three of the world’s five largest companies by market cap are OCP members (Google/Alphabet, Microsoft and Facebook) and Amazon is also using custom server designs behind the scenes. From this list, only Apple is still using gear from traditional vendors in its data centers.

In order to make OCP technologies more accessible to potential adopters, Facebook organized an OCP Technology Day to show some validated use cases.

OCP Technology Day

The OCP Technology Day took place on Tuesday, August 30, 2016, at Facebook’s new Building 20 (the building that Mark Zuckerberg has recently been live-streaming from), across the street from the campus. There, Facebook demonstrated how OCP products can be used by other companies, including enterprises. Why is this important, and what does it tell us?

At the event, a member of the Facebook staff demonstrated how they use the open source storage software GlusterFS. Using the standard Facebook Knox JBOD (manufactured by Wiwynn), connected to a Facebook Leopard server through a standard LSI SAS RAID controller, they provide their internal users with a POSIX storage environment over NFS. With some additional configuration and tools, the data is replicated and the service is made highly available to meet strict internal SLAs.

What Facebook has done here is provide its internal users with a storage solution that is in many ways similar to any enterprise environment, based on open standard hardware and software, and completely under its own control. For Facebook, this solution resulted in significant savings compared with the cost of proprietary hardware, software (and licensing fees), vendor training and external support contracts. More importantly, this use case shows that any enterprise is now able to choose OCP and reap the many benefits that open hardware has to offer. It’s just a matter of finding the financial break-even point and making the strategic choice.

Here is how much Facebook says it saves on costs:

| Storage Solution | Relative Cost |
|---|---|
| Proprietary NFS | $$$$ to $$$ |
| 3x Replicated w/ OCP | $$ |
| Erasure Coded w/ OCP (8+4) | $ |
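
The table gives only relative costs, but the raw-capacity side of the comparison follows from the two schemes themselves. Here is a minimal Python sketch, assuming overhead simply means raw bytes stored per usable byte. The function names are illustrative and not from Facebook's presentation, and real cost also depends on hardware, power and operations.

```python
from fractions import Fraction


def replication_overhead(copies: int) -> Fraction:
    """Raw bytes stored per usable byte when keeping N full copies."""
    return Fraction(copies, 1)


def erasure_overhead(data_fragments: int, parity_fragments: int) -> Fraction:
    """Raw bytes stored per usable byte for a data+parity erasure code."""
    return Fraction(data_fragments + parity_fragments, data_fragments)


replicated = replication_overhead(3)      # 3x replication
erasure_coded = erasure_overhead(8, 4)    # 8+4 erasure coding

print(float(replicated))     # 3.0
print(float(erasure_coded))  # 1.5
```

Under these assumptions, 8+4 erasure coding needs half the raw capacity of triple replication for the same usable space, which is consistent with its lower position in the table.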

During the afternoon, both Red Hat and Canonical demonstrated how they install their operating systems on OCP hardware.

The Facebook Leopard bare-metal server doesn’t have a VGA or HDMI connector, so there’s no option to connect a monitor to install the OS. Instead it attempts to boot using PXE from either the installed Mezzanine card or the on-board LOM first and from SATA later. It keeps going through that loop until a bootable device is found.
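
The boot behavior described above can be sketched as a simple retry loop. This is an illustration in Python, not the actual Leopard firmware logic: the device labels, the relative order of Mezzanine and LOM, and the `max_passes` limit are all assumptions made for the example.

```python
from typing import Callable, Iterable, Optional

# Boot order as described in the text: PXE first (Mezzanine card or
# on-board LOM), SATA afterwards. Labels and the Mezzanine-before-LOM
# ordering are assumptions for illustration.
BOOT_ORDER = ("pxe-mezzanine", "pxe-lom", "sata")


def find_boot_device(
    is_bootable: Callable[[str], bool],
    order: Iterable[str] = BOOT_ORDER,
    max_passes: Optional[int] = None,
) -> Optional[str]:
    """Cycle through the boot order until a device reports as bootable.

    max_passes bounds the loop for demonstration purposes; with
    max_passes=None the loop retries until something boots.
    """
    devices = tuple(order)
    passes = 0
    while max_passes is None or passes < max_passes:
        for device in devices:
            if is_bootable(device):
                return device
        passes += 1
    return None


attempts = []


def pxe_comes_up_late(device: str) -> bool:
    """Hypothetical probe: PXE on the Mezzanine card answers from the 4th attempt."""
    attempts.append(device)
    return device == "pxe-mezzanine" and len(attempts) >= 4


chosen = find_boot_device(pxe_comes_up_late, max_passes=3)
print(chosen)  # pxe-mezzanine
```

In this simulated run the first pass finds nothing, and the second pass boots from the network as soon as the provisioning server responds, which is why a freshly racked server can simply wait for the deployment system to come to it.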

This makes network-based automated deployment the natural choice, which is what you would want anyway when managing a fleet of servers. (There are, of course, overrides, and the BMC offers virtual devices as well.)

In this case, the lab uses a single 10G Mezzanine adapter by Mellanox, which is the most common adapter at Facebook. But it could also use dual 10G, 40G or the latest 25G to 100G standards, as all of those are available in the OCP Mezzanine format.

As the demo unfolded, both Red Hat and Canonical successfully deployed their respective flavors of OpenStack: Red Hat’s RHEL using CloudForms, and Canonical’s Ubuntu using MAAS and Juju. They can even manage the Facebook Wedge switches, which also run a form of Linux.

Since the OCP Leopard motherboard already supports installation of Microsoft Windows or VMware through manufacturers such as Wiwynn, this really opens the doors to wider adoption.

Using just these few building blocks of compute, storage, network and rack we are confident that most software-defined solutions are within reach of a wider customer base.

To the lab

Back to the Friday at Building 16. After tasting the food at all the different types of restaurants that the campus has to offer, it is time to go back to work.

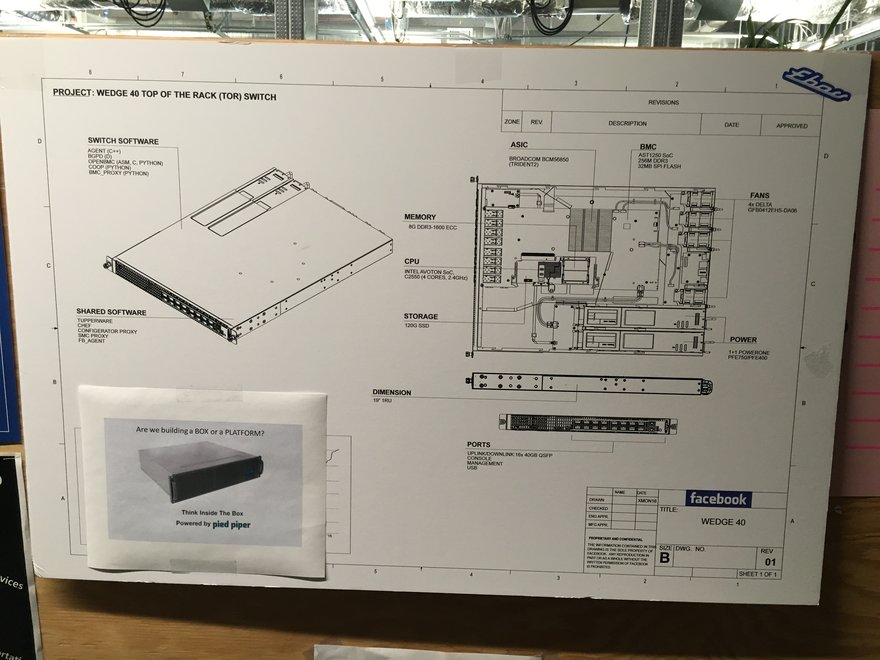

In the networking lab, we see the first Wedge-40 white box switch ever produced. It is signed by everyone who contributed to it. Another network lab holds a lot of new, colorful Wedge-100 switches. Engineers are working on other products yet to be opened up to the public. There is also a telco lab for designing cellular networks, and another lab for specialized storage systems based on everything from regular hard drives to Blu-ray and all-flash NVMe.

On the other side lies the OCP Validation Lab. It is a temporary location since they just started working on this. There are two OCP racks in the room that are almost fully populated with servers, storage and networking equipment.

A bit further down the hall in Building 17 is the latest “Area 404” and through the large windows, we can see they are working on much more than just OCP gear.

For manufacturing, Facebook teams up with the ODMs that also build your laptops, servers and mobile phones. Circle B teams up with these and other partners to deliver OCP-based solutions to sub-hyperscale data center operators.

Of course, anyone interested in more details can go to the OCP Facebook community page and watch the recorded presentations, or find them in the OCP Wiki.

Menno Kortekaas is a technical director at Circle B, a Dutch systems integration company that specializes in Open Compute hardware.