I keep hearing that Edge computing is the next big thing - and specifically, in-network edge computing models such as MEC. (See Box for a list of different types of "Edge.") I hear it from network vendors, telcos, some consultants, blockchain-based startups and others. But, oddly, very rarely from developers of applications or devices.

My view is that it's important, but it's also being overhyped. Network-edge computing will only ever be a small slice of the overall cloud and computing domain. And because it's small, it will likely be an addition to (and integrated with) web-scale cloud platforms. We are very unlikely to see Edge-first providers become "the next Amazon Web Services, only distributed."

The myth of Multi-access Edge Computing

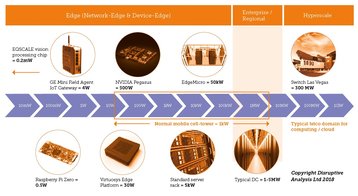

Why do I think it will be small? Because I've been looking at it through a different lens to most: power. It's a metric used by those at the top and bottom ends of the computing industry, but only rarely by those in the middle, such as network owners. This means they're ignoring a couple of orders of magnitude.

Cloud computing involves huge numbers of servers, processors, equipment racks, and square meters of floorspace. But the figure that gets used most among data-center folk is probably power consumption in watts, or more usually kW, MW or GW.

Power is useful, as it covers the needs not just of compute CPUs and GPUs, but also storage and networking elements in data centers. Organizing and analyzing information is ultimately about energy, so it's a valid, top-level metric.

The world's big data centers have a total power consumption of roughly 100GW. A typical facility might have a capacity of 30MW, but the world's largest data centers can use over 100MW each, and there are plans for locations with 600MW or even 1GW. They're not all running at full power, all the time of course.

This growth is driven by an increase in the number of servers and racks, but it also reflects power consumption for each server, as chips get more powerful. Most racks use 3-5kW of power, but some can go as high as 20kW if power - and cooling - is available.

So "the cloud" needs 100GW, a figure that is continuing to grow rapidly.

Meanwhile, smaller, regional data-centers in second- and third-tier cities are growing and companies and governments often have private data-centers as well, using about 1MW to 5MW each.

The "device edge" is the other end of the computing power spectrum. When devices use batteries, managing the power-budget down to watts or milliwatts is critical.

Sensors might use less than 10mW when idle, and 100mW when actively processing data. A Raspberry Pi might use 0.5W, a smartphone processor might use 1-3W, an IoT gateway (controlling various local devices) could consume 5-10W, a laptop might draw 50W, and a decent crypto mining rig might use 1kW.

Beyond this, researchers are working on sub-milliwatt vision processors, and ARM has designs able to run machine-learning algorithms on very low-powered devices.

But perhaps the most interesting "device edge" is the future top-end Nvidia Pegasus board, aimed at self-driving vehicles. It is a 500W supercomputer. That might sound like a lot of electricity, but it's still less than one percent of the engine power on most cars. A top-end Tesla P100D puts over 500kW to the wheels in "ludicrous mode." Cars' aircon alone might use 2kW.

Although relatively small, these device-edge computing platforms are numerous. There are billions of phones, and hundreds of millions of vehicles and PCs. Potentially, we'll get tens of billions of sensors.

So at one end we have milliwatts multiplied by millions of devices, and at the other end we have gigawatts in a few centralized facilities.

So what about the middle, where the network lives? There are many companies talking about MEC (Multi-access Edge Computing), with servers designed to run at cellular base stations, network aggregation points, and also in fixed-network nodes.

Some are "micro data centers" capable of holding a few racks of servers near the largest cell towers. The very largest might be 50kW shipping-container sized units, but those will be pretty rare and will obviously need a dedicated power supply.

The actual power supply available to a typical cell tower might be 1-2kW. The radio gets first call, but if perhaps 10 percent could be dedicated to a compute platform (a generous assumption), we get 100-200W. In other words, cell tower Edge-nodes mostly can’t support a container data center, and most such nodes will be less than half as powerful as a single car's computer.
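The cell-tower arithmetic can be sketched in a few lines. This is only an illustration of the figures quoted in this article (1-2kW per site, a 10 percent compute share, a 500W in-car computer); the function and constant names are hypothetical.

```python
# Rough compute budget at a cell site, using the article's figures.
# All names here are illustrative, not taken from any real tool or API.

PEGASUS_W = 500  # in-car computer quoted in the article, in watts


def compute_budget_w(site_power_w, compute_percent=10):
    """Watts left for compute if `compute_percent` of site power is spared."""
    return site_power_w * compute_percent / 100


for site_w in (1_000, 2_000):
    budget = compute_budget_w(site_w)
    ratio = budget / PEGASUS_W
    print(f"{site_w} W site: {budget:.0f} W for compute, "
          f"{ratio:.0%} of the in-car computer")
```

Even at the top of the range, the edge node gets 200W, which is well under half of the car's 500W.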

Cellular small-cells, home gateways, cable street-side cabinets or enterprise "white boxes" will have even smaller modules: for these, 10W to 30W is more reasonable.

Five years from now, there could be roughly 150GW of large-scale data centers, plus a decent number of midsize regional data-centers, plus private enterprise facilities.

And we could have 10 billion phones, PCs, tablets and other small end-points contributing to a distributed edge. We might also have 10 million almost-autonomous vehicles, with a lot of compute.

Not the New Frontier

Now, imagine 10 million Edge compute nodes, at cell sites large and small, built into Wi-Fi APs or controllers, and perhaps in cable/fixed streetside cabinets. They will likely have power ratings between 10W and 300W, although the largest will be few in number. Assume 100W on average, for a simpler calculation.

And let's add in 20,000 container-sized 50kW units, or repurposed central-offices-as-data centers, as well.

Under these optimistic assumptions (see Box 2: Energy for the Edge) we end up with a network edge that accounts for less than one percent of total aggregate compute power. With more pessimistic assumptions, it might easily be just 0.1 percent.
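As a rough check, the tally can be worked through using the figures quoted in this article. One input is not stated in the text: the average draw of the 10 billion small end-points. The 5W used here is purely an assumption for illustration (Box 2 may use a different value), and the regional and private data centers are left out because no total is given for them.

```python
# Back-of-envelope tally of compute power by tier, in watts.
# Figures come from the article except ASSUMED_DEVICE_AVG_W, which is
# a hypothetical placeholder chosen only to make the sum concrete.

GW = 10**9

# Network edge: 10 million nodes at 100 W, plus 20,000 containers at 50 kW.
network_edge_w = 10_000_000 * 100 + 20_000 * 50_000

# Large-scale cloud data centers, five years out.
cloud_w = 150 * GW

# 10 million near-autonomous vehicles with a 500 W computer each.
vehicles_w = 10_000_000 * 500

# 10 billion phones, PCs, tablets and other end-points.
ASSUMED_DEVICE_AVG_W = 5  # assumption, not from the article
devices_w = 10_000_000_000 * ASSUMED_DEVICE_AVG_W

total_w = network_edge_w + cloud_w + vehicles_w + devices_w
edge_share = network_edge_w / total_w

print(f"Network edge: {network_edge_w / GW:.1f} GW")
print(f"All tiers:    {total_w / GW:.1f} GW")
print(f"Edge share:   {edge_share:.2%}")
```

With these inputs the network edge comes to 2GW out of roughly 207GW. A higher device average, or adding the regional and private facilities, pushes the share lower still.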

Admittedly this is a crude analysis. A lot of devices will be running idle most of the time, and laptops are often switched off entirely. But equally, network-edge computers won't be running at 100 percent, 24x7 either.

At a rough, order-of-magnitude level, anything more than one percent of total power will simply not be possible, unless there are large-scale upgrades to the network infrastructure's power sources, perhaps installed at the same time as backhaul upgrades for 5G, or deployment of FTTH.

Could this 0.1-1 percent of computing be of such pivotal importance that it brings everything else into its orbit and control? Could the "Edge" really be the new frontier? I think not.

In reality, the reverse is more likely. Either device-based applications will offload certain workloads to the network, or the hyperscale clouds will distribute certain functions.

There will be some counter-examples, where the network-edge is the control point for certain verticals or applications - say some security functions, as well as an evolution of today's CDNs. But will IoT management, or AI, be concentrated in these Edge nodes? It seems improbable.

There will be almost no applications that run only in the network-edge - it’ll be used just for specific workloads or microservices, as a subset of a broader multi-tier application.

The main compute heavy-lifting will be done on-device, or on-cloud. Collaboration between Edge Compute providers and industry/hyperscale cloud will be needed, as the network-edge will only be a component in a bigger solution, and will only very rarely be the most important component.

One thing is definite: mobile operators won’t become distributed quasi-Amazons, running image-processing for all nearby cars or Industry 4.0 robots in their networks, linked via 5G.

This landscape of compute resource may throw up some unintended consequences. Ironically, it seems more likely that a future car's hefty computer, and abundant local power, could be used to offload tasks from the network, rather than vice versa.

Dean Bubley is the founder of Disruptive Analysis.

This article appeared in the August/September issue of DCD magazine.